Qwen 2 is a family of open-source large language models (LLMs) developed by Alibaba Cloud, serving as the second-generation successor in the Qwen AI model ecosystem. These models are Transformer-based decoder-only LLMs, trained with next-token prediction on massive multilingual data.

Qwen 2 builds on the strengths of its predecessors (Qwen 1 and Qwen 1.5) by expanding model sizes and improving training stability and efficiency. Most Qwen 2 checkpoints are released under the permissive Apache 2.0 license (the 72B models keep the original Qianwen license). The series provides both base models (pre-trained on raw data, not instruction-aligned) and instruction-tuned models (fine-tuned to follow human instructions for chat and task completion). It also extends into multimodal domains: the Qwen team has introduced vision-language and audio-language variants (Qwen2-VL and Qwen2-Audio) as part of the broader ecosystem.

In this guide, we provide a high-level and practical overview of Qwen 2’s architecture, capabilities, and integration workflows, with a focus on developer-friendly insights for using Qwen 2 in applications.

Architecture Overview

Model Sizes and Architecture: The Qwen 2 release includes five model variants: four dense Transformer models of 0.5B, 1.5B, 7B, and 72B parameters, and one Mixture-of-Experts model of 57B (with 14B parameters activated per token). The smaller 0.5B and 1.5B versions are optimized for lightweight deployments (e.g. mobile or embedded devices), while the 7B and 72B models target higher-end GPU servers. All models share the same Transformer decoder architecture with multiple self-attention layers and feed-forward networks, but scale up in depth and width for the larger parameter counts.

Notably, Qwen 2 uses SwiGLU activation functions and pre-normalization with RMSNorm for training stability, following modern best practices. The Mixture-of-Experts (MoE) variant replaces each feed-forward network with a set of expert networks and routes each token to a subset of them, increasing model capacity without a proportional increase in per-token compute. This 57B MoE model (denoted 57B-A14B) has 57 billion total parameters; each token is routed to 8 of 64 experts (in addition to shared experts that process every token), so only about 14B parameters are used per inference step. It sits between the 7B and 72B dense models in both performance and resource usage.
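To make the routing idea concrete, here is a minimal NumPy sketch of generic top-k expert routing, assuming a softmax router that picks 8 of 64 experts per token as described above. It is not Qwen2's implementation: the real layer also has shared experts and learned feed-forward experts, and it may not renormalize the selected weights the way this sketch does. The names route_tokens and moe_layer are hypothetical.

```python
import numpy as np

def route_tokens(router_logits: np.ndarray, top_k: int = 8):
    """Pick top_k experts per token; returns (expert_ids, weights), each (tokens, top_k)."""
    z = router_logits - router_logits.max(axis=-1, keepdims=True)  # numerically stable softmax
    probs = np.exp(z)
    probs /= probs.sum(axis=-1, keepdims=True)
    expert_ids = np.argsort(-probs, axis=-1)[:, :top_k]             # most probable experts first
    weights = np.take_along_axis(probs, expert_ids, axis=-1)
    weights /= weights.sum(axis=-1, keepdims=True)                  # renormalize over chosen experts
    return expert_ids, weights

def moe_layer(x: np.ndarray, router_w: np.ndarray, experts: list, top_k: int = 8) -> np.ndarray:
    """x: (tokens, d); router_w: (d, num_experts); experts: callables mapping (d,) -> (d,)."""
    expert_ids, weights = route_tokens(x @ router_w, top_k)
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        for e, w in zip(expert_ids[t], weights[t]):
            out[t] += w * experts[e](x[t])  # only the top_k selected experts run for this token
    return out

# Toy setup: 64 experts, each a random linear map standing in for a feed-forward network
rng = np.random.default_rng(0)
d, num_experts = 16, 64
experts = [lambda v, W=rng.standard_normal((d, d)) / np.sqrt(d): v @ W for _ in range(num_experts)]
router_w = rng.standard_normal((d, num_experts))
tokens = rng.standard_normal((4, d))
y = moe_layer(tokens, router_w, experts)
```

Only the selected experts do work for a given token, which is why per-token compute tracks the active parameter count (about 14B) rather than the total (57B).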

Tokenizer and Input Length: Qwen 2 employs a byte-level Byte Pair Encoding (BPE) tokenizer, the same one used by Qwen 1, with a vocabulary of 151,643 regular tokens plus 3 control tokens. It encodes multilingual text and code compactly, and because it falls back to raw bytes, any input string can be tokenized without unknown tokens. All Qwen 2 models share this tokenizer, and their pre-training data covers roughly 30 languages (27 in addition to English and Chinese), so they handle many languages out of the box. Regarding context window, Qwen 2 supports very long context lengths: models were initially trained with a 4,096-token context, which was extended to 32,768 tokens in the final stage of pre-training to strengthen long-text handling.

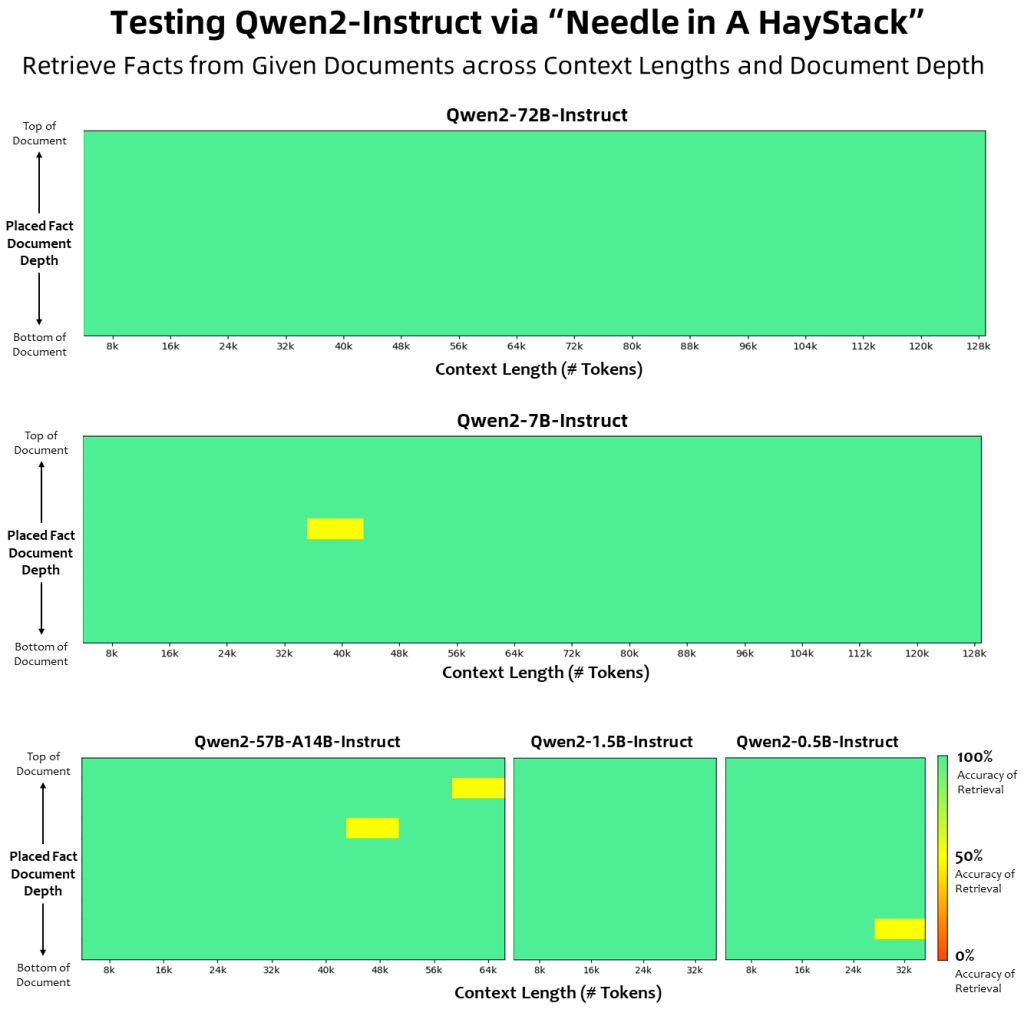

Thanks to the long-context techniques described below, the larger Qwen 2 models can extrapolate to 64K or 128K tokens of context without significant performance degradation. The instruction-tuned 7B and 72B models officially support context windows of up to 128K (131,072) tokens, the 57B-A14B MoE model up to 64K, and the 0.5B and 1.5B models up to 32K (32,768) tokens. This enormous context capacity makes Qwen 2 suitable for tasks like long document summarization and multi-document retrieval, assuming the inference infrastructure can accommodate the memory requirements.

Efficiency Improvements (GQA, DCA, and YaRN): Qwen 2’s architecture integrates several optimizations to improve inference speed and memory usage, especially for long contexts. The first is Grouped Query Attention (GQA), which replaces standard multi-head attention with a design in which groups of query heads share a single key/value head. Having fewer key/value heads shrinks the key-value cache stored for every token, lowering the memory footprint during generation. This translates to faster throughput and the ability to handle longer prompts without exhausting GPU memory. All Qwen 2 models use GQA; for example, the 72B model uses 64 query heads but only 8 key-value heads, so its KV cache per token is one-eighth the size it would be with one key/value head per query head.
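The memory saving is easy to estimate. The sketch below computes KV-cache size from the model shape, assuming Qwen2-72B's published configuration (80 layers, 64 query heads, 8 KV heads, head dimension 128) and 16-bit (2-byte) cache entries. kv_cache_bytes is a hypothetical helper, and real inference engines add some overhead on top of these figures.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes of K and V cache for one sequence (2 tensors per layer)."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value

# Assumed Qwen2-72B shape: 80 layers, 64 query heads, 8 KV heads, head_dim 128
LAYERS, Q_HEADS, KV_HEADS, HEAD_DIM = 80, 64, 8, 128

mha = kv_cache_bytes(LAYERS, Q_HEADS, HEAD_DIM, seq_len=1)   # one K/V head per query head
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=1)  # 8 shared K/V heads (GQA)
kv_128k = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=131_072)

print(f"per token: MHA {mha / 2**20:.2f} MiB, GQA {gqa / 2**10:.0f} KiB")  # 2.50 MiB vs 320 KiB
print(f"128K-token sequence with GQA: {kv_128k / 2**30:.0f} GiB")          # 40 GiB
```

Under these assumptions a single 128K-token request needs about 40 GiB of cache with GQA; with one K/V head per query head it would need eight times as much.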

In addition, Qwen 2 implements Dual Chunk Attention (DCA) combined with the YaRN scaling mechanism to manage very long sequences. DCA splits a long sequence into chunks of manageable length and remaps relative positions for attention within and across chunks, so that they stay within the range seen during training. YaRN (Yet another RoPE extensioN) rescales the rotary position frequencies and adjusts the attention temperature to improve length extrapolation, helping the model stay stable and coherent as inputs approach the 100K+ token range. Together, GQA, DCA, and YaRN allow Qwen 2 to process long inputs (64K to 128K tokens) with only minimal loss in perplexity and performance.
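The Qwen2-Instruct model cards describe enabling YaRN by adding a rope_scaling entry to the checkpoint's config.json, with vLLM as the recommended serving engine. The helper below is a small sketch that applies that edit to a local copy of the config; the function name enable_yarn is hypothetical. A factor of 4.0 over the original 32,768 positions corresponds to the 128K window. Because this form of scaling is static (it applies regardless of input length), it may slightly affect quality on short texts, so enable it only when you need long contexts.

```python
import json
from pathlib import Path

def enable_yarn(config_path: str, factor: float = 4.0, original_max: int = 32768) -> dict:
    """Add a YaRN rope_scaling entry to a local config.json, rewriting the file in place."""
    path = Path(config_path)
    config = json.loads(path.read_text())
    config["rope_scaling"] = {
        "factor": factor,                                   # 4.0 x 32,768 = 131,072 positions
        "original_max_position_embeddings": original_max,   # context length used in pre-training
        "type": "yarn",
    }
    path.write_text(json.dumps(config, indent=2))
    return config

# Usage (hypothetical local path to a downloaded checkpoint):
# enable_yarn("Qwen2-7B-Instruct/config.json")
```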

Shared Features and Training: All Qwen 2 models use rotary position embeddings (RoPE) for position encoding, with the RoPE base frequency raised from 10,000 to 1,000,000 to support extended context lengths, and include learned QKV biases in the attention layers. The embedding matrices are untied (separate input and output embeddings) for the larger models to improve expressiveness, whereas the smallest models keep embeddings tied to reduce parameters. Qwen 2 models were pre-trained on an extremely large and diverse corpus of over 7 trillion tokens spanning open-domain text, code, and multilingual content. Compared to earlier Qwen editions, Qwen 2’s training data places greater emphasis on programming code and mathematical reasoning data, which is believed to boost the model’s logical and problem-solving abilities.
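The sketch below shows the core RoPE operation in NumPy, using the "rotate half" layout found in Hugging Face's Llama-style implementations and the 1,000,000 base as an assumed default. It is illustrative rather than Qwen2's code. Its key property is that the dot product of a rotated query and key depends only on their relative distance, not their absolute positions.

```python
import numpy as np

def apply_rope(x: np.ndarray, pos: int, base: float = 1_000_000.0) -> np.ndarray:
    """Rotate one head vector x (even length d) to encode absolute position pos."""
    d = x.shape[-1]
    inv_freq = base ** (-np.arange(0, d, 2) / d)       # one frequency per dimension pair
    angles = pos * inv_freq                             # shape (d/2,)
    cos = np.concatenate([np.cos(angles), np.cos(angles)])
    sin = np.concatenate([np.sin(angles), np.sin(angles)])
    x1, x2 = x[: d // 2], x[d // 2 :]
    rotated_half = np.concatenate([-x2, x1])            # pairs are (x[i], x[i + d/2])
    return x * cos + rotated_half * sin

rng = np.random.default_rng(0)
q, k = rng.standard_normal(128), rng.standard_normal(128)  # head_dim 128 (assumed)
near = apply_rope(q, 3) @ apply_rope(k, 10)        # distance 7
far = apply_rope(q, 1003) @ apply_rope(k, 1010)    # same distance 7, larger absolute positions
print(np.isclose(near, far))  # the attention scores match because only the offset matters
```

A larger base stretches the wavelengths of the slowest-rotating dimension pairs, which is one reason the raised base helps the model distinguish positions across longer contexts.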

After pre-training, each model underwent supervised fine-tuning and Direct Preference Optimization (DPO) on instruction-following datasets. This post-training alignment teaches the model to follow user instructions and conversational cues effectively, and it acts as an RLHF-like process that aligns the model output with human preferences (while using a more stable direct optimization objective). The result is that Qwen 2 instruct models can respond helpfully and safely in interactive settings without additional prompting tricks. (For example, Qwen 2 was fine-tuned on single-turn and multi-turn chat data, so it understands roles like system, user, assistant in a conversation context.)
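Qwen 2 chat models expect conversations in the ChatML layout, where each turn is wrapped in <|im_start|>role and <|im_end|> markers. The hand-rolled formatter below (to_chatml is a hypothetical helper) shows what such a prompt looks like. In practice, call tokenizer.apply_chat_template, which renders the official template shipped with the model and may add details this sketch omits, such as a default system prompt.

```python
def to_chatml(messages: list, add_generation_prompt: bool = True) -> str:
    """Render role/content messages in the ChatML layout used by Qwen2 chat models."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        parts.append("<|im_start|>assistant\n")  # cue the model to write the assistant turn
    return "".join(parts)

prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is grouped query attention?"},
])
print(prompt)
```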

Vision and Audio Extensions: Although Qwen 2’s core release focuses on text-based LLMs, the Qwen family has been extended with multimodal models built on it. Alibaba has released Qwen2-VL, a vision-language model that can interpret images alongside text, and Qwen2-Audio, an audio-language model for speech and audio understanding. These variants pair a vision or audio encoder with a Qwen 2 language model (Qwen2-VL comes in sizes such as 2B and 7B, Qwen2-Audio at 7B) and are trained on image-text and audio-text data respectively.

For example, Qwen2-VL-7B can take an image as input and generate a textual description or answer questions about the image. From a developer’s perspective, these multimodal models enable tasks such as image captioning, visual question answering, or transcribing and analyzing audio using the same family of models. The multimodal extensions maintain compatibility with the Qwen 2 tokenizer and can be accessed via Hugging Face as separate model checkpoints (Qwen/Qwen2-VL-7B-Instruct). While not every Qwen 2 use-case will require vision or audio features, it’s notable that the Qwen ecosystem is evolving towards an “omni-modal” AI that integrates text, vision, audio, and even video (as seen in the later Qwen2.5-Omni release).

Performance & Benchmarks

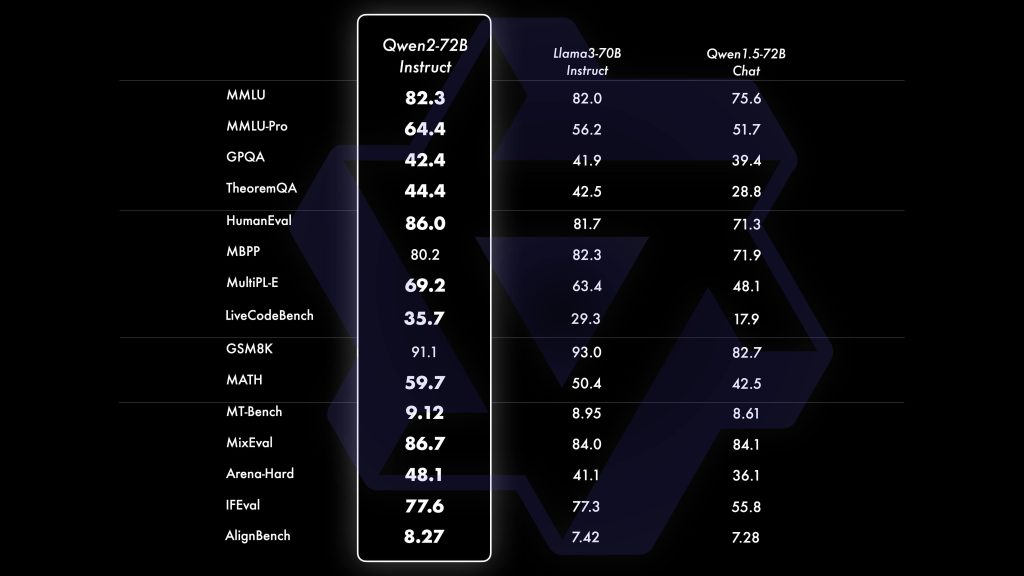

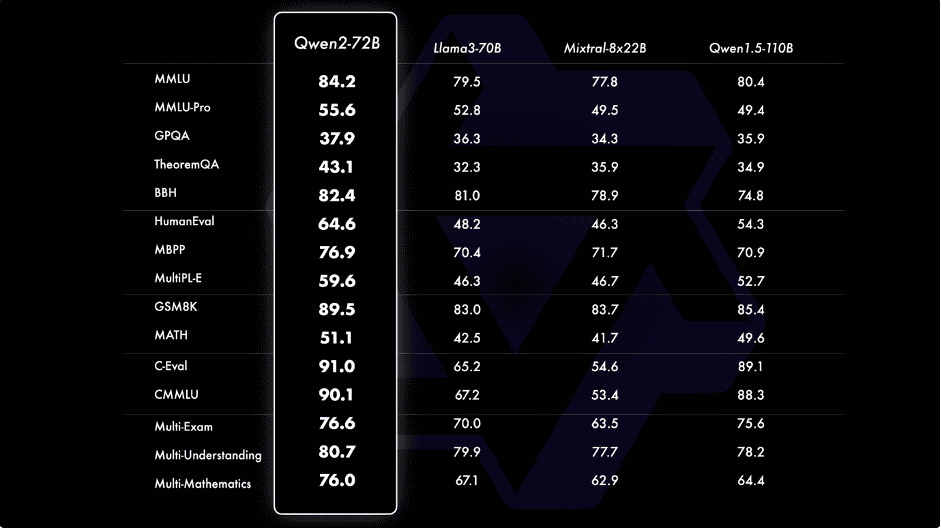

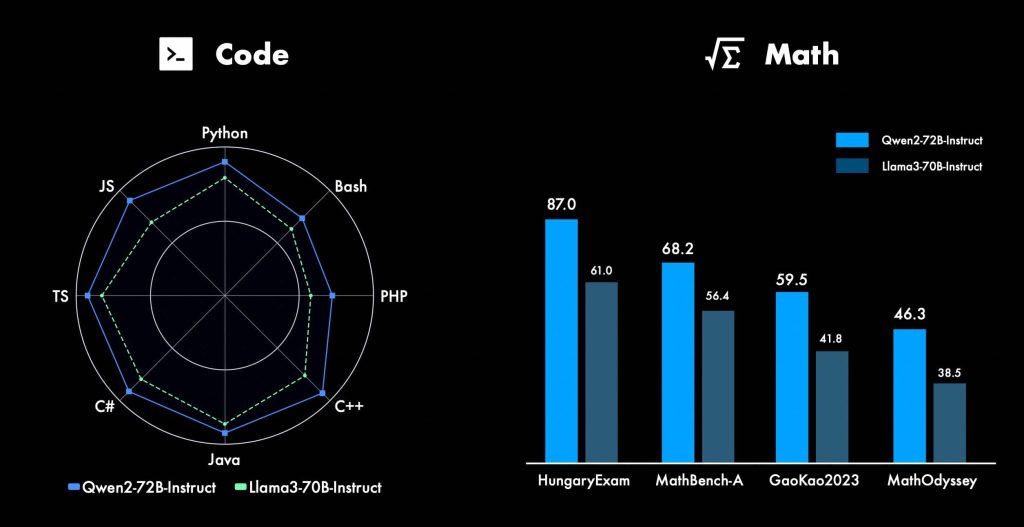

At its release in June 2024, Qwen 2 showed state-of-the-art performance among open LLMs in its class, and it is competitive with many proprietary models on a range of benchmarks. Official evaluations from the Qwen team show that the largest model, Qwen2-72B-Instruct, achieves excellent results on both chat-oriented tests and knowledge benchmarks.

For instance, Qwen2-72B-Instruct scores about 9.1 on MT-Bench (a multi-turn chat evaluation) and 48.1 on Arena-Hard, indicating very strong conversational ability. Its coding ability is reflected in a score of 35.7 on LiveCodeBench, a benchmark built from competitive programming problems.

Meanwhile, the base pre-trained model Qwen2-72B (without instruction tuning) demonstrates broad knowledge and reasoning skills: it scored 84.2 on the MMLU academic exam benchmark (5-shot), 89.5 on GSM8K math word problems, 64.6 on HumanEval programming challenges, and 82.4 on the Big-Bench Hard (BBH) suite. At its release in mid-2024, these numbers placed Qwen2-72B at or near the top of the open-model leaderboard, indicating a closing gap with the best closed models in knowledge, math, and coding domains.

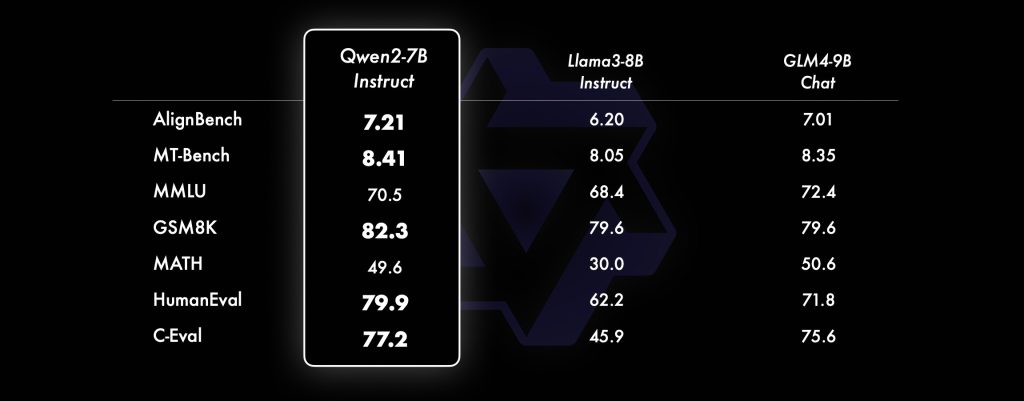

Even the mid-sized models in the Qwen 2 family perform impressively given their parameter counts. For example, the Qwen2-7B base model (7.6B parameters) scores 70.3 on MMLU, ahead of many other open models of similar size and even some larger ones. The 7B models also do well on coding tasks (HumanEval and MBPP) and multilingual evaluations; notably, Qwen2-7B scores over 83 on the Chinese C-Eval exam, reflecting the benefit of its multilingual training focus.

The smallest models (1.5B and 0.5B) naturally have lower absolute performance, but they have been noted to handle basic tasks and follow instructions surprisingly well given their size. Those tiny models enable use-cases where memory or compute is extremely limited (e.g. on a smartphone or IoT device) with the trade-off of limited reasoning depth.

All benchmark figures cited here are self-reported by the Qwen team and should be read with that in mind. They indicate that Qwen 2 has particularly strong capabilities in code generation and math reasoning (thanks to targeted data enrichment during training) and maintains robust performance across languages (due to its multilingual pre-training on over 7 trillion tokens).

In practical terms, developers can expect Qwen 2’s largest model to produce high-quality outputs for complex tasks, while the 7B model is a solid choice for many applications that require a balance between capability and efficiency. For more detailed comparisons, the Qwen technical report shows Qwen 2 outperforming previous Qwen versions and other open LLMs on a wide array of tasks.

(This guide avoids direct head-to-head comparisons with specific competing models and focuses on Qwen 2’s own merits.)

Developer Use Cases

Qwen 2 is a general-purpose language model family, which means it can be applied to a broad spectrum of AI tasks. Below we highlight key use cases for developers, along with notes on how Qwen 2 can add value in each scenario:

Text Generation and Summarization: Qwen 2 can generate human-like text for content creation, report writing, and summarizing long documents. Developers can use it to produce articles, marketing copy (keeping the tone professional as needed), or to condense lengthy texts into concise summaries. The model’s long context capability (especially in 7B and 72B variants) is a major advantage for summarization of large files or transcripts, since you can feed very long texts into the model in one go. Using the instruction-tuned model, you simply prompt Qwen 2 with a directive like “Summarize the following document…” and the relevant text, and it will attempt to produce a coherent summary.
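When a document is longer than the context window you can afford, a common fallback is map-reduce summarization: summarize overlapping chunks, then summarize the summaries. The sketch below only builds the prompts. The helper names are hypothetical, word counts stand in for tokens (use the model's tokenizer for real budgets), and each prompt would be sent to a Qwen 2 instruct model with a generation call like the ones in the Python integration section.

```python
def chunk_words(text: str, max_words: int = 3000, overlap: int = 200) -> list:
    """Split text into overlapping windows of words (a rough proxy for tokens)."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + max_words]) for i in starts]

def map_prompts(chunks: list) -> list:
    """One summarization request per chunk (the 'map' step)."""
    return [f"Summarize the following section in at most 5 bullet points:\n\n{c}" for c in chunks]

def reduce_prompt(partial_summaries: list) -> str:
    """Merge the per-chunk summaries into one final request (the 'reduce' step)."""
    joined = "\n\n".join(f"Section {i + 1}:\n{s}" for i, s in enumerate(partial_summaries))
    return f"Combine these section summaries into one coherent summary:\n\n{joined}"

document = " ".join(f"w{i}" for i in range(5000))  # stand-in for a long text
chunks = chunk_words(document)
prompts = map_prompts(chunks)
```

The overlap keeps sentences that straddle a chunk boundary visible in both neighbouring chunks, at the cost of a little duplicated work.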

Chatbots and Conversational Agents: Qwen 2-Instruct models are well-suited for building chatbots, virtual assistants, and AI agents that interact in natural language. They have been fine-tuned on multi-turn dialogue, enabling them to maintain context over a conversation and follow user instructions or queries. You can integrate Qwen 2 as the brain of a chatbot to answer customer questions, provide technical support, or even role-play as a persona in an interactive fiction game. Furthermore, Qwen 2 supports tool use and function calling (in the Qwen-Agent framework), meaning advanced agents can be built where the model decides to invoke external tools/APIs based on the user’s request. Combined with the model’s knowledge (general and domain-specific), this makes Qwen 2 a strong foundation for AI assistants and autonomous agent frameworks.
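Function calling ultimately means parsing a structured tool request out of the model's reply and executing it. The sketch below assumes a hypothetical JSON shape {"name": ..., "arguments": {...}} and a hypothetical get_weather tool. Qwen-Agent uses its own prompt format and parser, so treat this only as an outline of the dispatch step, not its API.

```python
import json

def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather API."""
    return f"Sunny in {city}"

TOOLS = {"get_weather": get_weather}  # registry of callable tools by name

def dispatch_tool_call(model_output: str) -> str:
    """Run the tool named in a JSON call like {"name": ..., "arguments": {...}}."""
    call = json.loads(model_output)
    func = TOOLS.get(call.get("name"))
    if func is None:
        return f"Error: unknown tool {call.get('name')!r}"  # report back instead of crashing
    return func(**call.get("arguments", {}))

print(dispatch_tool_call('{"name": "get_weather", "arguments": {"city": "Hangzhou"}}'))
```

The tool's result would then be appended to the conversation so the model can phrase a final answer for the user.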

Retrieval-Augmented Generation (RAG) for Q&A: With its large context window and strong comprehension, Qwen 2 is excellent for knowledge-based Q&A systems. In a RAG pipeline, you can use Qwen 2 to synthesize answers from retrieved documents or database records. For example, given a user query, your system can fetch relevant paragraphs from a knowledge base and prepend them to Qwen’s prompt, and the model will then generate an answer grounded in that information. Because Qwen 2 can handle very long inputs, you can supply many retrieved documents at once (potentially dozens of pages) within the 32K or 128K token limit. This enables building enterprise search assistants or documentation chatbots that draw on large collections of data. The model’s multilingual ability also means it can perform RAG in languages other than English, assuming your retrieval corpus is multilingual.
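The prompt-assembly step of such a pipeline can be sketched as follows. This is a minimal illustration assuming a toy word-overlap retriever (a real system would use vector or keyword search) and a character budget as a stand-in for tokens; rank_by_overlap and build_rag_prompt are hypothetical helpers.

```python
def rank_by_overlap(question: str, docs: list) -> list:
    """Toy retriever: order documents by shared lowercase words with the question."""
    q_words = set(question.lower().split())
    return sorted(docs, key=lambda d: len(q_words & set(d.lower().split())), reverse=True)

def build_rag_prompt(question: str, docs: list, max_chars: int = 20_000) -> str:
    """Pack ranked passages into a grounded prompt without exceeding a size budget."""
    context, used = [], 0
    for i, doc in enumerate(docs, start=1):
        block = f"[{i}] {doc}"
        if used + len(block) > max_chars:
            break  # stop before the budget is exceeded
        context.append(block)
        used += len(block)
    return (
        "Answer the question using only the numbered sources below. "
        "Cite sources like [1]. If the sources do not contain the answer, say so.\n\n"
        + "\n\n".join(context)
        + f"\n\nQuestion: {question}"
    )

docs = ["Qwen2 uses grouped query attention.", "Bananas are rich in potassium."]
question = "Which attention does Qwen2 use?"
prompt = build_rag_prompt(question, rank_by_overlap(question, docs))
```

Numbering the sources and asking for citations makes it easier to check that the answer is grounded in what was retrieved.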

Code Assistance and Generation: Software developers can leverage Qwen 2 for programming support, such as generating code snippets, explaining code, or acting as a pair-programmer in an IDE. Qwen 2 was trained on a substantial volume of source code (in multiple languages), resulting in high coding benchmark scores. It can produce functions or classes given a description, suggest how to fix a bug, or translate code from one language to another. Integration-wise, you could use Qwen 2 in a VS Code extension or a CLI tool to provide on-demand code completions or documentation. The Qwen family also includes specialized models such as Qwen2-Math and the Qwen2.5-Coder series, which are further trained for mathematical reasoning and coding tasks respectively; those can be employed if a project demands more focused performance in those areas. In general, the 7B model is capable of basic coding help, but for complex programming tasks (especially ones requiring understanding large code context or generating lengthy code), the 72B model or a coder-specialized model is likely to give better results.

Data Processing and Content Transformation: Qwen 2 can assist with various NLP data processing tasks: text classification, information extraction, format transformation, etc. For example, you can prompt the model to extract key entities from a document (people, dates, product names), convert unstructured text into JSON format, or categorize customer feedback into topics. While these tasks can sometimes be done with smaller fine-tuned models, using Qwen 2 with an appropriate prompt can achieve high accuracy without needing task-specific training. The model’s ability to follow instructions means you can guide it with clear examples or formatting requirements. Developers might use Qwen 2 as a flexible “text processing engine” in pipelines – e.g. feed it an email and ask it to output a summary plus the sentiment of the email, all in one go. Qwen 2’s multilingual support is beneficial here as well, since it can process text in many languages for global applications.
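Model replies often wrap JSON in prose or a Markdown code fence, so it helps to extract and validate the object before using it. The sketch below is one defensive approach; extract_json and the REQUIRED_KEYS schema are hypothetical, and a stricter pipeline might validate with a schema library instead.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence, built here so the snippet embeds cleanly

def extract_json(model_output: str) -> dict:
    """Parse the JSON object in a model reply, preferring a fenced block if present."""
    fenced = re.search(FENCE + r"(?:json)?\s*(\{.*?\})\s*" + FENCE, model_output, re.DOTALL)
    candidate = fenced.group(1) if fenced else model_output
    start, end = candidate.find("{"), candidate.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(candidate[start:end + 1])

REQUIRED_KEYS = {"name", "date", "product"}  # hypothetical extraction schema

reply = "Here you go:\n" + FENCE + 'json\n{"name": "Li Wei", "date": "2024-06-07", "product": "Qwen2"}\n' + FENCE
record = extract_json(reply)
missing = REQUIRED_KEYS - set(record)  # empty when every required field is present
```

If parsing fails or fields are missing, a common pattern is to re-prompt the model with the error message and ask it to return corrected JSON.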

Embeddings and Semantic Search: Although Qwen 2 is primarily a generative model, you can also use it (particularly the base model) to generate embedding vectors for semantic similarity tasks. By extracting the hidden states and pooling the model’s last layer (some open-source projects provide adapters for this), Qwen 2 can produce high-dimensional embeddings that capture the semantic meaning of texts. These embeddings can power tasks like semantic search, clustering, or recommendation systems. For instance, you could embed a collection of documents using Qwen 2 and build an index to find which documents are most relevant to a query (vector search). Note that generating embeddings with a 72B model is computationally heavy; for efficiency, the smaller Qwen 2 models or a dedicated embedding model might be preferable. Alibaba has also released dedicated embedding models built on Qwen 2 (for example, gte-Qwen2-7B-instruct from its GTE series), but if needed, the generative Qwen 2 can double as an encoder for domain-specific use (with the advantage of being trained on very large data, hence capturing general semantics well).
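A common way to turn hidden states into one vector per text is masked mean pooling over the last layer, followed by cosine similarity for search. The NumPy sketch below assumes you already have last-layer hidden states and the attention mask (with Transformers, for example, from model(**inputs, output_hidden_states=True).hidden_states[-1]). The helper names are hypothetical, and last-token pooling is a frequently used alternative for decoder-only models.

```python
import numpy as np

def mean_pool(hidden_states: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token vectors per text, ignoring padding: (B, T, D) and (B, T) -> (B, D)."""
    mask = attention_mask[..., None].astype(hidden_states.dtype)  # (B, T, 1)
    summed = (hidden_states * mask).sum(axis=1)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)                 # avoid division by zero
    return summed / counts

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

# Usage: scores = cosine_similarity(query_vecs, doc_vecs); best = scores.argmax(axis=1)
```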

Enterprise Automation: In enterprise settings, Qwen 2 can be the cornerstone of automation workflows that involve understanding or producing language. This includes drafting automated reports, analyzing large volumes of internal documents, assisting in customer service automation, and integrating with business tools. For example, Qwen 2 could be used to summarize sales reports at the end of each week, generate meeting minutes from transcripts, or populate forms by extracting information from emails. When connected to other enterprise systems, a Qwen 2-powered agent could execute actions like querying databases or scheduling events based on natural language instructions (via tool usage plugins). Its alignment training (DPO) makes it proficient at following user instructions closely, which is valuable in structured business processes. Due to its open-source license and on-premise deployability, companies can use Qwen 2 to automate tasks without sending sensitive data to third-party APIs, maintaining data privacy. Many enterprises also fine-tune such models on their proprietary data to specialize them – Qwen 2’s open nature allows full fine-tuning or parameter-efficient tuning (e.g. LoRA) to imbue the model with domain-specific knowledge (financial, legal, medical, etc.) for deeper automation use cases.
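LoRA freezes a pretrained weight W and learns a low-rank update, so a layer computes x W^T + (alpha / r) x A^T B^T with small matrices A (r × d_in) and B (d_out × r). The NumPy class below is a minimal sketch of that forward pass, not the PEFT library's implementation (PEFT's LoraConfig is the usual way to apply LoRA to Qwen 2 in Transformers). B starts at zero, so the adapted layer initially behaves exactly like the base layer.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update scaled by alpha / r."""

    def __init__(self, W: np.ndarray, r: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = W.shape
        rng = np.random.default_rng(seed)
        self.W = W                                       # frozen pretrained weight
        self.A = rng.standard_normal((r, d_in)) * 0.01   # trainable, small random init
        self.B = np.zeros((d_out, r))                    # trainable, zero init: no change at start
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size
```

For a square 3584 × 3584 projection (Qwen2-7B's hidden size, assumed here) with r=8, the adapter adds 2 × 8 × 3584 = 57,344 trainable parameters next to about 12.8 million frozen ones, roughly 0.45%.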

Python Integration Examples

One of the most common ways to work with Qwen 2 is via the Hugging Face Transformers library. Alibaba has published the Qwen 2 model weights on Hugging Face Hub, making it straightforward for developers to download and use them in Python. In this section, we’ll walk through code examples for loading a Qwen 2 model, generating text, and adjusting inference parameters. (Ensure you have an appropriate GPU environment if you plan to run the larger models, or use a smaller model for testing on CPU.)

Note: Make sure to install transformers>=4.37.0 to get built-in support for Qwen 2 models. Also install accelerate if you intend to use device_map="auto" for automatic GPU placement or model parallelism.

1. Loading the Qwen 2 Model and Tokenizer – Use the AutoModelForCausalLM and AutoTokenizer classes to load a pre-trained Qwen 2 model from Hugging Face. For example, to load the 7B instruction-tuned model:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "Qwen/Qwen2-7B-Instruct"  # instruct (chat) variant of Qwen2-7B

tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map="auto",   # let accelerate place layers on the available GPU(s)
    torch_dtype="auto",  # use the dtype stored in the checkpoint config (bfloat16 for Qwen2)
)
model.eval()  # inference mode (disables dropout); from_pretrained already sets this
```

In the code above, we specify the instruct version of the model (recommended for text generation tasks, as it’s already aligned with human instructions). The tokenizer will handle encoding text to token IDs and decoding IDs back to text. We use device_map="auto" to automatically place the model on available GPU(s) (this requires the accelerate library) – for a smaller model like 7B, this will typically load entirely on a single GPU. If you are loading a very large model (e.g. 72B) on multiple GPUs or on CPU, you might need to adjust the device_map or use techniques like 8-bit quantization to fit it in memory. Once loaded, the model can be used like any AutoModelForCausalLM for text generation.

2. Generating Text from the Model – After loading, you can prompt the model and generate outputs using the generate method. Here’s a basic example of text generation with custom parameters:

```python
# Chat-style input: the Instruct model expects its chat template, not raw text
messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Write a brief introduction about yourself and your capabilities."},
]
text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
inputs = tokenizer(text, return_tensors="pt").to(model.device)

# Generate a continuation with sampling
output_ids = model.generate(
    **inputs,             # input_ids and attention_mask
    max_new_tokens=256,   # cap on newly generated tokens (the prompt is not counted)
    do_sample=True,       # sample instead of greedy decoding
    temperature=0.7,      # lower values give more focused output
    top_p=0.95,           # nucleus sampling over the top 95% probability mass
)

# Decode only the newly generated tokens (skip the echoed prompt)
new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
generated_text = tokenizer.decode(new_tokens, skip_special_tokens=True)
print(generated_text)
```

In this snippet, we ask Qwen2 to introduce itself. Because the Instruct model was fine-tuned on conversations, we first render the messages with tokenizer.apply_chat_template, which wraps them in Qwen's chat markers and appends the cue for the assistant's turn (add_generation_prompt=True). The model.generate() call then samples with temperature=0.7 to inject some randomness (lower values make output more deterministic) and top_p=0.95 for nucleus sampling (the model samples from the smallest set of tokens whose cumulative probability reaches 95%). Both settings only take effect with do_sample=True. max_new_tokens bounds the length of the reply independently of the prompt length. We don't pass eos_token_id explicitly, because the model's generation config should already list the end-of-turn tokens, so generation stops when the reply is complete. Finally, we decode only the tokens after the prompt, so generated_text contains just the model's response.

For instance, the output might look like: “Hello! I am Qwen2, a large language model developed by Alibaba Cloud. I have been trained on a vast range of text and code, allowing me to assist with tasks such as answering questions, generating content, coding, and more. I’m designed to follow instructions and can handle multiple languages and very long documents…” (The actual text may vary, but it should be along these lines given the prompt.)
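To see what temperature and top_p do, here is a simplified NumPy version of the two steps applied to a single vector of next-token logits. Hugging Face implements them as separate logits warpers inside generate(), applied only when do_sample=True; sample_next_token is a hypothetical helper for illustration, not that implementation.

```python
import numpy as np

def sample_next_token(logits, temperature=0.7, top_p=0.95, rng=None) -> int:
    """Temperature scaling, then nucleus (top-p) sampling over one logits vector."""
    rng = rng if rng is not None else np.random.default_rng()
    scaled = np.asarray(logits, dtype=float) / temperature   # <1 sharpens, >1 flattens
    probs = np.exp(scaled - scaled.max())
    probs /= probs.sum()
    order = np.argsort(-probs)                                # most likely tokens first
    cumulative = np.cumsum(probs[order])
    cutoff = int(np.searchsorted(cumulative, top_p)) + 1      # smallest prefix reaching top_p
    keep = order[:cutoff]
    return int(rng.choice(keep, p=probs[keep] / probs[keep].sum()))
```

With a sharply peaked distribution and top_p=0.9, only the top token survives the cut, so sampling becomes effectively greedy; with a flat distribution, many tokens stay in play.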

3. Interactive Inference Loop – For building a chatbot or interactive prompt loop, you can repeatedly feed user inputs to the model. Below is a simple REPL (read-eval-print loop) example illustrating a continuous conversation with Qwen 2:

```python
# Interactive loop with conversation memory: type a message, get a reply
print("Start chatting with Qwen2 (type 'exit' to quit):")
history = [{"role": "system", "content": "You are a helpful assistant."}]

while True:
    user_input = input("User: ")
    if user_input.strip().lower() in ("exit", "quit"):
        break
    history.append({"role": "user", "content": user_input})

    # Render the whole conversation with Qwen's chat template
    text = tokenizer.apply_chat_template(history, tokenize=False, add_generation_prompt=True)
    inputs = tokenizer(text, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=256, do_sample=True, temperature=0.8, top_p=0.9)

    # Keep only the newly generated tokens and store the reply for the next turn
    response = tokenizer.decode(output_ids[0][inputs["input_ids"].shape[-1]:], skip_special_tokens=True)
    history.append({"role": "assistant", "content": response})
    print(f"Assistant: {response}")
```

This loop keeps the conversation in a history list of role/content messages. On each turn it appends the user's message, renders the full history with tokenizer.apply_chat_template (Qwen 2 chat models use the ChatML format, where each turn is wrapped in <|im_start|>role ... <|im_end|> markers, so ad hoc tags like [USER] should be avoided), and generates a continuation. We then decode only the newly generated portion (excluding the prompt tokens) to get the assistant's answer and append it to the history, which gives the model multi-turn memory. Because the whole history is re-encoded every turn, long chats will eventually approach the context limit, so production code should trim or summarize older turns. The loop uses a moderate temperature and top_p to keep the dialogue creative but mostly relevant. Type “exit” to break out of the loop.

4. Pipeline API (Alternative): If you prefer a higher-level interface, you can use Hugging Face’s pipeline for text generation. For example:

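```python
from transformers import pipeline

pipe = pipeline("text-generation", model="Qwen/Qwen2-7B-Instruct", device_map="auto")
result = pipe(
    "Explain the Pythagorean theorem in simple terms.",
    max_new_tokens=100,
    do_sample=False,         # greedy decoding for a deterministic answer
    return_full_text=False,  # return only the continuation, not the prompt
)
print(result[0]["generated_text"])
```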

This will handle the tokenization and generation under the hood, returning the generated text. The pipeline is convenient, but using the model and tokenizer directly (as shown in steps 1–3) gives more control, especially for advanced use cases like multi-turn chats or custom stopping criteria.

Prompting & Inference Best Practices

To get the most out of Qwen 2, developers should follow some best practices for prompting and configuring the model’s inference. Here are several tips and guidelines:

Use Instruction-Tuned Models for Queries: Always start with the -Instruct (aligned/chat) version of Qwen 2 for any task that involves following a prompt or user instruction. The base models are unaligned and may produce incoherent or undesired outputs if used directly for generation. The instruct models have been fine-tuned to respond helpfully and safely to human inputs, so you’ll generally get far better results with them for Q&A, chat, or any instruction-driven task.

Provide Clear Instructions and Context: Qwen 2 will respond best when given a clear prompt. If you want a certain style or format, include that in the prompt (e.g. “List the steps to… in bullet points” or “You are an expert consultant, answer in a formal tone: …”). For multi-turn conversations, include a system role message at the start to establish the assistant’s identity or rules (for example: “System: You are a helpful financial advisor bot…”). This helps steer the model’s responses in the desired direction. Leverage the model’s long context by providing any relevant background information or data in the prompt, rather than expecting the model to know specifics not in its training data.

Adjust Decoding Parameters Thoughtfully: The temperature and top_p (and top_k) settings significantly affect Qwen 2’s output. For factual or deterministic tasks (like arithmetic or code generation), use a lower temperature (e.g. 0.2–0.5) or turn sampling off entirely: do_sample=False gives greedy decoding, and adding num_beams > 1 gives beam search. This keeps the model focused on the most probable completion. For creative tasks (story generation, brainstorming), a higher temperature (0.7–1.0) with nucleus sampling (top_p ~ 0.9–1.0) can yield more diverse and imaginative outputs. Cap the reply length with max_new_tokens to prevent run-on outputs. If the model tends to ramble or repeat, you can also apply a repetition penalty or stop sequences that end generation when certain tokens or phrases appear.
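A repetition penalty works by rescaling the logits of tokens that have already been generated. The sketch below reproduces the CTRL-style rule that Hugging Face's repetition_penalty argument to generate() applies (positive logits of seen tokens are divided by the penalty, negative ones multiplied by it) on a NumPy logits vector; apply_repetition_penalty is a hypothetical name.

```python
import numpy as np

def apply_repetition_penalty(logits: np.ndarray, generated_ids: list, penalty: float = 1.1) -> np.ndarray:
    """Make previously generated tokens less likely; returns a new logits array."""
    out = logits.copy()
    for tok in set(generated_ids):
        # Dividing a positive logit or multiplying a negative one both lower its probability
        out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out
```

Values slightly above 1.0 are usually enough; large penalties can push the model away from tokens it legitimately needs to repeat, such as variable names in code.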

Utilize Long Context Wisely: While Qwen 2 can handle very long inputs, feeding an extremely large prompt (say 100K tokens) will be slow and memory-intensive. Use the long context capability where it truly adds value, e.g., analyzing a long legal document or maintaining a lengthy dialog history. When working with long contexts, chunking the input and using retrieval (as in RAG) might still be more efficient. If you do provide tens of thousands of tokens to the model, make sure your GPU memory is sufficient for both the weights and the KV cache, and consider optimized inference engines (like vLLM or Hugging Face’s Text Generation Inference server) that are designed for high-throughput long-context serving. The Qwen team reports that Qwen 2 with DCA and YaRN performs well up to its supported limit (64K or 128K, depending on the model), but always test on your specific input sizes to gauge the speed and coherence of outputs.
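For long chats, a simple policy is to keep the system message and drop the oldest turns once a budget is exceeded. The sketch below is a minimal version of that policy, assuming characters as a stand-in for tokens (count real tokens with the tokenizer in practice); trim_history is a hypothetical helper.

```python
def trim_history(messages: list, max_chars: int = 24_000) -> list:
    """Keep the first system message plus the newest turns that fit in max_chars."""
    system = [m for m in messages if m["role"] == "system"][:1]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_chars - sum(len(m["content"]) for m in system)
    kept = []
    for msg in reversed(turns):        # walk from the newest turn backwards
        if len(msg["content"]) > budget:
            break                      # older turns no longer fit
        kept.append(msg)
        budget -= len(msg["content"])
    return system + kept[::-1]         # restore chronological order
```

Dropped turns can be replaced by a short model-written summary if the conversation depends on early context.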

Leverage Few-Shot Prompting if Needed: Although Qwen 2 is instruction-tuned, providing a few examples in the prompt can further improve performance on certain structured tasks. For instance, if you want the model to output data in a specific JSON format, you might show a short example of an input and the desired JSON output in the prompt. The model can then follow that pattern more reliably. With Qwen 2’s large context, you have plenty of room to include multiple examples. Just be mindful that very long prompts with many examples leave fewer tokens for the model’s answer, because the prompt and the generated reply share the same context window.
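With a chat model, few-shot examples are often supplied as earlier user/assistant turns rather than pasted into one long message. The sketch below builds such a message list; few_shot_messages and the example data are hypothetical, and the result would be rendered with tokenizer.apply_chat_template before generation.

```python
def few_shot_messages(examples: list, query: str,
                      system: str = "Extract the customer and order number. Reply with JSON only.") -> list:
    """Encode (input, desired output) pairs as earlier user/assistant turns, then add the query."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": query})
    return messages

examples = [
    ("Order #12 placed by Ana on 3 May.", '{"customer": "Ana", "order": 12}'),
    ("Bo ordered item #40 yesterday.", '{"customer": "Bo", "order": 40}'),
]
messages = few_shot_messages(examples, "Chen placed order #7 this morning.")
# Render with: tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
```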

Monitor and Post-Process Outputs: As with any LLM, Qwen 2 may occasionally produce incorrect or nonsensical answers (hallucinations) or content that needs filtering. It has undergone alignment training to reduce harmful or biased outputs, but it’s not infallible. Developers should implement appropriate checks in critical applications. For instance, if using Qwen 2 to generate code, it’s wise to run tests or static analysis on the output. If using it for customer-facing chatbots, include a moderation layer to catch any disallowed content. Qwen 2 will typically follow instructions not to produce certain content (and the open models have some safety fine-tuning), but being an open model, it may not be as tightly restricted as some closed APIs. Always review outputs especially for applications in sensitive domains.
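As a first gate for generated code, a cheap static check can catch syntax errors and flag calls that deserve a human look before anything runs. The sketch below uses Python's ast module; check_generated_python and the REVIEW_CALLS list are hypothetical and illustrative, not a security policy, and real tests in a sandbox should follow.

```python
import ast

REVIEW_CALLS = {"eval", "exec"}  # illustrative list, not a complete security policy

def check_generated_python(source: str) -> list:
    """Cheap static checks on model-written Python before running or merging it."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]
    problems = []
    for node in ast.walk(tree):
        # Flag direct calls such as eval(...) for review
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name) and node.func.id in REVIEW_CALLS:
            problems.append(f"line {node.lineno}: call to {node.func.id}()")
    return problems
```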

Experiment with Specialized Qwen Variants: Alibaba’s Qwen family includes models tuned for specific domains, such as Qwen2-Math for complex mathematical reasoning and the Qwen2.5-Coder series for programming help. If your use case is heavily focused on one of these areas, consider using these specialized models, as they may yield better accuracy. For example, Qwen2-Math might handle step-by-step proofs or algebra problems better than the general model. Similarly, Qwen 2’s vision and audio models require different inputs (e.g., providing an image or audio features to the model along with a question); refer to their documentation for how to format inputs. In summary, pick the model and prompting style best suited to your task, and don’t hesitate to try different approaches (the flexibility of prompting is one of the strengths of using LLMs like Qwen 2).

Deployment Options (Local, Cloud, and API)

Developers have multiple options to deploy and run Qwen 2 depending on their resources and requirements:

Local GPU Deployment: You can run Qwen 2 on your own hardware if you have sufficient GPU resources. The smaller models (0.5B, 1.5B) are easily run on CPU or modest GPUs (they require on the order of 1–3 GB of VRAM, so even a laptop GPU can handle them). The 7B model needs roughly 15 GB of GPU memory for its weights in 16-bit precision, so a 24 GB card is the comfortable choice; with 8-bit quantization the weights take about 8 GB, making it runnable on consumer gaming GPUs. The largest 72B model is resource-intensive: its 16-bit weights alone take about 145 GB, so it needs several data-center GPUs (for example 2× A100 80GB, or 4× A100 40GB with little headroom for the KV cache). However, techniques like quantization (int8/int4) and CPU offloading can help; for instance, a 4-bit quantized Qwen2-72B (official GPTQ and AWQ builds, as well as community quantizations, are on Hugging Face) may fit on a single high-end GPU, albeit with slower inference. Tools like Hugging Face Accelerate and DeepSpeed can partition the model across multiple GPUs automatically (device_map="auto" as shown earlier). There are also user-friendly interfaces such as Oobabooga’s Text Generation Web UI and other web UIs that support Qwen models, allowing you to deploy a local web service for Qwen 2 with a chat interface. Running locally gives you maximum control and privacy (no data leaves your environment), which is a big advantage for enterprise use. Just plan for the necessary compute: start with 7B or 1.5B if you don’t have powerful GPUs, then scale up as needed.
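These memory figures follow from simple arithmetic: parameters times bytes per parameter. The sketch below estimates weight memory only, assuming approximate parameter counts of 1.5B, 7.6B and 72.7B; the KV cache, activations and quantization overhead come on top, so treat the results as lower bounds. weight_memory_gb is a hypothetical helper.

```python
def weight_memory_gb(num_params_billion: float, bits_per_param: int) -> float:
    """Approximate weight memory in GB: billions of parameters x bytes per parameter."""
    return num_params_billion * bits_per_param / 8

# Approximate parameter counts (assumed): 1.5B, 7.6B and 72.7B
for name, params in [("Qwen2-1.5B", 1.5), ("Qwen2-7B", 7.6), ("Qwen2-72B", 72.7)]:
    sizes = ", ".join(f"{bits}-bit ~{weight_memory_gb(params, bits):.1f} GB" for bits in (16, 8, 4))
    print(f"{name}: {sizes}")
```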

Cloud Deployment (Alibaba Cloud Model Studio): Alibaba offers Qwen 2 models through its Model Studio service, which allows you to host and consume the model via cloud endpoints. With Model Studio, you can avoid managing the infrastructure; instead, you can call Qwen 2 via an API (similar to how you would call OpenAI’s API). To use this, you typically need to create an Alibaba Cloud account, enable Model Studio, and obtain an API key. Model Studio provides a selection of Qwen models (often branded as Qwen-7B, Qwen-Max, etc. in the console) that you can deploy or use on demand. Notably, Model Studio supports an OpenAI-compatible API for Qwen, meaning you can use OpenAI client libraries or REST calls with an endpoint URL and your API key to get chat completions. For example, using the official OpenAI Python SDK, you can set the base URL to Alibaba’s endpoint and set model="qwen-plus" (or another Qwen model name) in the openai.ChatCompletion.create() call. The request and response format (with roles “system”, “user”, “assistant”) is the same as OpenAI’s ChatGPT API, which makes integration quite straightforward. Below is a conceptual example of calling Qwen 2 via the API:

```python
import os

from openai import OpenAI  # OpenAI Python SDK v1.x

client = OpenAI(
    api_key=os.environ["DASHSCOPE_API_KEY"],  # your Alibaba Cloud Model Studio API key
    base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",  # endpoint for Singapore region (intl)
)

response = client.chat.completions.create(
    model="qwen-plus",  # example model name on Model Studio (instruction-tuned Qwen model)
    messages=[
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": "Write a Python function to check if a number is prime."},
    ],
    temperature=0.7,
    top_p=0.9,
)

answer = response.choices[0].message.content
print(answer)
```

(In this snippet, "qwen-plus" is an example model name taken from Alibaba's docs. It refers to a Qwen model hosted by the service and may not be a Qwen 2 checkpoint specifically. Check Model Studio's documentation for the exact model identifiers, because they change with new releases such as Qwen2.5. The example uses the v1.x OpenAI SDK, which removed the older openai.ChatCompletion interface.)

The cloud deployment is ideal if you need to scale on demand or integrate Qwen into a web application without hosting the model yourself. It also provides access to the latest Qwen model variants (Alibaba frequently updates their cloud models, e.g. Qwen2.5 or Qwen3 might be available). Keep in mind that usage of the cloud API may incur costs, and you should also ensure compliance with any usage policies (especially if processing sensitive data, since it’s running on Alibaba’s servers).

Hybrid Approaches and Other Platforms: Apart from Alibaba Cloud, you can deploy Qwen 2 on other cloud providers or machine learning platforms. For instance, you could set up a GPU VM on AWS, Azure, or Google Cloud and run the model there (like a local deployment, but on cloud hardware you control). Another option is Hugging Face Inference Endpoints or Spaces, which host models as APIs or demo apps. These are convenient for lightweight use or for sharing a prototype. There are also tools such as Ollama for running and managing local LLMs with ease, and ModelScope (an Alibaba-supported model hub), which provides Docker images and a CLI for Qwen models. Advanced users can integrate Qwen 2 into LangChain or LlamaIndex pipelines for building agents and retrieval-augmented systems. Both frameworks have connectors for custom LLMs loaded via Hugging Face. Given Qwen 2's popularity, most major open-source inference tools support it. vLLM (originally developed at UC Berkeley) serves Qwen 2 with high throughput thanks to efficient KV-cache management, which pays off especially on long-context requests. Hugging Face's Text Generation Inference (TGI) can also deploy Qwen, with features such as continuous batching of concurrent requests and token streaming. In summary, Qwen 2 is flexible: you can run it on-premises for full control, or consume it as a managed service for convenience. Choose the deployment path that fits your team's expertise, budget, and latency requirements.
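vLLM exposes an OpenAI-compatible /v1/chat/completions route, so the same message format used with Model Studio also works against a self-hosted server. The sketch below uses only the Python standard library. It assumes a server has been started locally, for example with `vllm serve Qwen/Qwen2-7B-Instruct`, which listens on port 8000 by default. The helper names build_chat_request and chat are illustrative.

```python
import json
import urllib.request


def build_chat_request(
    messages,
    model="Qwen/Qwen2-7B-Instruct",
    base_url="http://localhost:8000/v1",
    max_tokens=512,
    temperature=0.7,
):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # must match the name the server was started with
        "messages": messages,
        "max_tokens": max_tokens,  # cap on generated tokens
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(messages, **kwargs):
    """Send the request to the local server and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(messages, **kwargs), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, chat([{"role": "user", "content": "Summarize Qwen 2 in one sentence."}]) returns the reply text. Separating request construction from sending keeps the payload easy to inspect or log.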

Limitations & Considerations

While Qwen 2 is a powerful model, developers should be aware of its limitations and design considerations:

Resource Intensity: Like all large LLMs, Qwen 2 (especially the 72B model) demands significant computational resources. Inference gets slower and more memory-hungry as model size and context length grow. For example, generating long outputs with the 72B model at a 128K context can be extremely slow without specialized infrastructure. If low latency is crucial, smaller Qwen 2 models or distilled models may be preferable. Also, the MoE model (57B-A14B) only pays off with an inference stack that handles expert routing efficiently. transformers can load it, but a serving engine with optimized MoE kernels (vLLM, for example, supports Qwen2-MoE) will usually run it much faster, so check MoE support before choosing your stack.
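Long contexts get expensive partly because of the key/value cache, which grows linearly with sequence length on top of the fixed weight memory. The sketch below estimates its size. It assumes the per-model shapes published in the Qwen2-7B and Qwen2-72B config.json files and a 16-bit cache. These shapes are the layer count, the number of key/value heads under grouped-query attention, and a head dimension of 128. Verify them against the checkpoint you actually use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionShape:
    num_layers: int
    num_kv_heads: int  # grouped-query attention: fewer KV heads than query heads
    head_dim: int


# Assumed values from the published Qwen2 config.json files (check your checkpoint).
QWEN2_7B = AttentionShape(num_layers=28, num_kv_heads=4, head_dim=128)
QWEN2_72B = AttentionShape(num_layers=80, num_kv_heads=8, head_dim=128)


def kv_cache_bytes(shape: AttentionShape, seq_len: int, batch_size: int = 1,
                   bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for every layer and position."""
    # Factor 2 = one key vector plus one value vector per KV head.
    per_token = 2 * shape.num_layers * shape.num_kv_heads * shape.head_dim * bytes_per_value
    return per_token * seq_len * batch_size


for name, shape in [("Qwen2-7B", QWEN2_7B), ("Qwen2-72B", QWEN2_72B)]:
    gb = kv_cache_bytes(shape, seq_len=131_072) / 1e9
    print(f"{name}: ~{gb:.1f} GB of KV cache for one 128K-token sequence")
```

Under these assumptions, a single 128K-token sequence needs about 7.5 GB of cache for the 7B model and about 42.9 GB for the 72B model. That comes on top of roughly 145 GB of bf16 weights for the 72B model, which is why long-context serving relies on multi-GPU setups and on engines that manage the cache efficiently.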

Accuracy and Hallucinations: Although Qwen 2 achieves high benchmark scores, it is not 100% accurate on all facts or math problems. It can still hallucinate – meaning it may produce confident-sounding statements that are false. This is a common issue with LLMs. Qwen 2’s increased training on factual data and the DPO alignment help mitigate this, but testing and validation are necessary for critical applications. Avoid blindly trusting the model for factual queries without verification (for instance, for a QA system, consider integrating a retrieval step to ground answers in real data).
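The following is a minimal sketch of the retrieval step suggested above. It picks the passages most related to the question and puts them in the prompt, together with an instruction to answer only from them. The keyword-overlap scoring is a deliberately naive stand-in; a real system would use embeddings or a search index. build_grounded_messages is a hypothetical helper and is not part of any Qwen or Hugging Face API.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercased word set used for naive lexical matching."""
    return set(re.findall(r"\w+", text.lower()))


def build_grounded_messages(question: str, documents: list[str], k: int = 2) -> list[dict]:
    """Return chat messages that ground the model's answer in the top-k documents."""
    q = _tokens(question)
    # Rank documents by how many question words they share (ties keep input order).
    ranked = sorted(documents, key=lambda d: len(q & _tokens(d)), reverse=True)
    context = "\n\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(ranked[:k]))
    system = (
        "Answer using only the numbered context passages. "
        "If the answer is not in them, say you don't know. Cite passage numbers."
    )
    user = f"Context:\n{context}\n\nQuestion: {question}"
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]
```

You can pass the returned list as the messages argument of a chat API call or to tokenizer.apply_chat_template. Asking the model to cite passage numbers makes unsupported claims easier to spot during validation.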

Output Length Management: Generation stops only when the model emits an end-of-sequence token (for the instruct models, the <|im_end|> end-of-turn token) or reaches a length limit. Base models in particular may keep going, and even instruct models can produce very long answers unless asked to be concise. For open-ended prompts, cap the length with the max_new_tokens parameter. max_length also works, but it counts the prompt tokens too. If very long responses are desired (e.g., generating a full article), you may need to set this high, but be cautious: the model can drift off-topic if it generates too much without guidance. In interactive settings, it's often useful to limit responses to a few paragraphs to maintain a back-and-forth dialog.
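In transformers, the length cap is passed to generate(). The sketch below follows the chat-template pattern from the Qwen 2 model cards. It formats the conversation, generates with max_new_tokens, and then decodes only the newly generated tokens. It assumes model and tokenizer were loaded with AutoModelForCausalLM and AutoTokenizer. generate_reply is an illustrative helper name.

```python
def generate_reply(model, tokenizer, messages, max_new_tokens=256):
    """Generate one assistant turn, capped at max_new_tokens new tokens."""
    # Render the conversation with the model's chat template and open an assistant turn.
    text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Stops at an end-of-sequence token from the generation config or after max_new_tokens.
    output_ids = model.generate(**model_inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the new reply is decoded.
    new_tokens = [out[len(inp):] for inp, out in zip(model_inputs.input_ids, output_ids)]
    return tokenizer.batch_decode(new_tokens, skip_special_tokens=True)[0]
```

Slicing off the prompt matters because generate() returns the prompt and the continuation together. Without it, the decoded text would repeat the whole conversation.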

Biases and Ethical Considerations: Qwen 2 was trained on a vast corpus from the internet, which likely includes biases and stereotypes present in that data. As a result, the model may occasionally produce biased or culturally insensitive output. Alibaba's alignment training aims to reduce harmful biases, but no model is perfect in this regard. Developers should put content filters or review processes in place if Qwen 2 is used in user-facing applications. This is especially important in domains like healthcare or law, where the model might produce something that sounds authoritative but reflects a bias or is legally unsound. Use Qwen 2 as an assistant, not an infallible authority.

License and Usage Terms: Most Qwen 2 checkpoints (0.5B, 1.5B, 7B and 57B-A14B) are released under Apache 2.0, which means you can use them freely in commercial products. The 72B base and instruct models remain under the Tongyi Qianwen license, which adds its own conditions, including for very large-scale commercial deployments. Read the license on the model card of the exact checkpoint you ship. Permissive licensing is a major advantage over some other LLMs with restrictive terms. Note, however, that the license covers the model weights and code, not any data you feed into the model. You are responsible for complying with data privacy laws and for not using the model for prohibited purposes. If you fine-tune Qwen 2 on your own data and redistribute the result, credit the original model and abide by its license. Apache 2.0, for instance, requires including a copy of the license and retaining attribution notices. In summary, Qwen 2 is largely permissive from a licensing perspective, but always check for updates or additional usage guidelines from Alibaba (for instance, the model card may list ethical AI considerations to follow).

Comparability and Versioning: Keep in mind that Qwen 2 is one generation of the Qwen family. Alibaba has since released Qwen 2.5 and Qwen 3, which bring further improvements. If absolute state-of-the-art performance is needed, you might look at those newer versions. That said, each version may have different model sizes or requirements. The Qwen2.5 line, for example, adds sizes such as 3B, 14B and 32B, plus an “Omni” multimodal model. When developing, make sure you're using the intended version, because community hubs often host many similarly named models (e.g., Qwen2 vs Qwen2.5). Stick to the official repositories (the Qwen organization on Hugging Face) to avoid confusion. And be prepared for newer checkpoints. Fortunately, they usually keep similar APIs, so upgrading tends to be straightforward (often just swapping the model checkpoint name).
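Checkpoint names share prefixes, so a naive check such as model_id.startswith("Qwen/Qwen2") also accepts Qwen2.5 checkpoints. The hypothetical guard below parses the generation explicitly before a model is loaded. It assumes IDs follow the pattern Qwen/QwenX[.Y]-<rest>. That pattern matches the official Hugging Face names shown in this guide, but it is not a formal specification.

```python
import re

# Assumed shape of official IDs, e.g. "Qwen/Qwen2-7B-Instruct" or "Qwen/Qwen2.5-7B-Instruct".
_QWEN_ID = re.compile(r"^Qwen/Qwen(?P<gen>\d+(?:\.\d+)?)-(?P<rest>.+)$")


def qwen_generation(model_id: str) -> str:
    """Return the Qwen generation ("2", "2.5", ...) encoded in a model ID."""
    match = _QWEN_ID.match(model_id)
    if match is None:
        raise ValueError(f"unrecognized Qwen model ID: {model_id!r}")
    return match.group("gen")


def require_generation(model_id: str, expected: str) -> None:
    """Fail fast if a config points at a different Qwen generation than intended."""
    found = qwen_generation(model_id)
    if found != expected:
        raise ValueError(f"{model_id!r} is Qwen{found}, expected Qwen{expected}")
```

Calling require_generation(config_model_id, "2") at startup turns an accidental swap to a Qwen2.5 checkpoint into an immediate error rather than a subtle behavior change.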

Fine-Tuning Complexity: If you plan to fine-tune Qwen 2 on custom data, be aware that full fine-tuning of a model like the 72B is a non-trivial task requiring expert knowledge and significant GPU resources. However, parameter-efficient techniques can make it feasible to fine-tune large models on a single GPU or a small cluster. LoRA (Low-Rank Adaptation) trains small adapter matrices while the base weights stay frozen, and QLoRA applies LoRA on top of a 4-bit quantized base model. There are community tutorials on fine-tuning Qwen models with these methods, and Alibaba's docs mention fine-tuning tools such as Axolotl. If your use case is instruction-driven, start from an instruction-tuned model to preserve its alignment. And always validate the fine-tuned model rigorously, as it can pick up undesirable artifacts from small datasets (such as verbatim memorization or tone changes).
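Counting trainable parameters shows why LoRA makes fine-tuning tractable. A rank-r adapter on a linear layer of shape d_in to d_out adds two low-rank factors (d_in x r and r x d_out), or r*(d_in + d_out) weights. The sketch below assumes the Qwen2-7B projection shapes from its published config: hidden size 3584, 4 key/value heads of dimension 128, MLP width 18944, and 28 layers. It also assumes adapters on all seven attention and MLP projections, which is a common choice but not a mandatory one.

```python
# Assumed Qwen2-7B shapes (from its config.json); adjust for other sizes.
HIDDEN, KV_DIM, MLP, LAYERS = 3584, 4 * 128, 18944, 28

# (d_in, d_out) for each linear layer in one decoder block, keyed by its module name.
PROJECTIONS = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, MLP),
    "up_proj": (HIDDEN, MLP),
    "down_proj": (MLP, HIDDEN),
}


def lora_trainable_params(rank: int, targets=tuple(PROJECTIONS)) -> int:
    """Total LoRA weights: rank * (d_in + d_out) per targeted layer, summed over all blocks."""
    per_layer = sum(rank * (PROJECTIONS[name][0] + PROJECTIONS[name][1]) for name in targets)
    return per_layer * LAYERS


n = lora_trainable_params(rank=16)
print(f"rank 16: {n:,} trainable params (~{n / 7.62e9:.2%} of ~7.62B)")
```

Under these assumptions, rank 16 yields about 40 million trainable weights, roughly half a percent of the model. That is what lets LoRA, and QLoRA with a 4-bit frozen base, fit on far smaller hardware than full fine-tuning. The module names above are the ones typically passed as target_modules to a PEFT LoraConfig for Qwen 2.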

By keeping these considerations in mind, you can mitigate potential issues and ensure that Qwen 2 serves your application reliably and responsibly.

FAQs for Developers

What makes Qwen 2 different from Qwen 1 or Qwen 1.5?

What are the available Qwen 2 model variants and sizes?

Qwen organization (Qwen/Qwen2-7B-Instruct). The instruction-tuned models are usually the ones with “-Instruct” in their name, which are recommended for most applications.

What is the maximum context length of Qwen 2?

max_position_embeddings is set appropriately. Always verify memory usage when going for very large contexts.

Can Qwen 2 be used for languages other than English?

How does the instruction tuning affect Qwen 2’s usage?

What are the hardware requirements to run Qwen 2?

The 0.5B and 1.5B models are very small and can run on a CPU or edge devices (roughly 1–3 GB of memory in 16-bit precision, less when quantized). They are suitable for mobile or real-time IoT scenarios.

The 7B model has around 7.6 billion parameters (about 6.5B excluding embeddings). In 16-bit precision that's roughly 15 GB for the weights alone. A single NVIDIA RTX 3090 or 4090 (24 GB VRAM) handles it comfortably. A 16 GB GPU is very tight and generally needs offloading or quantization. With 8-bit quantization, you can bring it down to ~7–8 GB and possibly run it on a 12 GB GPU.

The 57B (MoE) model must hold all 57B parameters in memory, which is huge (~115 GB in fp16). Only about 14B parameters are active per token, which reduces compute per token. However, different tokens are routed to different experts, so all experts need to stay loaded or be offloaded at a steep latency cost. In practice, running this model requires a multi-GPU setup (e.g., 8×32GB GPUs) or CPU offloading with lots of system RAM. The MoE model is more of a research model; many users might skip it in favor of the dense 72B unless they have a specific reason to exploit MoE.

The 72B model at bf16 is around 145 GB of weights, more than common single GPUs such as the 80 GB A100 or H100 can hold. You'd typically distribute it across 4 or 8 GPUs. For instance, 4×A100 80GB holds it with room for the KV cache, while 4×A100 40GB (160 GB in total) leaves almost no headroom. With 4-bit quantization (GPTQ int4), the 72B shrinks to around 40 GB, which some intrepid users have run on a dual-GPU machine. It's still quite heavy. Model parallelism (via Accelerate or DeepSpeed ZeRO-Inference) is key for 72B.

In summary, 7B is the largest model that's easy to run on a single GPU, while 72B requires server-grade resources or cloud instances. Also consider throughput: larger models not only need more memory but are also slower per token. You can mitigate this by batching requests or by using optimized attention kernels. FlashAttention-2 is one example, and the transformers Qwen2 implementation can use it via attn_implementation="flash_attention_2". A dedicated serving engine such as vLLM also helps.
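The memory figures in this list follow a simple rule: weight memory is roughly the parameter count times the bytes per parameter. The sketch below reproduces them. The parameter counts are approximate totals for the released checkpoints and should be treated as assumptions. The estimate deliberately excludes the KV cache, activations and framework overhead, all of which add to the real footprint.

```python
# Approximate total parameter counts in billions (assumed; check each model card).
QWEN2_PARAMS_B = {
    "Qwen2-0.5B": 0.49,
    "Qwen2-1.5B": 1.54,
    "Qwen2-7B": 7.62,
    "Qwen2-57B-A14B": 57.41,
    "Qwen2-72B": 72.71,
}


def weight_gb(params_billion: float, bits_per_param: int) -> float:
    """Weights-only memory in decimal GB: 1e9 params * (bits / 8) bytes = params_billion * bits / 8 GB."""
    return params_billion * bits_per_param / 8


for name, params in QWEN2_PARAMS_B.items():
    row = ", ".join(f"{bits}-bit: {weight_gb(params, bits):6.1f} GB" for bits in (16, 8, 4))
    print(f"{name:15} {row}")
```

For example, Qwen2-72B at 4 bits comes to about 36 GB of quantized weights. That is consistent with the ~40 GB figure above once you add layers typically left unquantized (such as the embeddings) and runtime buffers.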

Is Qwen 2 available through an API like OpenAI’s models?

model name and a list of messages (system/user/assistant). This returns a completion in the same shape as OpenAI's ChatCompletion response. The documentation lists model names like “qwen-plus” or specific version identifiers to use in the API call. There may also be a simpler online playground in Model Studio where you can test prompts directly in the browser. If you prefer not to host the model yourself, this API is a convenient way to use Qwen 2 in applications. Just keep in mind that you depend on Alibaba's service availability and pricing. Alternatively, community APIs (such as Hugging Face's Inference API or other third-party hosted instances) may exist, but the official options are Alibaba Cloud's API or hosting the model yourself as discussed.