Qwen3-Coder is a state-of-the-art large language model (LLM) specialized for coding tasks, developed by Alibaba Cloud’s Qwen team. It belongs to the Qwen3 series of models and is tailored for advanced code generation, intelligent code completion, automated debugging, and even “agentic” coding tasks where the AI can perform actions like running tools or modifying files.

The flagship model, Qwen3-Coder-480B-A35B-Instruct, uses a Mixture-of-Experts architecture with 480 billion total parameters, of which about 35 billion are active for any given token. Despite this scale, it is designed to integrate into developer workflows and IDEs, offering assistance across many programming languages and scenarios.

In practical terms, Qwen3-Coder can serve as a developer’s AI pair-programmer – able to write new code from scratch given a prompt, suggest completions for partially written code, find and fix bugs with explanations, and even refactor or optimize existing code upon request.

Unlike generic chat models, Qwen3-Coder has been heavily trained on programming content (about 70% of a 7.5 trillion token pretraining corpus is code) and fine-tuned with techniques like execution-based feedback. This results in a model that excels at writing correct, executable code and reasoning about complex software tasks. Whether you need to generate a Python script, debug a tricky C++ error, or perform multi-step coding tasks with tool use, Qwen3-Coder is equipped to help.

In the sections below, we’ll explore its architecture, capabilities, supported languages, integration examples, and best practices for getting the most out of this advanced coding AI.

Architecture Overview

At a high level, Qwen3-Coder’s architecture and training regimen are designed to push the limits of coding AI performance. Here are some key points about its design and training:

- Mixture-of-Experts (MoE) at Large Scale: The model uses a MoE transformer architecture with 480B parameters in total, of which about 35B are active in any given forward pass. Internally it has 160 expert subnetworks, with 8 experts activated per token. The 35B active figure covers those 8 selected experts plus the parameters that every token shares, such as the attention layers, so it is not simply 8 times the size of one expert. This design provides very high capacity without a proportional increase in per-token compute, which helps with diverse coding tasks and knowledge areas. A gating (router) network sends each token to the most relevant experts, which is where the efficiency comes from.

- Extended Context Window: Qwen3-Coder supports an extended context length of up to 256K tokens natively, far beyond the typical 4K or 8K tokens of earlier models. In fact, with specialized “YaRN” extrapolation techniques, it can handle up to 1 million tokens of context. This means it can ingest entire codebases, large files, or lengthy conversations without forgetting earlier details. For developers, the long context enables working with repository-scale inputs – you could provide multiple source files or a huge code diff, and Qwen3-Coder can reason about all of it in one go. It’s optimized for repository-scale understanding, making it ideal for tasks like analyzing a whole project’s architecture or reviewing a massive pull request.

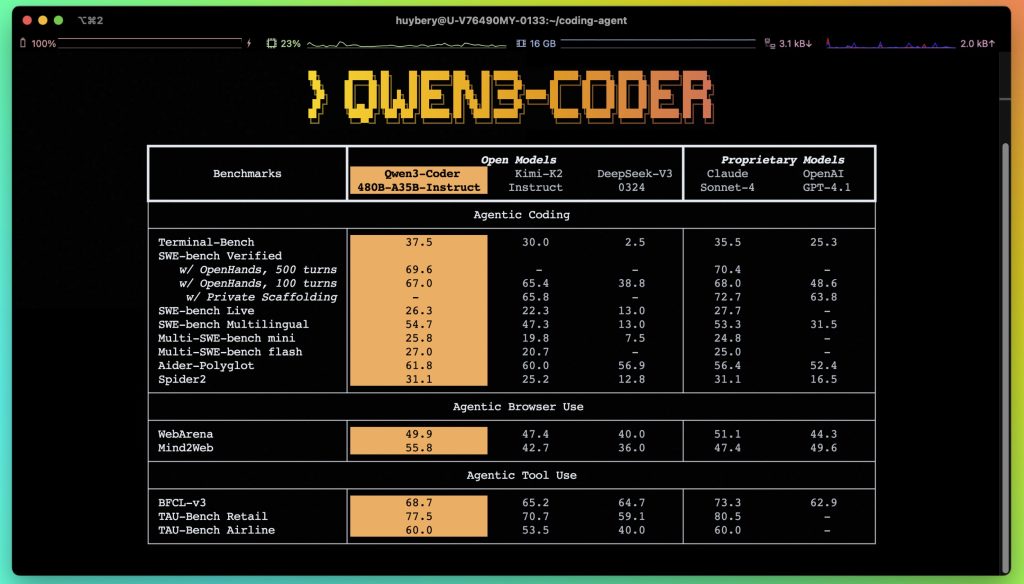

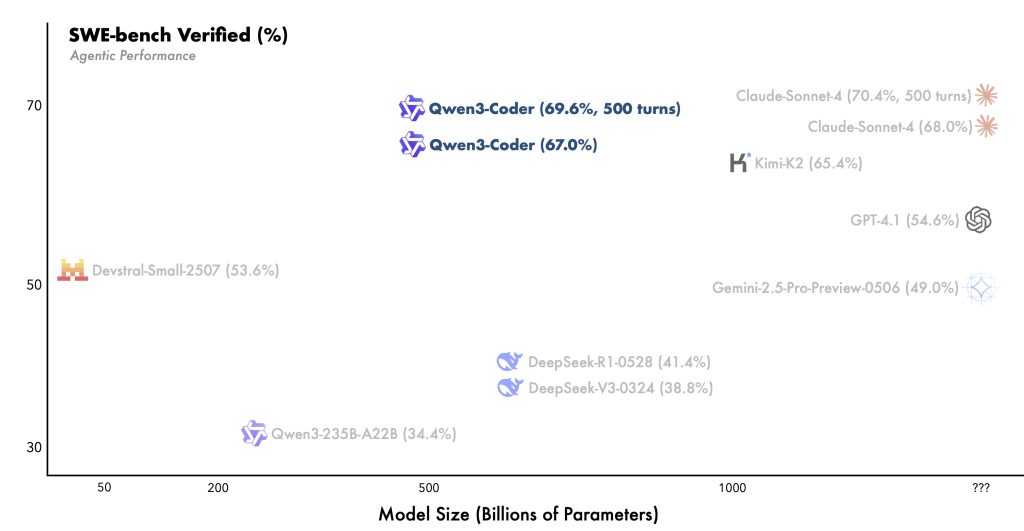

- Training with Code-Centric Reinforcement Learning: Beyond standard next-token prediction pretraining, Qwen3-Coder underwent intensive post-training via reinforcement learning on coding tasks. Notably, the team applied an “execution-driven” RL approach: the model was challenged with writing code to solve problems and then running the code against test cases, using the results as feedback. By scaling up this Code RL on a broad set of programming challenges (hard-to-solve but easy-to-verify problems), Qwen3-Coder learned to produce code that not only compiles but executes correctly for the given requirements. This significantly boosted its success rate in code generation and debugging tasks. Furthermore, long-horizon RL was used to train agentic behavior: the model practiced multi-turn interactions involving planning, tool use, and decision-making to solve real-world software engineering tasks. For example, in a simulated coding environment it learned to plan a sequence of steps – like reading an error message, deciding to call an API or tool, then adjusting the code – to ultimately satisfy the task objectives. This training yielded strong performance on benchmarks like SWE-Bench (a software engineering challenge suite), setting new state-of-the-art among open models for multi-step coding tasks.

- Instruction Tuning and Function Calling Format: Qwen3-Coder’s -Instruct variants are fine-tuned to follow human instructions and conversational prompts, making them suitable for interactive use (similar to ChatGPT style). Special care was taken to enable function/tool calling capabilities – the model can output a structured function call when a tool is needed, instead of just plain text. A custom function call format was designed for consistency, and a specialized tool-parser is provided to interpret these calls. This architecture choice allows Qwen3-Coder to act as an “agent” that not only generates code, but can also invoke external functions (more on this in the Agentic Workflows section).

Despite these advanced features, Qwen3-Coder retains the general natural language understanding and reasoning abilities of its base model. Its training did not sacrifice other domains; it deliberately “retains strengths in math and general capabilities” inherited from the base Qwen3 model. In summary, the architecture combines large knowledge capacity, very long context handling, and specialized training for coding and tool use, which makes Qwen3-Coder a strong backend for developer assistants and code automation.
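To make the Mixture-of-Experts routing described above more concrete, here is a minimal sketch of top-k softmax gating, a common MoE routing scheme. It only illustrates the "8 of 160 experts per token" idea; the router actually used in Qwen3-Coder may differ in its details, and the router scores below are random stand-ins rather than real model outputs.

```python
import math
import random

NUM_EXPERTS = 160  # total experts, per the description above
TOP_K = 8          # experts activated per token


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k experts for one token and renormalize their weights.

    Returns (expert_index, weight) pairs, highest weight first, whose
    weights sum to 1.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    kept = sum(probs[i] for i in chosen)
    return [(i, probs[i] / kept) for i in chosen]


# Hypothetical router scores for a single token (random stand-ins).
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
routing = route_token(logits)
print(f"{len(routing)} of {NUM_EXPERTS} experts active for this token")
```

Only the selected experts run for that token, so per-token compute scales with the 8 chosen experts rather than with all 160.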

Supported Languages and Capabilities

One of Qwen3-Coder’s standout qualities is its broad multilingual programming support. According to the developers, it can understand and generate code in 358 different programming languages and file formats. This includes all the popular languages you’d expect – Python, JavaScript, Java, C, C++, C#, Go, Ruby, PHP, TypeScript, SQL, Swift, and so on – as well as many domain-specific or esoteric languages.

For instance, Qwen3-Coder’s knowledge spans everything from web and scripting languages (HTML/CSS, Shell, Batch files), to low-level or hardware description languages (Assembly, Verilog/SystemVerilog), mathematical and data science languages (R, MATLAB), and even quirky languages used in programming puzzles or academia (yes, it even knows Brainfuck and LOLCODE as listed in its support!). This incredibly broad training means you can throw practically any source code at it and get useful responses – it can explain obscure code or help port code from one language to another.

In addition to pure programming languages, Qwen3-Coder is aware of coding frameworks and libraries, as these are often mentioned in code. For example, it can generate a snippet using Python’s TensorFlow or Java’s Spring framework if asked, or complete a function using a C++ STL container. Its understanding of APIs and library usage comes from the vast pretraining on open-source repositories.

Beyond language support, let’s highlight some core capabilities Qwen3-Coder offers developers:

- Understanding Code Semantics: The model doesn’t treat code as random text – it has learned syntax and semantics. It can parse code logic in a given snippet, summarize what a function does, or trace through code to find logical errors. This is extremely helpful for tasks like code review and documentation.

- Multi-Turn Dialogue about Code: Because it’s an instruct/chat model, Qwen3-Coder supports conversational interactions. You can engage in a back-and-forth dialog: for example, first ask it to write a function, then ask follow-up questions about that code, request modifications, etc. It will remember the context (up to the huge 256K token limit) and respond accordingly. This iterative approach is similar to having a smart AI pair programmer who refines the solution with you.

- Robust Reasoning and Math: Because it retains general LLM abilities, Qwen3-Coder can handle logical reasoning or math within code prompts. It can analyze algorithmic complexity, argue for why a solution is correct, or perform calculations (especially when those are needed to produce correct code). Benchmarks reportedly show strong general problem-solving skill, not just command of code syntax.

- Large-Scale Codebase Handling: With its long context window, Qwen3-Coder can ingest large codebases or multiple files at once. It can answer questions like “given these 10 files, where is the bug causing the crash?” or “refactor these classes to use a new API” because you can provide all relevant source code in the prompt. This capability sets it apart from smaller-context models – it’s built to be a true assistant for big projects, not just isolated coding puzzles. In enterprise use, one could feed an entire repository or a massive log file to the model and get meaningful analysis or generation across that broad context.
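As a rough illustration of the multi-file prompting described in the last bullet, the sketch below packs several source files into one prompt while staying under a token budget. The function names, the `### File:` layout, and the four-characters-per-token estimate are all assumptions made for this example; a real integration would count tokens with the model's tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Very rough token estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def build_repo_prompt(files, question, budget_tokens=256_000):
    """Concatenate files under a token budget.

    files maps a path to its source text. Returns (prompt, skipped_paths),
    where skipped_paths lists files that did not fit in the budget.
    """
    parts = []
    skipped = []
    used = estimate_tokens(question)
    for path, source in files.items():
        block = f"### File: {path}\n{source}\n"
        cost = estimate_tokens(block)
        if used + cost > budget_tokens:
            skipped.append(path)
            continue
        parts.append(block)
        used += cost
    parts.append(f"### Question\n{question}\n")
    return "\n".join(parts), skipped


# Tiny demonstration with a deliberately small budget.
files = {"a.py": "x = 1\n", "big.py": "y" * 4000}
prompt, skipped = build_repo_prompt(files, "Where is the bug?", budget_tokens=100)
print("skipped:", skipped)
```

Reporting the skipped files, rather than silently truncating, makes it clear when the model is answering without the full codebase in view.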

In short, Qwen3-Coder is both wide and deep in its support: wide in terms of languages/technologies covered, and deep in terms of understanding and reasoning about code. Next, we’ll dive into its core coding abilities in practice.

Core Coding Abilities

Qwen3-Coder was built to assist with virtually every aspect of programming. Let’s break down its core abilities – code generation, completion, debugging, and refactoring – and discuss how it performs each, with examples.

Code Generation from Natural Language

Code generation is the task of writing new code based on a natural language description. Qwen3-Coder excels at this. Given a prompt like “Write a quicksort algorithm in Python”, it can produce a complete, correct implementation of quicksort in Python, typically with well-formatted code and even comments. The model was trained on a large volume of programming problems and examples, so it has seen many algorithm implementations and can reproduce them or adapt them as needed.

For instance, if you ask:

User: “Generate a Python function to compute the factorial of a number using recursion.”

Qwen3-Coder will output something like:

    def factorial(n: int) -> int:
        """Compute the factorial of n recursively."""
        if n < 0:
            raise ValueError("Negative input not allowed")
        if n == 0 or n == 1:
            return 1
        return n * factorial(n - 1)

It writes the function with proper base cases and error handling. Because of the instruction tuning, it often follows best practices (like including a docstring or type hints if prompted). The model’s training with execution-based feedback helps here – it tends to generate code that passes likely test cases. In fact, Qwen3-Coder was reinforced on “hard-to-solve, easy-to-verify” tasks where generating correct output was the goal, so it has a bias toward code that actually works, not just code that looks plausible.
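The "hard-to-solve, easy-to-verify" idea can be sketched in a few lines: run a candidate solution against known input/output pairs and accept it only if every case passes. This is an illustration of the principle, not the team's training pipeline; `passes_tests` and the test cases are hypothetical names chosen for the example.

```python
def passes_tests(candidate_source, func_name, cases):
    """Execute candidate_source and check func_name against (args, expected) pairs.

    Returns False if the code fails to compile, raises, or gives a wrong answer.
    """
    namespace = {}
    try:
        exec(candidate_source, namespace)  # only run trusted or sandboxed code
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in cases)
    except Exception:
        return False


candidate = '''
def factorial(n: int) -> int:
    if n < 0:
        raise ValueError("Negative input not allowed")
    if n == 0 or n == 1:
        return 1
    return n * factorial(n - 1)
'''

# A plausible-looking but wrong variant: the base case returns 0.
buggy_candidate = '''
def factorial(n):
    if n <= 1:
        return 0
    return n * factorial(n - 1)
'''

cases = [((0,), 1), ((1,), 1), ((5,), 120)]
print("candidate passes:", passes_tests(candidate, "factorial", cases))
print("buggy candidate passes:", passes_tests(buggy_candidate, "factorial", cases))
```

Checking a solution this way is cheap even when writing it is hard, which is why such tasks suit execution-based feedback.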

Multi-language generation: You can specify the language in your prompt and Qwen3-Coder will switch accordingly. For example, “Implement quicksort in C++ using templates” will yield a C++ template function for quicksort. Because it knows 350+ languages, you could even do something unconventional like, “Write hello world in Brainfuck” – and it will do so (with the correct sequence of ><+- etc.). This is useful if you need to generate code in a less common language or migrate code between languages. The quality in each language will depend on how common it is (it’s most fluent in popular languages), but even for rarely used ones, it often can produce syntactically valid code.

Contextual awareness: If you provide context along with the request, the model will use it. For example, “Using the class defined above, write a method to_json() that serializes its fields.” – Qwen3-Coder can take into account the class definition you gave earlier in the conversation. It effectively does contextual code generation, which is great in larger projects: you can feed related code (e.g. a data model class) and ask it to generate a companion piece (e.g. a function that uses that class). The long context window means even if the class was defined hundreds of lines earlier or in another file given in prompt, the model still has it in working memory.

Code Completion and Autocompletion

Code completion is slightly different from free-form generation – here the model is given a partial snippet and asked to continue or fill in the rest. Qwen3-Coder can act as an intelligent autocompletion engine, much like how IDEs or tools like Copilot work, but with more semantic understanding.

For example, if you prompt it with the start of a function:

    def find_max(arr):
        """Return the maximum element in the array."""
        max_val = float('-inf')
        for x in arr:
            if x >

and ask it to complete, Qwen3-Coder will continue with something like:

            if x > max_val:
                max_val = x
        return max_val

It correctly infers that the user was writing a loop to find the maximum and completes the logic. It also might notice any naming or style cues (like using max_val instead of max to avoid shadowing, etc.).

The model supports IDE integration for streaming completions as well. When used in an editor plugin, Qwen3-Coder can suggest the next few lines or entire blocks of code as you type. Its understanding of programming context (indentation, scope, variable names) helps it produce relevant completions rather than generic ones. In practice, developers have integrated Qwen3-Coder with VS Code to get inline suggestions. One open-source VS Code extension called Cline (an AI coding agent plugin) supports Qwen3-Coder as a backend, enabling local autocompletion and even autonomous code editing within the IDE. Users report that with the 30B model running locally, Cline can complete code in a large project with only a few seconds of latency, effectively acting like an AI pair programmer in your editor.

Completion is not limited to extending the end of a file – Qwen3-Coder can also fill in code in the middle if you provide the appropriate prompt (for example, leaving a # TODO in a function and asking it to fill it). It will use the surrounding context to figure out what’s needed. This is powerful for boilerplate code generation: you can lay out a rough structure and let the AI fill in the mundane parts.
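One simple way to set up such a fill-in request is to split the source at the marker and show the model both sides of the gap. The helper below is a hypothetical sketch: the `<MISSING>` placeholder and the instruction wording are assumptions for illustration, not a format the model requires.

```python
def build_infill_messages(source, marker="# TODO"):
    """Split source at the first marker and ask the model for the missing code.

    Returns a chat-style message list with a single user message.
    """
    if marker not in source:
        raise ValueError(f"marker {marker!r} not found in source")
    prefix, suffix = source.split(marker, 1)
    instruction = (
        "Fill in the code that belongs at <MISSING>. "
        "Reply with only the replacement lines.\n\n"
        f"{prefix}<MISSING>{suffix}"
    )
    return [{"role": "user", "content": instruction}]


source = "def area(r):\n    # TODO\n    return result\n"
messages = build_infill_messages(source)
print(messages[0]["content"])
```

Because the code after the gap is included, the model can see that a variable named `result` must be defined by the missing lines.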

Debugging and Bug-Fixing

One of the most valuable uses of Qwen3-Coder is debugging existing code. The model can analyze code and pinpoint errors or suggest fixes, often along with explanations. This capability is enhanced by its training: Qwen3-Coder was specifically reinforced on tasks that involve execution and verification, which includes finding mistakes in code and correcting them. It’s also adept at parsing error messages or stack traces if you include those in the prompt.

A typical debugging session might look like this:

User: “The following Python function is supposed to return the maximum of a list, but it doesn’t work for all inputs. Can you find the bug and fix it?

    def find_max(arr):
        max = 0
        for x in arr:
            if x > max:
                max = x
        return max

For example, find_max([-5, -2, -9]) returns 0, which is wrong.”

Assistant (Qwen3-Coder): “The issue in this code is that it initializes max to 0. This works for arrays with non-negative numbers, but if all numbers in arr are negative, the function will incorrectly return 0 (since 0 remains larger than any negative number). The bug can be fixed by initializing max to a value that doesn’t bias the result, e.g., the first element of the array or float('-inf'). Here’s the corrected function:”

    def find_max(arr):
        if not arr:
            raise ValueError("Empty array")
        max_val = arr[0]  # start with the first element
        for x in arr:
            if x > max_val:
                max_val = x
        return max_val

In this response, Qwen3-Coder first **explained the root cause** of the bug (initializing to 0) and then provided a corrected code snippet. This kind of reasoning – understanding why the output was wrong and how to fix it – is where the model’s training on “easy to verify” tasks pays off. It knows a correct solution must handle all cases, so it recognized the edge case of all-negative input.

Qwen3-Coder can also debug by reading **error messages**. If you supply a compilation error or a stack trace along with the code, it will interpret the error and suggest a fix. For instance, in C++ if you get a template substitution failure, you can paste the error log; the model will analyze which line likely caused it and why, and propose changes. Similarly for Python exceptions – provide the traceback and it can often identify the offending line and logic.
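A debugging prompt of this kind is easy to assemble automatically. The sketch below runs a function, captures the traceback if it fails, and bundles code and traceback into one prompt. The helper name and prompt wording are assumptions made for illustration.

```python
import traceback


def build_debug_prompt(source, func_name, *args):
    """Run func_name from source; on failure, bundle the code and traceback.

    Returns an empty string when the call succeeds, since there is
    nothing to debug in that case.
    """
    namespace = {}
    try:
        exec(source, namespace)  # only run trusted or sandboxed code
        namespace[func_name](*args)
    except Exception:
        trace = traceback.format_exc()
        return (
            "This code raises an exception. Identify the cause and propose a fix.\n\n"
            f"Code:\n{source}\n\nTraceback:\n{trace}"
        )
    return ""


buggy = "def average(values):\n    return sum(values) / len(values)\n"
prompt = build_debug_prompt(buggy, "average", [])
print(prompt)
```

Sending the full traceback rather than just the final error line gives the model the failing line and call chain to reason about.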

Another powerful aspect is multi-file or context-aware debugging: because of the long context, you can give Qwen3-Coder *multiple* pieces of a program (e.g. two modules that interact) and ask it to find the bug in the interaction. It can track values and function calls across files. This is something traditional static analysis often fails at, but a large-context model can do in a holistic way.

### Refactoring and Code Optimization

Beyond writing new code and fixing bugs, Qwen3-Coder can help **refactor** and improve existing code. Refactoring might include tasks like renaming variables for clarity, restructuring functions to reduce duplication, improving algorithmic efficiency, or updating a code snippet to use a different library or API.

For example, you might ask: *“Refactor the following Java method to be more readable and use Java 8 streams where appropriate.”* – and provide a block of Java code. Qwen3-Coder will then output a revised version of that method, perhaps breaking down a complex loop into smaller functions, or replacing an old-style loop with a `Stream` operation, while preserving the functionality. It often also explains its changes if prompted to do so.

The model’s strength in refactoring comes from its broad knowledge of **coding best practices** and modern idioms in many languages. It has seen clean code examples as well as messy ones, so it can infer what “more readable” or “more Pythonic” might mean, for instance. It also knows about performance considerations – e.g., it might suggest using a dictionary for lookups instead of a list if it recognizes a certain pattern, or using tail recursion optimizations if available.
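As a small, hand-written illustration of the list-to-dictionary suggestion mentioned above, compare a repeated linear scan with a one-time index. The function names and sample data are hypothetical, and this is not model output.

```python
def price_of_slow(catalog, name):
    """Original pattern: scan a list of (name, price) pairs on every lookup."""
    for item_name, price in catalog:
        if item_name == name:
            return price
    return None


def build_index(catalog):
    """Refactored: build a dictionary once so each lookup is O(1) on average."""
    index = {}
    for item_name, price in catalog:
        index.setdefault(item_name, price)  # keep the first match, like the scan
    return index


catalog = [("apple", 3), ("pear", 5), ("apple", 4)]
index = build_index(catalog)

# Both versions agree, including for a missing item.
for name in ("apple", "pear", "kiwi"):
    print(name, price_of_slow(catalog, name), index.get(name))
```

Using `setdefault` preserves the original behavior for duplicate names, which is the kind of detail worth checking when reviewing a refactor.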

Another refactoring use-case is **code modernization**: e.g. “Here is some legacy Python 2 code, convert it to Python 3 and modern practices.” Qwen3-Coder can handle that by adjusting print statements, removing old libraries, using f-strings, etc. Similarly, it can help port code from one framework to another (like converting TensorFlow 1.x code to TensorFlow 2.x, or switching a piece of code to use a different HTTP library).

**Optimization** tasks are also in scope. If you have a working solution but need it faster or less memory-intensive, you can ask Qwen3-Coder to optimize it. It might unroll a loop, use bit operations, or suggest a more efficient algorithm that it knows. Of course, it’s an AI so it won’t always find the absolute best solution, but it often provides a solid improvement or at least a different perspective that you can build upon.

One thing to note: when refactoring large blocks or multiple files, it’s often useful to do it interactively – e.g., ask Qwen3-Coder to refactor one part, then review the output and possibly ask for further changes. Because it’s conversational, you can incrementally guide the refactoring process to match your style and requirements.

## Using Qwen3-Coder in Python (Integration Examples)

Qwen3-Coder is available through multiple interfaces, making it convenient to integrate into your development workflow or applications. In this section, we’ll show how to use the model programmatically in Python – both via the open-source model weights (using Hugging Face Transformers) and via an API.

### Loading the Model with Hugging Face Transformers

If you have the hardware resources, you can download the Qwen3-Coder model and run it locally using the Transformers library. The largest 480B model is extremely demanding (it requires multiple high-memory GPUs or TPU pods), but a smaller 30B variant is also available which can be run on a modest server or high-end PC with enough VRAM (or using 4-bit quantization for even lower requirements). Here’s a minimal example of loading Qwen3-Coder and generating text with it using Transformers:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "Qwen/Qwen3-Coder-480B-A35B-Instruct"

tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto",  # automatically distribute across available GPUs
)

# Prepare a prompt for code generation
prompt = "## Task: Write a function that checks if a number is prime.\n# Solution in Python:\n"
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

outputs = model.generate(**inputs, max_new_tokens=200)
result = tokenizer.decode(outputs[0][inputs['input_ids'].size(1):], skip_special_tokens=True)
print(result)
```

In this snippet, we load the instruct-tuned model and tokenizer from Hugging Face Hub. We then craft a prompt that asks for a prime-checking function in Python (using a few comment tokens to set context, which Qwen3-Coder understands well) and generate up to 200 new tokens as the completion. Setting device_map="auto" spreads the model across the available GPUs, which is usually necessary given the model’s size. The printed result is the Python code for the prime-check function.

Note: To run this, make sure you have a sufficiently new transformers version (>=4.51). Older versions do not recognize the Qwen3 MoE model type and fail with KeyError: 'qwen3_moe'. Always use the latest tokenizer and model files provided, since Qwen3-Coder introduced new special tokens for tool use that the updated tokenizer handles.

Using the model locally gives you full control: you can adjust generation parameters (temperature, top_p, etc.), use the model in a batch setting, or fine-tune it further if needed. However, running the 480B model requires extraordinary resources. At 16-bit precision the weights alone occupy roughly 960 GB, so expect to need hundreds of GB of accelerator memory even with quantization, along with a sharding-capable setup such as DeepSpeed or Accelerate. The 30B model (sometimes called Qwen3-Coder-Flash) is much more tractable for local use, with quantized versions available that can run in under 40GB of memory. Many community tools (Ollama, LM Studio, text-generation-webui, etc.) have added support for Qwen3-Coder, so you can also use those to run it with less code.
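For a back-of-the-envelope sense of these hardware requirements, weight memory is simply parameter count times bytes per parameter. The sketch below covers the weights only; the KV cache, activations, and framework overhead add to these numbers, and the helper name is made up for this example.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * 1e9 * bits_per_param / 8 / 1e9


for name, params in [("480B", 480), ("30B", 30)]:
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: about {gb:.0f} GB for weights")
```

By this estimate the 30B model needs about 15 GB for weights at 4-bit, consistent with quantized builds fitting in under 40GB, while the 480B model stays in the hundreds of GB even at 4-bit.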

OpenAI-Compatible API Integration

If you prefer to use Qwen3-Coder as a service (or don’t have the hardware to host it), Alibaba provides API access via Model Studio on Alibaba Cloud. The API is designed to be OpenAI API compatible, meaning you can use existing OpenAI SDKs/clients by just pointing them to the Qwen endpoint. This makes integration into existing applications very straightforward.

For example, using OpenAI’s Python library, you can set the endpoint and API key for Qwen3-Coder like so:

    from openai import OpenAI

    client = OpenAI(
        api_key="YOUR_API_KEY_HERE",  # Model Studio API key from Alibaba Cloud
        base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",  # Qwen API endpoint (intl region)
    )

    response = client.chat.completions.create(
        model="qwen3-coder-plus",
        messages=[{"role": "user", "content": "Write a Python function to compute Fibonacci numbers."}],
    )

    print(response.choices[0].message.content)

This uses the client interface of the openai package from version 1.0 onward; older releases exposed the same call as openai.ChatCompletion.create. Here we used model="qwen3-coder-plus", which is the hosted model name corresponding to the latest Qwen3-Coder (the “plus” variant refers to the full 480B model). Once configured, the usage is identical to calling OpenAI’s GPT models: you provide a list of messages with roles (user, assistant, etc.), and you get a response. The value of response.choices[0].message.content will be the code or answer from Qwen3-Coder.

The Alibaba Model Studio offers a generous free quota (for instance, 1M input and 1M output tokens free for new users) and then a tiered paid plan beyond that. The tiered pricing is based on context length – shorter prompts are cheaper, very long prompts (e.g. >128k tokens) cost more. This structure encourages using the long context capability when you truly need it, but you only pay extra when you go beyond certain thresholds.

It’s worth noting that OpenRouter (an API service that routes to various open models) also provides access to Qwen3-Coder. Through OpenRouter, you can call Qwen3-Coder-480B for free (at the time of writing) with an OpenAI-compatible interface. They handle finding a provider that can run your request. This is a convenient way to experiment with the model via API without dealing with infrastructure.

Between the local HuggingFace approach and the cloud API approach, developers have flexibility. For quick prototyping or light usage, the API might be easiest. For heavy lifting or sensitive code (where you want to keep everything on-premise), running the model on your own machines with the open source weights is the way to go.

Agent-Based Coding Workflows

A defining feature of Qwen3-Coder is its agentic capabilities – the ability to use tools and act autonomously in a coding workflow. In practice, this means the model can do more than just emit text; it can decide to call a function (tool) to perform an action like executing code, reading or writing a file, searching documentation, etc., as part of solving a problem. This opens up exciting possibilities for automating multi-step development tasks.

Function Calling and Tool Use

Qwen3-Coder supports a function calling API similar to OpenAI’s function call interface. You as the developer can define a set of tools (each tool has a name, description, and JSON schema for parameters). When you send a prompt, you include these tool definitions. The model can then choose to output a tool invocation instead of a direct answer, if it decides an action is needed.

For example, imagine you ask Qwen3-Coder: “Create a file named quick_sort.py and write a Python quicksort implementation into it.” – This is not a simple text generation request; it implies a file operation. With tool use enabled, Qwen3-Coder might respond with a structured function call like write_file rather than dumping the code in the chat. In fact, the Alibaba documentation shows this exact scenario: when asked to “Write a Python script for quick sort and name it quick_sort.py.”, Qwen3-Coder returned a tool call for the write_file function with the file path and content (the quicksort code) as arguments. The calling application would see this in the tool_calls field of the API response. The application can then actually create the file and write the provided content to it, and finally return control to the model (or user). This way, the code gets written to a file on disk as requested, demonstrating autonomous coding actions.

Other tools might let the model execute code, or run tests. You could define a run_code tool that executes a given snippet and returns the output. Qwen3-Coder could then solve a problem by writing some code, calling run_code, seeing the output or any error, and then adjusting its approach – essentially debugging itself. This is analogous to what it was trained for with long-horizon RL: multi-turn interactions with an environment.
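A run_code tool of the kind described here could be sketched as follows. It runs a Python snippet in a separate interpreter process and returns the exit code and captured output. The function name, return shape, and timeout are assumptions for illustration, and a real deployment would need proper sandboxing, since this runs model-written code with your user's permissions.

```python
import subprocess
import sys


def run_code(source, timeout_s=10.0):
    """Run a Python snippet in a separate interpreter and capture its output.

    Returns a dict with exit_code, stdout, and stderr so the result can be
    serialized and handed back to the model.
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return {"exit_code": None, "stdout": "", "stderr": "timed out"}
    return {"exit_code": proc.returncode, "stdout": proc.stdout, "stderr": proc.stderr}


ok = run_code("print(1 + 1)")
failed = run_code("raise ValueError('boom')")
print(ok["exit_code"], ok["stdout"].strip())
print(failed["exit_code"], failed["stderr"].strip().splitlines()[-1])
```

Returning stderr alongside the exit code matters: the traceback is what lets the model adjust its next attempt.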

Out of the box, Alibaba provides a CLI tool called Qwen Code that sets up a suitable environment for such agentic coding. Qwen Code is an open-source command-line tool (Node.js based) adapted from Gemini CLI and customized to work with Qwen3-Coder’s function-calling format. With Qwen Code, you can type qwen in your terminal (after some setup) and get an interactive session where the model can use tools. Under the hood, you configure it with your Qwen API key and endpoint, or point it at a locally hosted model. The CLI takes care of routing the model’s tool call outputs to actual shell commands or file operations. This means you could say, “Create a new React app and add a component that fetches data from an API,” and the model might sequentially call tools to initialize a project, create files, and so on, turning a high-level request into concrete actions and code.

The VS Code plugin Cline we mentioned earlier also leverages Qwen3-Coder’s agentic abilities. Cline can execute commands in the VS Code terminal on the model’s behalf. So if Qwen3-Coder decides to run tests or format code, Cline will carry that out and feed the results back. Essentially, Qwen3-Coder + Cline together function like an autonomous coding assistant inside your editor, able to make changes and verify them. This is similar to closed-source systems like GitHub Copilot Labs or Replit’s Ghostwriter autopilot, but using an open model.

It’s important to note that tool use is optional: if you don’t enable it, Qwen3-Coder will just produce answers as plain text. Enabling it unlocks these advanced workflows. The model was designed with a “specially designed function call format” to make tool integration smooth. When using the OpenAI-compatible API, you pass tool definitions in the tools parameter (as JSON schemas), and the model may return an assistant message whose tool_calls field names the tool and its arguments. The Alibaba docs highlight that after the model returns a tool name and parameters, you execute the tool and then continue the conversation, providing the results back to the model (if the tool produced output). This loop continues until the task is complete.
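The application side of this exchange can be sketched without any network calls. Below, a write_file tool is declared in the OpenAI-style tool schema (an assumption consistent with the tool_calls field mentioned earlier), and a handler executes a simulated call. The schema details, handler name, and path check are illustrative choices, not the documented Qwen setup.

```python
import json
import tempfile
from pathlib import Path

# Tool definition in the OpenAI-style "tools" format. The name mirrors the
# write_file example above; the field descriptions are illustrative.
WRITE_FILE_TOOL = {
    "type": "function",
    "function": {
        "name": "write_file",
        "description": "Write text content to a file, creating it if needed.",
        "parameters": {
            "type": "object",
            "properties": {
                "path": {"type": "string", "description": "Relative file path"},
                "content": {"type": "string", "description": "Text to write"},
            },
            "required": ["path", "content"],
        },
    },
}


def handle_tool_call(name, arguments_json, workdir):
    """Execute one tool call and return a short result string for the model."""
    if name != "write_file":
        return f"error: unknown tool {name!r}"
    args = json.loads(arguments_json)
    target = (workdir / args["path"]).resolve()
    # Refuse paths such as "../x" that would land outside the working directory.
    if workdir.resolve() not in target.parents:
        return "error: path escapes the working directory"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(args["content"], encoding="utf-8")
    return f"wrote {len(args['content'])} characters to {args['path']}"


# Simulated tool call, shaped like what a model might return.
workdir = Path(tempfile.mkdtemp())
call_args = json.dumps({"path": "quick_sort.py", "content": "print('sorted')\n"})
print(handle_tool_call("write_file", call_args, workdir))
```

The string returned by the handler is what you would send back to the model as the tool result before asking it to continue.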

Example Agentic Workflow

To make this concrete, let’s outline a possible real-world use case combining these pieces:

- Task: “Add a feature to an existing project” – Suppose you have a Python project and you want to add a new API endpoint and also update the documentation.

- Step 1 (User prompt): You instruct Qwen3-Coder in a high-level way: “Add a new endpoint /status to our Flask app that returns a JSON with version info. Also update the README with an example curl call.” You also provide the relevant files in the context (the Flask app code and README content, thanks to the long context ability).

- Step 2 (Model analysis): Qwen3-Coder reads the Flask app code, identifies where endpoints are defined, and formulates a plan. It might decide: need to edit app.py to add a route, and edit README.md to add documentation.

- Step 3 (Tool calls): The model outputs a write_file call for app.py with new code inserted (the new route function), and another write_file for README.md with an added snippet. These appear as two function call responses.

- Step 4 (Environment execution): Your agent controller (could be Qwen Code CLI or a custom script using the API) sees the tool calls and writes the changes to disk. Perhaps it then runs the app’s tests by invoking a run_tests tool or similar.

- Step 5 (Feedback): Suppose one test failed because the version info was expected to be an int but you returned a string. The run_tests tool returns the failing test output to the model.

- Step 6 (Model adjusts): Qwen3-Coder receives the test feedback and realizes it should cast the version number to int. It then returns another write_file call to modify the code accordingly.

- Step 7: Repeat until tests pass. Finally, the model may output a message like “All done. The /status endpoint is added and documentation updated.” as a normal assistant message.

This kind of auto-coding loop is exactly what Qwen3-Coder was built to handle with its combination of tool usage and long-horizon planning. It’s essentially an AI agent that can carry out high-level development tasks with minimal human intervention.
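The control flow of such a loop can be sketched with a scripted stand-in for the model, which keeps the example runnable without an API. Everything here is hypothetical: `scripted_model` hard-codes the write, test, fix, and retest sequence from the steps above, and the tools operate on an in-memory dictionary rather than real files.

```python
files = {}  # in-memory stand-in for the project directory


def write_file(path, content):
    """Tool: store file content and report what was written."""
    files[path] = content
    return f"wrote {path}"


def run_tests():
    """Tool: a toy test that requires VERSION in app.py to be an int."""
    namespace = {}
    exec(files["app.py"], namespace)
    if isinstance(namespace["VERSION"], int):
        return "PASSED"
    return "FAILED: VERSION must be an int"


def scripted_model(history):
    """Stand-in for the model: returns either a tool call or a final message."""
    last = history[-1]
    if last["role"] == "user":
        return {"tool": "write_file", "args": {"path": "app.py", "content": "VERSION = '1'"}}
    if last["content"].startswith("wrote"):
        return {"tool": "run_tests", "args": {}}
    if last["content"].startswith("FAILED"):
        return {"tool": "write_file", "args": {"path": "app.py", "content": "VERSION = 1"}}
    return {"message": "All done. Tests pass."}


def run_agent(task, model, tools, max_steps=10):
    """Call the model, execute any tool it requests, and feed the result back."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = model(history)
        if "message" in reply:  # a normal answer ends the loop
            history.append({"role": "assistant", "content": reply["message"]})
            return history
        result = tools[reply["tool"]](**reply["args"])
        history.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


tools = {"write_file": write_file, "run_tests": run_tests}
history = run_agent("Add a /status endpoint", scripted_model, tools)
for message in history:
    print(f"{message['role']}: {message['content']}")
```

The max_steps cap is a deliberate design choice: an agent that never reaches a passing state should stop and hand control back rather than loop indefinitely.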

Of course, such power should be used carefully – always review changes an AI makes to your codebase! But it can dramatically speed up rote work (like adding boilerplate endpoints, updating multiple files consistently, etc.) and let developers focus on the higher-level design.

Real Developer Use Cases

Let’s consider a few concrete scenarios where Qwen3-Coder can be applied in a developer’s day-to-day or in a software team’s workflow:

1. Implementing Features from Specs: A developer can paste a chunk of a design spec or user story and ask Qwen3-Coder to draft the implementation. For example, given the prompt “Here’s the spec for the user authentication flow…” together with the project’s existing code as context, the model can generate the code for login/logout. This speeds up writing boilerplate or repetitive code aligned with a specification.

2. Code Review Assistant: During code reviews, Qwen3-Coder can be used to automatically analyze a pull request. A developer could feed the diff of a PR (which might be tens of thousands of lines, but still within 256K tokens) to the model and ask “Does this change have any potential issues or improvements?” The model might catch things like missing error checks or potential bugs, or suggest more idiomatic usage of an API. It could even generate comments that the reviewer can then polish and post. Essentially, it’s like a second pair of eyes that knows the code.

3. Debugging in CI/CD: Imagine a continuous integration system where if a test fails, Qwen3-Coder is invoked to analyze the failure and propose a patch. For instance, a failing test’s log and the relevant code could be given to the model. The model might then return a patched code snippet. While you wouldn’t auto-merge such a patch without review, it provides a quick starting point for developers to fix the issue. Over time, this could evolve into an automated remediation system for certain types of bugs.

4. Multi-Language Codebase Management: In a project that has multiple languages (say a backend in Java, frontend in TypeScript, some DevOps scripts in Bash, etc.), Qwen3-Coder can operate across the whole stack. You could ask it something like: “Our API changed in the backend, find all client code (TypeScript) that calls this API and update it accordingly.” The model can ingest both the Java and TS parts of the repo and identify the relevant pieces to change. It might output patches for each file. This cross-language understanding is extremely useful for large systems.

5. Learning and Documentation: For an individual developer, Qwen3-Coder can serve as a tutor. If you come across a code snippet in an unfamiliar language or framework, you can ask Qwen3-Coder “What does this code do?” or “Explain this in simpler terms.” Because it was trained heavily on code, it often provides accurate explanations. It can also answer conceptual questions (thanks to retained general knowledge) like “What is the difference between quick sort and merge sort?” or “Explain the actor model in concurrency,” which might come up while coding.

6. Writing Tests and Docs: It’s not only about code – the model can generate unit tests given a function (often a good practice to ensure correctness). For example, “Write pytest unit tests for the above function including edge cases.” It will produce test code. It can similarly draft documentation comments or even Markdown docs for your project. A developer can then verify and refine these rather than writing from scratch.

7. Agentic DevOps tasks: Thanks to tool use, Qwen3-Coder could be part of an automation pipeline. For example, using it with appropriate tools, one could ask: “Deploy my application to AWS.” The model might generate Terraform scripts or AWS CLI commands, call a tool to apply them, verify deployment, etc. This blurs the line between coding and operations – the AI can assist in writing code to perform ops tasks and execute them. (This is a more experimental use case, but within reach given the agent framework.)
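As an illustration of the code review scenario (item 2), the sketch below wraps a diff in role-based chat messages. It only builds the request; nothing is sent. The function name `build_review_messages`, the `BEGIN DIFF`/`END DIFF` delimiters, and the `MAX_DIFF_CHARS` guard are assumptions made for this example, not part of any Qwen tooling.

```python
# Arbitrary size guard chosen for this sketch, not a documented limit.
MAX_DIFF_CHARS = 400_000

def build_review_messages(diff: str) -> list[dict]:
    """Wrap a unified diff in chat messages that ask for a review."""
    if not diff.strip():
        raise ValueError("empty diff")
    if len(diff) > MAX_DIFF_CHARS:
        raise ValueError("diff too large for one request; split it by file")
    system = (
        "You are a careful code reviewer. Point out potential bugs, "
        "missing error checks, and non-idiomatic API usage."
    )
    user = (
        "Does this change have any potential issues or improvements?\n\n"
        "--- BEGIN DIFF ---\n" + diff + "\n--- END DIFF ---"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]

example_diff = "diff --git a/app.py b/app.py\n+    return jsonify(version=VERSION)\n"
messages = build_review_messages(example_diff)
print(messages[1]["content"])
```

The resulting list can be passed as the `messages` argument of whichever chat-style client you use. The same pattern would fit the CI scenario (item 3) by swapping the diff for a failing test log plus the relevant source file.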

Each of these scenarios has been tried in some form by early adopters of Qwen3-Coder. The common theme is that the model can significantly accelerate tasks that involve writing or reasoning about code, especially when those tasks can be specified in natural language. It’s like having a very knowledgeable junior developer who works at incredible speed and doesn’t mind doing tedious tasks – but still needs oversight for critical thinking and final decisions.

Limitations and Best Practices

While Qwen3-Coder is a cutting-edge tool, it’s important to be aware of its limitations and to follow best practices for optimal results:

Limitations:

Resource Intensive (for 480B model): The largest model is huge – running Qwen3-Coder-480B yourself requires massive computational resources (hundreds of GB of memory for full inference). Most users will prefer either the 30B model locally or using the cloud API for the 480B. Even the 30B model is not tiny; plan accordingly for hardware or use optimized runtimes/quantization.

Latency: With great scale comes some latency. Even with GPU acceleration, processing hundreds of thousands of tokens of context (in a long-context scenario) or running the large MoE model may be slower than using smaller models. In interactive settings, you might experience a delay of a few seconds for medium-length outputs and longer for very large outputs. This is generally acceptable for many coding tasks, but for rapid keystroke-by-keystroke autocomplete, you might use the smaller model or accept slightly slower suggestions than, say, a local code indexer. The development of quantized versions and inference optimizations is ongoing to improve this.

Accuracy is High but Not Perfect: Although Qwen3-Coder was trained to produce correct and executable code, it’s not infallible. It may sometimes produce code that has subtle bugs or doesn’t handle an edge case. It can also hallucinate, for example by referencing a library function that doesn’t exist or assuming a variable has a certain type. Always review and test the model’s output, especially before using it in production. The advantage is that if you do catch an issue, you can feed it back for the model to fix (the interactive loop), but this requires vigilance.

Security Considerations: If you enable tool use, be cautious about which tools you allow the model to call and on what input. The model could attempt to execute arbitrary code or shell commands if prompted maliciously. In a controlled environment (like your own machine or a sandboxed container), this is manageable, but you wouldn’t want to hook Qwen3-Coder up to production infrastructure with full permissions and then let anyone prompt it. Treat it like you would treat a script running with your privileges – limit its scope when possible (for instance, maybe only allow file read/write in a temp directory, or execution in a sandbox).

No Hard Guarantee of Intent: The model will do its best to follow instructions, but if instructions are ambiguous or conflict (e.g., “write code that does X” vs actual code context that suggests Y), it might produce confusing results. It doesn’t truly understand your project’s intent beyond what’s given in the prompt, so sometimes the suggestions might be off-target. Prompt clarity is key to mitigating this.
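To make the sandboxing advice under Security Considerations concrete, here is one way a file-writing tool could be restricted to a single directory. It is a sketch under assumptions: `safe_write_file` is a hypothetical helper name, and it relies on `Path.is_relative_to`, which requires Python 3.9 or later. Resolving the path before the check means that `..` segments, absolute paths, and symlinks pointing outside the sandbox are all rejected.

```python
import tempfile
from pathlib import Path

def safe_write_file(sandbox: Path, relative_path: str, content: str) -> Path:
    """Write content only if the resolved target stays inside the sandbox."""
    root = sandbox.resolve()
    target = (root / relative_path).resolve()
    if not target.is_relative_to(root):  # Python 3.9+
        raise PermissionError(f"refusing to write outside sandbox: {relative_path}")
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(content, encoding="utf-8")
    return target

with tempfile.TemporaryDirectory() as tmp:
    sandbox = Path(tmp)
    # A path inside the sandbox is written normally.
    safe_write_file(sandbox, "src/app.py", "print('ok')\n")
    # A path that escapes the sandbox is refused.
    try:
        safe_write_file(sandbox, "../escape.txt", "nope")
    except PermissionError as err:
        print(err)
```

A check like this limits where the model can write, but it does not replace running the whole agent in a container or under a low-privilege account.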

Best Practices:

Provide Clear, Specific Prompts: The more specific you can be in your request, the better the output. Instead of “optimize this code”, you might say “optimize this code for speed and clarify variable naming.” If generating a new function, mention any constraints or preferred libraries. When asking for debugging help, include the error message and highlight the problematic behavior. Context is the model’s fuel: give it what it needs.

Use System/Role Messages for Guidance: If using the chat format, you can include a system message to set the tone or rules. For example, a system message like: “You are an expert Python developer. Provide only the code solution without extra commentary unless asked.” can make the assistant focus and format output as desired. Qwen3-Coder follows these instructions well since it’s an instruct model.

Iterate with the Model: Don’t expect a giant complex task to be done in one shot. Break problems down and use multiple turns. For instance, first ask it to outline an approach, then verify the outline, then generate code for each part. This stepwise approach often yields better and more manageable outputs than one massive request.

Take Advantage of Long Context Wisely: Just because you can feed 200K tokens doesn’t always mean you should. Identify what’s relevant for the task. If only certain files are needed for a bug fix, provide those rather than the whole repo. The model will focus better with pertinent information, and you’ll avoid unnecessary token usage (which could incur cost on API and slow down processing).

Keep Sensitive Code Local: If you have proprietary code, you might opt to use the local model deployment instead of an API, to avoid sending code to a third-party server. Alibaba’s cloud promises security, but general best practice is to not share highly sensitive code with any external service. Fortunately, Qwen3-Coder being open source means you have that choice.

Monitor Output Length and Quality: Sometimes the model might produce an excessively long answer or go on a tangent (e.g., overly detailed explanation). You can control this with parameters like max_tokens or by explicitly instructing the style (“give a brief answer” vs “explain in detail”). If it starts drifting, gently steer it with a follow-up instruction.

Update to Latest Versions: The Qwen team is actively improving the model and tooling. Make sure to use the latest model checkpoints and tokenizer. They have fixed issues (like a tool-calling bug in initial releases that was later patched). Also, keep your transformers library updated as they add support for Qwen features (like new attention mechanisms or quantization support).
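Several of the practices above (a guiding system message, sending only the relevant files, and capping output length) can be combined when assembling a request. The sketch below builds a chat-completions-style payload without sending it. The helper name `build_request`, the `# File:` header format, and the model name `"qwen3-coder"` are placeholders assumed for this example; use the identifier your deployment or provider actually exposes.

```python
def build_request(task: str, files: dict[str, str], relevant: list[str],
                  max_tokens: int = 1024) -> dict:
    """Assemble a chat request that includes only the files needed for the task."""
    # Only the paths listed in `relevant` are added to the prompt.
    context = "\n\n".join(f"# File: {path}\n{files[path]}" for path in relevant)
    return {
        "model": "qwen3-coder",    # placeholder name, adjust for your deployment
        "max_tokens": max_tokens,  # caps the length of the generated answer
        "messages": [
            {
                "role": "system",
                "content": ("You are an expert Python developer. Provide only the "
                            "code solution without extra commentary unless asked."),
            },
            {"role": "user", "content": f"{task}\n\n{context}"},
        ],
    }

repo = {
    "app.py": "def status():\n    return {'version': '1'}\n",
    "README.md": "# Demo project\n",
}
request = build_request("Return the version as an int.", repo, ["app.py"], max_tokens=256)
print(request["messages"][1]["content"])
```

Passing the relevant paths explicitly keeps the prompt small and makes it obvious, when reviewing a request, which code was actually shared with the model.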

By being mindful of these points, you can harness Qwen3-Coder effectively while avoiding common pitfalls.

FAQs for Developers

What model sizes of Qwen3-Coder are available?

Does Qwen3-Coder work in a chat/conversational mode or only one-shot?

<|im_start|> tokens) for multi-turn dialogues. When using the OpenAI-compatible API, you naturally use the chat format with role-based messages. Qwen3-Coder will maintain context across turns (up to the huge token limit) and you can clarify or refine queries in multiple rounds. The instruct fine-tuning ensures it responds helpfully to each user message. So you can treat it just like you would ChatGPT or similar, but specialized for code: ask a question, get an answer, ask a follow-up, and so on, in one session.

Can I fine-tune Qwen3-Coder on my own codebase or domain?

What is “agentic coding” in one sentence? Do I need to use it?

What are the hardware requirements to run Qwen3-Coder locally?

By leveraging Qwen3-Coder’s powerful coding and reasoning abilities, developers and teams can automate tedious programming tasks, gain instant expertise across multiple languages, and even explore autonomous coding agents. This article has provided a deep dive into what Qwen3-Coder offers and how to use it effectively. As with any AI tool, the key is to integrate it thoughtfully – let it handle the heavy lifting of code generation and analysis, while you guide it with your intent and critical judgment. Happy coding with Qwen3-Coder!