Qwen3-Max is the latest flagship of Alibaba’s Qwen series of large language models (LLMs), distinguished by its massive scale (over 1 trillion parameters) and advanced capabilities in reasoning, coding, and agentic tasks. Released in 2025, Qwen3-Max represents a leap forward from previous Qwen AI models, integrating cutting-edge features to tackle complex technical problems.

It has been pretrained on a massive 36 trillion tokens and can handle extremely long inputs: its context window is roughly 256K tokens and can in principle be extended to about one million tokens, far beyond most AI systems today. Unlike the smaller Qwen3 models, which are open-source, Qwen3-Max is offered as a cloud API (closed-weight), targeting enterprise users who need maximum performance at scale.

This model currently ranks among the top global LLMs on benchmarks for coding, reasoning, and knowledge tasks, even surpassing some competing frontier models in certain evaluations. The result is a professional-grade AI system built for developers and researchers, emphasizing reliability and structured outputs over casual chat gimmicks.

In this article, we dive into Qwen3-Max’s architecture, capabilities, and integration workflows, providing a comprehensive guide for engineers looking to adopt it in enterprise AI applications.

Architecture and Technical Overview

At its core, Qwen3-Max follows a Transformer-based Mixture-of-Experts (MoE) architecture – a design that enables scaling to trillions of parameters without sacrificing efficiency.

The model is composed of many expert subnetworks, of which only a few are activated per query. This architecture, combined with Alibaba’s global-batch load balancing loss technique, ensures stable training even at extreme scales. In fact, the Qwen3-Max pretraining ran smoothly with no loss spikes or instabilities, avoiding the crashes or resets often seen in ultra-large model training.

The team credits this stability to their MoE design and training optimizations, which kept the loss curve consistently smooth without needing any manual resets.
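To make the idea of "only a few experts per token" concrete, here is a minimal pure-Python sketch of top-k expert routing. It uses the 128-expert, 8-active configuration of the open Qwen3-235B model (mentioned below) as illustrative defaults. The balancing term is a generic Switch-Transformer-style auxiliary loss computed over a whole batch; it is an assumption for illustration, and Qwen's actual router and global-batch load balancing loss are not public in this form and may differ.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, k=8):
    """Pick the top-k experts for one token and renormalize their gate weights.

    Returns {expert_index: weight}; only these k experts would run for the token.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in top)
    return {i: probs[i] / kept for i in top}


def load_balance_loss(batch_router_logits, k=8):
    """Generic auxiliary loss num_experts * sum_i(f_i * P_i), computed over the whole batch.

    f_i: fraction of routing slots assigned to expert i.
    P_i: mean router probability given to expert i.
    Uniform probabilities with balanced assignments give 1.0; if tokens collapse
    onto the same few experts the value grows (at most num_experts / k).
    """
    num_experts = len(batch_router_logits[0])
    counts = [0] * num_experts
    prob_sums = [0.0] * num_experts
    for logits in batch_router_logits:
        for i, p in enumerate(softmax(logits)):
            prob_sums[i] += p
        for i in route_token(logits, k):
            counts[i] += 1
    tokens = len(batch_router_logits)
    f = [c / (tokens * k) for c in counts]
    p_mean = [s / tokens for s in prob_sums]
    return num_experts * sum(fi * pi for fi, pi in zip(f, p_mean))


if __name__ == "__main__":
    random.seed(0)
    batch = [[random.gauss(0, 1) for _ in range(128)] for _ in range(64)]
    gates = route_token(batch[0])
    print(sorted(gates), round(sum(gates.values()), 6))  # 8 expert ids; weights sum to 1
    print(round(load_balance_loss(batch), 3))
```

Adding a loss like this to the training objective penalizes routers that send most tokens to the same experts, which is one common way MoE training keeps all experts in use.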

To train Qwen3-Max on 36 trillion tokens, Alibaba employed a highly optimized distributed pipeline. Using the PAI-FlashMoE library for multi-level parallelism, they achieved a 30% higher model FLOPs utilization compared to the previous generation (Qwen2.5-Max). Notably, Qwen3-Max was trained with sequences up to 1M tokens in length using a custom “ChunkFlow” strategy that delivered a 3× throughput improvement over standard context-parallel training.

This allowed the model to ingest extremely long contexts during training, laying the groundwork for its long-context inference capabilities. Additional engineering techniques – such as SanityCheck and EasyCheckpoint – were used to improve fault tolerance, cutting downtime from hardware failures to about 20% of what earlier runs experienced. In summary, Qwen3-Max’s training process was remarkably efficient and robust, combining MoE scaling with novel optimizations to “just scale it” up to trillion-parameter territory.

In terms of model configuration, Qwen3-Max aligns with the design paradigm of the Qwen3 series. (While exact layer counts and expert counts for Qwen3-Max aren’t publicly disclosed, the open-source Qwen3-235B model uses 128 experts with 8 active per token, giving a sense of the MoE structure.) Like other Qwen3 models, it supports hybrid “thinking” and “non-thinking” modes within one model.

This means the model can perform step-by-step reasoning when needed or output direct answers when a quick response suffices, all within a unified framework. Qwen3-Max also supports the “thinking budget” mechanism introduced with the Qwen3 series, letting developers trade off latency against reasoning depth by allocating more compute to harder queries. In effect, one can dial up the model’s internal reasoning for complex tasks or dial it down for faster but shallower responses, balancing quality and speed during inference.
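How Qwen3-Max enforces its thinking budget internally is not public. The sketch below illustrates one simple scheme, in the spirit of the early-stop approach the Qwen3 technical report describes for the open models as we understand it: let the model reason until a token budget is exhausted, then close the reasoning block with a stop-thinking message and produce the final answer from what was kept. The stop message wording, `fake_reasoning_stream`, and `fake_answer` are hypothetical stand-ins for real decoding.

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical early-stop message; the wording a real system inserts may differ.
STOP_THINKING = "Considering the limited time, I have to answer based on the reasoning so far."


def run_with_thinking_budget(
    reasoning_tokens: Iterable[str],
    answer_fn: Callable[[List[str]], str],
    budget: int,
) -> Tuple[str, str, bool]:
    """Consume reasoning tokens until the model emits </think> or the budget runs out.

    Returns (thinking_text, answer, truncated). `answer_fn` stands in for the second
    decoding phase that writes the final answer conditioned on the kept reasoning.
    """
    kept: List[str] = []
    truncated = False
    for tok in reasoning_tokens:
        if tok == "</think>":  # model finished reasoning within budget
            break
        if len(kept) >= budget:  # budget exhausted: force the reasoning to end
            kept.append(STOP_THINKING)
            truncated = True
            break
        kept.append(tok)
    thinking = "<think>" + " ".join(kept) + "</think>"
    return thinking, answer_fn(kept), truncated


def fake_reasoning_stream():
    """Hypothetical stand-in for a model streaming reasoning tokens."""
    yield from ["17*3", "=", "51", ";", "51+4", "=", "55", "</think>"]


def fake_answer(kept: List[str]) -> str:
    """Hypothetical answer step: report the last number reached in the reasoning."""
    return next((t for t in reversed(kept) if t.isdigit()), "unknown")


if __name__ == "__main__":
    for budget in (100, 3):
        print(budget, run_with_thinking_budget(fake_reasoning_stream(), fake_answer, budget))
```

With a generous budget the full chain completes; with a tight budget the reasoning is cut short and the answer reflects only the partial work, which is exactly the latency-versus-depth trade-off described above.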

Three main variants of Qwen3-Max have been mentioned by the Qwen team: Qwen3-Max-Base, the raw pretrained MoE model; Qwen3-Max-Instruct, which is instruction-tuned for general-purpose interactions (this is the version available via API); and Qwen3-Max-Thinking, a special reasoning-optimized version still in development. Qwen3-Max-Instruct is the primary model for most users – it’s already deployed on Alibaba Cloud and accessible through Qwen Chat – whereas Qwen3-Max-Thinking is an upcoming expert model that leverages additional techniques (like an internal code interpreter) for maximal problem-solving capability.

Both the Instruct and Thinking variants share the same underlying architecture, but the Thinking variant is being trained to push the envelope on deep reasoning tasks (more on this in the next section). Importantly, all Qwen3-Max APIs are OpenAI-compatible: developers can use the standard OpenAI Chat Completion API format to query the model, making integration straightforward for those familiar with OpenAI’s ecosystem. In summary, Qwen3-Max’s architecture combines ultra-large-scale MoE with innovative training methods and a flexible reasoning framework, resulting in a model that is at once massively powerful and pragmatically engineered for enterprise AI workloads.

Performance Characteristics and Reasoning Depth

Despite its “max” scale, Qwen3-Max is not just about size – it delivers strong performance across a broad range of challenging tasks, often rivaling or exceeding other frontier models.

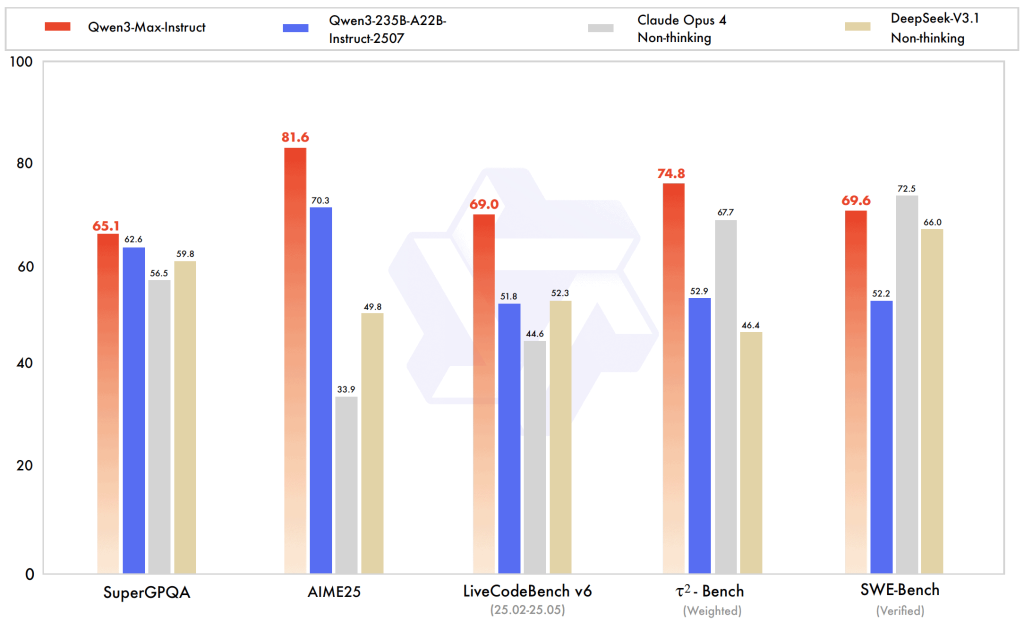

On general knowledge and language understanding benchmarks, the instruct-tuned Qwen3-Max ranks among the top systems globally. For instance, the preview version achieved a top-three spot on the LMArena Text leaderboard, placing just behind the latest models from OpenAI and Anthropic. This suggests that Qwen3-Max-Instruct can handle diverse queries, complex instructions, and multi-turn dialogues at a level comparable to the best chatbots available. Early internal tests indicated it reaches around 79% accuracy on broad instruction-following evaluations, which is in line with other state-of-the-art general-purpose LLMs (within a few points of the absolute leader).

In practice, users report that Qwen3’s answers are factual and to-the-point, if sometimes less “chatty” or creative than those of more entertainment-oriented models. This trade-off reflects Qwen3-Max’s design focus on enterprise use cases – it favors precision and clarity over storytelling, making it extremely reliable for factual and technical prompts.

Reasoning and problem-solving are particular strengths of Qwen3-Max. Thanks to its hybrid mode design, the model can perform complex multi-step reasoning within a single conversation turn, effectively generating and following a chain-of-thought internally.

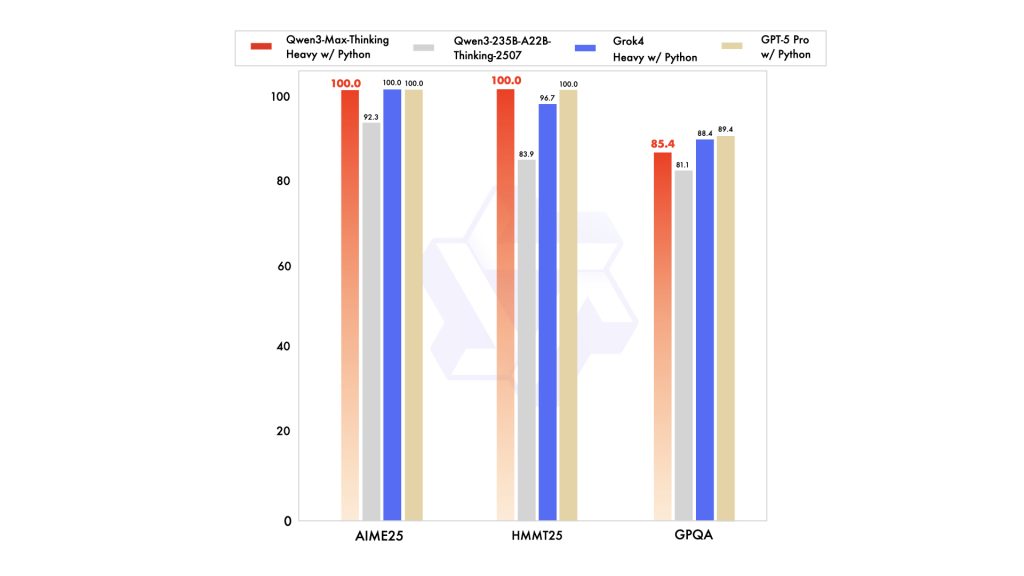

Even the standard Instruct model, without the specialized reasoning training of the Thinking variant, has shown an ability to “shift” into a more methodical reasoning style when faced with hard problems. For example, on Arena-Hard, a benchmark of challenging real-world user prompts drawn from Chatbot Arena, Qwen3-Max scored 86.1, well above many competitors and even outperforming a fast version of Anthropic’s Claude on that test. This suggests that Qwen3-Max can parse intricate problems and produce step-by-step solutions, a promising sign for its forthcoming Thinking variant.

The specialized Qwen3-Max-Thinking model, which is under active training, is slated to push reasoning performance even further. Early results are striking: by incorporating a code interpreter and using parallel compute during inference, Qwen3-Max-Thinking achieved perfect 100/100 scores on challenging math contests like AIME-25 and HMMT (Harvard-MIT Mathematics Tournament).

Hitting 100% on these expert-level math benchmarks is virtually unheard of, and even top-tier models like GPT-5 Pro only recently matched that level. It suggests that with tool use (e.g. writing and executing code for calculation) and extended “thinking time,” Qwen3-Max can solve extremely complex competition problems with perfect accuracy on these benchmarks.

On coding and software engineering tasks, Qwen3-Max is among the world’s strongest models. On SWE-Bench Verified (a human-validated set of real GitHub issues from open-source Python repositories, which the model must resolve by editing the codebase), Qwen3-Max-Instruct scores 69.6, placing it firmly among the top-performing coding assistants.

This is comparable to or better than most open-source models and only a few points shy of the best proprietary models as of late 2025. Qwen3-Max has demonstrated an ability to generate correct, well-structured code for difficult problems, even handling multi-file or multi-step coding tasks.

Alibaba reports that on LiveCodeBench, Qwen3-Max produced correct solutions more often than open models designed for coding. It even serves as the default model in AnyCoder, a popular AI coding tool, underscoring its reliability in real development workflows. The model can follow detailed instructions within code prompts (e.g. “don’t modify these specific functions”) and output code in various languages (Python, JavaScript, Rust, etc.) with appropriate syntax and structure. While certain proprietary models still hold a narrow lead in absolute coding benchmark numbers, Qwen3-Max’s combination of raw coding ability and a huge context window makes it exceptionally powerful for large-scale programming tasks.

Developers can feed entire project files or extensive API documentation into Qwen3-Max and get coherent code or refactoring suggestions that take the full context into account.

Another area where Qwen3-Max excels is tool use and agentic behavior. The model was specifically tuned to interact with external tools (APIs, code execution, web browsing, etc.) in a reliable way. In the Tau2-Bench evaluation – which tests how well an AI can plan steps and call tools to solve user goals – Qwen3-Max-Instruct delivered a breakthrough score of 74.8, outperforming other frontier models on this metric.

This indicates an unusually high proficiency in multi-step tasks like retrieving information, using calculators, or following procedural instructions. Qwen3-Max can not only generate a plan (a sequence of actions) but actually produce the correct tool invocation syntax or code to carry out each step. For example, it might decide to call a weather API if asked about current conditions in a city, or execute a small Python snippet if asked to perform a calculation. The high Tau2-Bench result aligns with user feedback that Qwen’s agentic outputs are structured and reliable, avoiding random mistakes in tool formatting. Beyond plain conversation, Qwen3-Max was clearly built to serve as a decision-making backend system, capable of handling workflows with minimal human intervention.

This agentic strength will further increase with the Thinking variant, which explicitly integrates a Python interpreter for solving tasks – essentially allowing the model to write and run code as part of its reasoning process.
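Through an OpenAI-compatible API, a tool call typically arrives as a function name plus a JSON-encoded arguments string, and the application is responsible for executing it and returning the result as a `tool` message before calling the model again. The sketch below shows that dispatch step in isolation. The `get_weather` and `add_numbers` tools are hypothetical, and the message dicts mirror the OpenAI chat-completions tool-calling shape rather than any Qwen-specific format.

```python
import json
from typing import Any, Callable, Dict, List


def get_weather(city: str) -> Dict[str, Any]:
    """Hypothetical tool; a real one would call a weather service."""
    return {"city": city, "forecast": "sunny", "high_c": 24}


def add_numbers(a: float, b: float) -> Dict[str, Any]:
    """Hypothetical calculator tool."""
    return {"result": a + b}


# Registry of tools the application is willing to execute on the model's behalf.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_weather": get_weather,
    "add_numbers": add_numbers,
}


def dispatch_tool_calls(assistant_message: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Execute every tool call in an OpenAI-style assistant message.

    Returns `tool` role messages to append to the conversation. Failures are
    reported back to the model as JSON errors instead of raising, so it can recover.
    """
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        try:
            args = json.loads(call["function"]["arguments"] or "{}")
            output = TOOLS[name](**args)
        except Exception as exc:  # unknown tool, malformed JSON, bad arguments, tool failure
            output = {"error": f"{type(exc).__name__}: {exc}"}
        results.append(
            {"role": "tool", "tool_call_id": call["id"], "content": json.dumps(output)}
        )
    return results
```

Returning errors as tool output rather than crashing is a design choice: it lets a model with strong tool-use skills notice a bad call and retry with corrected arguments.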

In summary, Qwen3-Max exhibits state-of-the-art performance in areas critical for enterprise AI: from understanding complex instructions and generating code, to performing logical reasoning and orchestrating multi-step tool usage. It may not tell the fanciest jokes or stories (its style is intentionally “no-nonsense”), but when it comes to accurate, goal-directed intelligence, Qwen3-Max is among the very best.

Empirical evaluations across diverse benchmarks (knowledge Q&A, math problem solving, coding challenges, etc.) show Qwen3-Max to be highly competitive against both open and proprietary peers. For developers, this means Qwen3-Max can be trusted to tackle hard technical problems and produce solutions that are correct, well-structured, and ready for integration into real applications.

Multilingual and Long-Context Capabilities

One of the standout features of Qwen3-Max (and the Qwen3 family in general) is its extensive multilingual support. The model was trained on a corpus covering 119 languages and dialects, a dramatic expansion from the 29 languages supported by the previous Qwen2.5 generation.

It has strong capabilities in major languages like English, Chinese (simplified and traditional), Arabic, French, Spanish, and Russian, as well as many lower-resource languages. Beyond natural languages, Qwen3 has also been trained on large amounts of source code and can follow instructions or generate output in Python, Java, C++, and more. The Qwen3 series has achieved state-of-the-art results on multilingual benchmarks, demonstrating both cross-lingual understanding and translation abilities.

For example, the Qwen3 embedding models rank highly on the multilingual leaderboard of MTEB (Massive Text Embedding Benchmark) for cross-lingual semantic search, and Qwen3-Max itself can accept a query in one language and respond in another, or translate technical content reliably. This makes Qwen3-Max a compelling choice for global enterprises that operate in multiple languages: the model can power chatbots or analytics that seamlessly switch between English, Arabic, Chinese, etc. without losing context or accuracy. By open-sourcing smaller multilingual Qwen3 models, the team also ensured that developers can fine-tune or evaluate the multilingual capabilities.

Compared to monolingual models, Qwen3-Max stands out for its broad language coverage combined with deep knowledge in each, enabling use cases like multilingual customer support, cross-lingual document analysis, and international research collaboration.

The other marquee capability of Qwen3-Max is its support for ultra-long context lengths. Thanks to specialized position encoding techniques, Qwen3-Max-Preview was demonstrated with an input context window of about 256–258 thousand tokens, and in principle it can be extended up to 1 million tokens (nearly the length of a few books) with appropriate scaling.

This is among the largest context windows of any LLM in 2025, putting Qwen3-Max in a rare class of models that can essentially “read” an entire novel or codebase in one go. By comparison, many widely used models, such as GPT-4 and PaLM 2, top out at 128K tokens or less. In practical terms, a ~256K-token window means Qwen3-Max can ingest hundreds of pages of text (for example, an entire legal contract, a full technical manual, or months of chat history) and still generate coherent responses that reference any part of that input.

Enterprise users can leverage this to analyze long documents without chunking, or to maintain conversation state over many interactive turns. In fact, Alibaba reports that Qwen3-Next (a related model) supports 256K natively and can reach 1M tokens with modifications, and Qwen3-Max uses a similar approach (hinted by their training on 1M-token sequences).

Under the hood, Qwen3-Max employs rotary position embedding (RoPE) scaling techniques to handle long contexts. The Qwen team has validated methods like YaRN (Yet another RoPE Extension) to successfully push context lengths to 131k and beyond in their open models.

For developers using Qwen3 models locally, this involves either modifying the model config (adding a rope_scaling factor) or using inference frameworks that support extended context. Notably, the Alibaba Model Studio API supports dynamic long-context handling by default, so if you query Qwen3-Max via the official API, it will automatically manage position encodings for large inputs.
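For the open Qwen3 checkpoints, the model cards describe enabling YaRN by adding a `rope_scaling` entry to the model's `config.json`, with the scaling factor set to the target length divided by the native 32,768-token window (factor 4.0 for 131,072 tokens). The helper below writes such an entry into a config dict. The key names follow the Qwen3 model cards as we understand them, the round-up-to-one-decimal rule is our own choice, and none of this applies to Qwen3-Max itself, whose weights are not distributed.

```python
import math
from typing import Any, Dict

NATIVE_CONTEXT = 32_768  # native context window of the open Qwen3 checkpoints


def add_yarn_scaling(config: Dict[str, Any], target_context: int) -> Dict[str, Any]:
    """Return a copy of a Hugging Face-style config with static YaRN RoPE scaling enabled.

    The factor is target / native, rounded up to one decimal (131072 -> 4.0).
    The input dict is left unchanged.
    """
    if target_context <= NATIVE_CONTEXT:
        # No scaling needed; static YaRN can slightly degrade quality on short inputs.
        return dict(config)
    factor = math.ceil(target_context / NATIVE_CONTEXT * 10) / 10
    updated = dict(config)
    updated["rope_scaling"] = {
        "rope_type": "yarn",
        "factor": factor,
        "original_max_position_embeddings": NATIVE_CONTEXT,
    }
    return updated
```

In practice you would load `config.json` with `json.load`, pass it through this function, and write it back before loading the model; serving frameworks such as vLLM accept an equivalent setting at launch instead.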

The ability to scale context dynamically is a key enabler for retrieval-augmented generation and knowledge-intensive applications (we’ll discuss those in the use cases). Additionally, Qwen3-Max employs a “context caching” mechanism to improve efficiency on very long dialogues. This feature allows the model to avoid re-processing the entire history from scratch on each new query. Instead, it can reuse internal states for the older portions of the context, significantly speeding up inference when handling multi-turn conversations or long documents. In other words, if you have a 200k-token conversation and you add one more question, Qwen doesn’t need to re-read all 200k tokens every time – it can cache and reuse the previous computation results.

This is crucial for latency and cost management, given that naive processing of such long inputs would be extremely slow and expensive.
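The internals of Qwen3-Max's context cache are not public, so the toy model below only illustrates the general prefix-caching idea: if a new request begins with a prefix that has already been processed, reuse the stored state and process only the new suffix. Here the "state" is a running hash standing in for the real per-token key/value tensors, and `PrefixCache` is a hypothetical class, not an Alibaba API.

```python
import hashlib
from typing import Dict, List, Tuple


class PrefixCache:
    """Toy prefix cache mapping a token prefix to the 'state' computed for it.

    Storing every prefix as a tuple is quadratic in memory; real systems store
    KV blocks instead. This is only meant to show where work is saved.
    """

    def __init__(self) -> None:
        self._states: Dict[Tuple[str, ...], str] = {}
        self.tokens_processed = 0  # counts how much "model work" was actually done

    def _step(self, state: str, token: str) -> str:
        # Stand-in for running one token through the model and extending the KV cache.
        self.tokens_processed += 1
        return hashlib.sha256((state + "|" + token).encode()).hexdigest()

    def encode(self, tokens: List[str]) -> str:
        # Find the longest prefix of this request that is already cached.
        start, state = 0, ""
        for end in range(len(tokens), 0, -1):
            cached = self._states.get(tuple(tokens[:end]))
            if cached is not None:
                start, state = end, cached
                break
        # Process only the uncached suffix, caching each new prefix along the way.
        for i in range(start, len(tokens)):
            state = self._step(state, tokens[i])
            self._states[tuple(tokens[: i + 1])] = state
        return state


if __name__ == "__main__":
    cache = PrefixCache()
    history = [f"t{i}" for i in range(1000)]
    cache.encode(history)
    cache.encode(history + ["one", "more", "question"])
    print(cache.tokens_processed)  # the second call only processed the 3 new tokens
```

The final state is identical to what a cold run over the full input would produce; only the amount of recomputation changes, which is where the latency and cost savings come from.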

Overall, Qwen3-Max’s long-context and multilingual capabilities open up use cases that are infeasible for typical LLMs. You can feed it a large bilingual corpus and ask detailed questions that require cross-referencing across languages. You can input a sizeable code repository (tens of thousands of lines) and get a summary or find specific issues. You can maintain an ongoing dialog with an AI agent that retains context over a very long interaction.

These abilities make Qwen3-Max particularly suitable for enterprise knowledge management, long-form content generation, and tasks like legal or scientific analysis that involve very large texts. As one analysis put it, “Alibaba’s trillion-parameter flagship model supports a native 258K context window (extendable to 1 million tokens) and delivers blazing-fast performance…”. This combination of capacity and speed at scale is a differentiator for Qwen3-Max in the LLM landscape.

Developer Features and Integration

Qwen3-Max is built with developers in mind, offering a number of features and tools to facilitate integration into real-world systems. First and foremost, the model’s API is fully compatible with OpenAI’s API schema. This means that if you have code that currently calls OpenAI’s chat/completions endpoint, you can switch to Qwen3-Max by simply changing the endpoint URL and API key. The request/response format (with roles like “system”, “user”, “assistant” and parameters like temperature) is the same.

For example, to query Qwen3-Max via Alibaba Cloud, you use a base URL like https://dashscope-intl.aliyuncs.com/compatible-mode/v1 with your API key, and specify model: "qwen3-max" in the payload. The ease of this integration lowers the barrier for developers to experiment with Qwen3-Max or even swap it into existing applications as a drop-in replacement for an OpenAI model. In addition, Alibaba provides a web interface called Qwen Chat (chat.qwen.ai) where developers can interact with Qwen3-Max-Instruct directly for testing and prototyping.
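A minimal sketch of that drop-in swap using the official `openai` Python SDK. The base URL and model name come from the text above; reading the key from a `DASHSCOPE_API_KEY` environment variable and the system prompt are our own assumptions. The request arguments are built in a separate function so they can be inspected without network access.

```python
import os
from typing import Any, Dict

BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen3-max"


def build_request(question: str, temperature: float = 0.2) -> Dict[str, Any]:
    """Assemble standard OpenAI chat-completions arguments for Qwen3-Max."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "You are a precise technical assistant."},
            {"role": "user", "content": question},
        ],
        "temperature": temperature,
    }


def ask_qwen(question: str) -> str:
    from openai import OpenAI  # pip install openai

    # Same client class as for OpenAI; only the API key and base_url change.
    client = OpenAI(api_key=os.environ["DASHSCOPE_API_KEY"], base_url=BASE_URL)
    response = client.chat.completions.create(**build_request(question))
    return response.choices[0].message.content


if __name__ == "__main__" and "DASHSCOPE_API_KEY" in os.environ:
    print(ask_qwen("Explain the difference between a process and a thread in two sentences."))
```

Because nothing in `ask_qwen` is Qwen-specific apart from the two constants, an existing OpenAI integration can usually be pointed at Qwen3-Max through configuration alone.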

Beyond the API itself, Alibaba has released a suite of open-source tools around Qwen3 to support various developer needs. One such tool is Qwen-Agent, an SDK for building AI agents with Qwen3 models. Qwen-Agent comes with built-in templates and parsers for tool usage, so that developers can easily define new tools and have the model invoke them. Instead of writing complex prompt templates for each tool, you can configure an Assistant agent instance with a list of functions (tools) and Qwen-Agent will handle the prompting format internally.

The library supports both MCP (Model Context Protocol) servers, which are typically launched as local command-line processes that expose tools to the model, and built-in tools such as a code interpreter or web fetcher. This greatly reduces the complexity of implementing AI agent workflows. For example, with a few lines of Python you can set up a Qwen agent that can tell the time or fetch web content using provided tool definitions. When you run the agent, Qwen3-Max will output a reasoning trace and the tool commands to execute; Qwen-Agent parses these and executes the actual tool, then feeds the result back into the model.

This cycle continues until the task is complete. By releasing Qwen-Agent, Alibaba has enabled developers to build complex autonomous agents (for IT automation, data processing, etc.) on top of Qwen3 with minimal effort.
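The sketch below is adapted from the time-and-fetch agent example in the Qwen3 README, pointed at Qwen3-Max through the OpenAI-compatible endpoint instead of a local model server. It assumes `qwen-agent` is installed with its MCP and code-interpreter extras and that `uvx` is available to launch the `mcp-server-time` and `mcp-server-fetch` servers. Using DashScope as `model_server` and the `DASHSCOPE_API_KEY` variable are our assumptions; check the Qwen-Agent documentation for the currently recommended configuration.

```python
import os
from typing import Any, Dict, List


def build_agent_config(api_key: str) -> Dict[str, Any]:
    """Keyword arguments for qwen_agent.agents.Assistant (LLM settings plus tools)."""
    llm_cfg = {
        "model": "qwen3-max",
        "model_server": "https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
        "api_key": api_key,
    }
    tools: List[Any] = [
        {
            "mcpServers": {
                # Each MCP server is started as a local subprocess via the given command.
                "time": {"command": "uvx", "args": ["mcp-server-time", "--local-timezone=Asia/Shanghai"]},
                "fetch": {"command": "uvx", "args": ["mcp-server-fetch"]},
            }
        },
        "code_interpreter",  # built-in Qwen-Agent tool
    ]
    return {"llm": llm_cfg, "function_list": tools}


def run_agent(question: str) -> List[Any]:
    from qwen_agent.agents import Assistant  # pip install -U "qwen-agent[code_interpreter,mcp]"

    bot = Assistant(**build_agent_config(os.environ["DASHSCOPE_API_KEY"]))
    responses: List[Any] = []
    # bot.run streams the growing response list (tool calls, tool results, final answer).
    for responses in bot.run(messages=[{"role": "user", "content": question}]):
        pass
    return responses


if __name__ == "__main__" and "DASHSCOPE_API_KEY" in os.environ:
    for message in run_agent("What time is it in Shanghai right now?"):
        print(message)
```

The agent loop itself (deciding on a tool, calling it, feeding the result back) happens inside `bot.run`; the application only declares which tools exist.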

Another developer-friendly feature of Qwen3 is the ability to toggle the “thinking mode” in open-source deployments. When using Qwen3 models locally (e.g. Qwen3-32B on Hugging Face Transformers), you can enable the output of the model’s internal chain-of-thought by setting a flag in the prompt or generation call.

The model will then produce a special <think> ... </think> block containing its reasoning steps, followed by the final answer. This is extremely useful for debugging and transparency: you can see why the model arrived at a certain answer, or detect where its reasoning went wrong. In the unified Qwen3 architecture, this thinking output is seamlessly integrated: you don’t need a separate model, just a special token sequence to trigger it. In Hugging Face Transformers, the Qwen3 chat template exposes an enable_thinking argument to tokenizer.apply_chat_template (True by default) that automates this formatting.

For developers, this means you can programmatically switch between quick responses and thorough reasoning traces as needed. It’s worth noting that in multi-turn conversations, you should omit the <think> content from the history you pass back to the model, otherwise you’d be feeding the chain-of-thought back in (which isn’t necessary). The Qwen3 chat template provided in the open models already handles this by only keeping the final answer in the conversation history.
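A sketch of both points for an open Qwen3 checkpoint with Hugging Face Transformers. The `generate` function follows the pattern in the Qwen3 model cards: pass `enable_thinking` to `apply_chat_template`, then split the output at the `</think>` token (id 151668 in the Qwen3 vocabulary, per those cards). The small default checkpoint is our choice so it runs on modest hardware (Qwen3-32B works the same way), `device_map="auto"` requires the `accelerate` package, and the regex-based `strip_thinking` helper for cleaning history is our own.

```python
import re
from typing import Dict, List, Tuple

THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", flags=re.DOTALL)
THINK_END_ID = 151668  # id of "</think>" in the Qwen3 tokenizer, per the Qwen3 model cards


def strip_thinking(text: str) -> str:
    """Drop <think>...</think> blocks, keeping only the final answer."""
    return THINK_BLOCK.sub("", text).strip()


def add_assistant_turn(history: List[Dict[str, str]], raw_reply: str) -> List[Dict[str, str]]:
    """Append a reply to the chat history without its chain-of-thought."""
    history.append({"role": "assistant", "content": strip_thinking(raw_reply)})
    return history


def generate(prompt: str, model_name: str = "Qwen/Qwen3-0.6B", thinking: bool = True) -> Tuple[str, str]:
    """Return (thinking_text, answer) from a local open Qwen3 checkpoint."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", device_map="auto")
    text = tokenizer.apply_chat_template(
        [{"role": "user", "content": prompt}],
        tokenize=False,
        add_generation_prompt=True,
        enable_thinking=thinking,  # False asks the template for a direct answer
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    new_ids = model.generate(**inputs, max_new_tokens=2048)[0][inputs.input_ids.shape[1]:].tolist()
    try:
        split = len(new_ids) - new_ids[::-1].index(THINK_END_ID)  # just after the last </think>
    except ValueError:
        split = 0  # no reasoning block in the output
    thinking_text = tokenizer.decode(new_ids[:split], skip_special_tokens=True).strip("\n")
    answer = tokenizer.decode(new_ids[split:], skip_special_tokens=True).strip("\n")
    return thinking_text, answer
```

Only `answer` (or `strip_thinking` applied to the raw reply) should go back into the history via `add_assistant_turn`, so later turns are not conditioned on earlier chains of thought.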

Structured output and formatting control are also areas where Qwen3-Max shines for developers. The model has been aligned to follow instructions about format strictly, which is crucial for enterprise applications that require e.g. JSON outputs or SQL queries as answers. The Qwen3 documentation suggests including specific format instructions in prompts to standardize outputs. For instance, for a math problem you might say: “Please reason step by step, and put your final answer within \boxed{}.” so the model will output the answer in a LaTeX box.

For multiple-choice questions, you can prompt: “Please show your choice in the answer field with only the choice letter, e.g., "answer": "C".” to ensure the model responds in a JSON with an answer key. Qwen3-Max is quite good at adhering to such instructions, producing clean and structured answers that can be directly parsed by programs. The model also has an emphasis on JSON-like formats for tool usage and outputs. Many of its internal tools (and agent interactions) use JSON structures, so Qwen is comfortable reading and writing JSON. This is beneficial for developers who want to consume the model’s output in their software pipelines – the answers can be made machine-readable with minimal prompt engineering.

In fact, one of the primary use cases highlighted for Qwen3-Max is “structured data processing and JSON generation”, indicating it’s tailored to tasks like turning unstructured text into structured outputs or filling forms and databases from natural language input.
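Even with format instructions, application code should verify what comes back. Below is a hedged sketch of parsers for the two prompt formats quoted above: the last `\boxed{...}` in a math answer (with nested braces handled) and the `"answer"` field of a multiple-choice reply. The function names and the fallback behavior (returning `None` when the expected format is missing) are our own design choices.

```python
import json
import re
from typing import Optional

ANSWER_FIELD = re.compile(r'"answer"\s*:\s*"([A-Z])"')


def extract_boxed(text: str) -> Optional[str]:
    """Return the content of the last \\boxed{...}, matching nested braces, or None."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    for j in range(i, len(text)):
        if text[j] == "{":
            depth += 1
        elif text[j] == "}":
            depth -= 1
            if depth == 0:
                return text[i:j].strip()
    return None  # unbalanced braces


def extract_choice(text: str, valid: str = "ABCD") -> Optional[str]:
    """Return the choice letter from a JSON object, or from a bare '"answer": "X"' fragment."""
    start, end = text.find("{"), text.rfind("}")
    if start != -1 and end > start:
        try:
            letter = json.loads(text[start : end + 1]).get("answer")
            if isinstance(letter, str) and len(letter) == 1 and letter in valid:
                return letter
        except (json.JSONDecodeError, AttributeError):
            pass  # not a JSON object; fall back to the regex below
    m = ANSWER_FIELD.search(text)
    return m.group(1) if m and m.group(1) in valid else None
```

Returning `None` rather than guessing lets the caller decide whether to re-prompt, which keeps malformed outputs from silently entering downstream systems.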

Finally, Alibaba provides smaller Qwen3 models (0.6B to 32B dense, plus the 30B-A3B and 235B-A22B MoE models) under the Apache 2.0 license, which developers can use for local testing, fine-tuning, or as fallbacks. While Qwen3-Max itself isn’t open-source, you can experiment with the open Qwen3-14B or Qwen3-32B to get a feel for the model’s behavior, then call Qwen3-Max via API when you need the maximum power.

The Qwen3-Embedding and Qwen3-Reranker models (ranging from 0.6B to 8B) are also available for building RAG (retrieval-augmented generation) systems; these models are specialized in producing vector embeddings and reranking search results. Using them in conjunction with Qwen3-Max (for the final answer generation) allows developers to build an end-to-end Qwen-powered pipeline, all within the same ecosystem. Because the embedding and reranker models are built on Qwen3 foundation models, the components share a common basis, which may help overall pipeline quality.
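As a sketch of the retrieval half of such a pipeline, the code below embeds a query and documents with an open Qwen3-Embedding checkpoint through `sentence-transformers`, following the usage on the Qwen3-Embedding model card as we understand it (queries encoded with `prompt_name="query"`, documents encoded as-is), and ranks documents by cosine similarity. The pure-Python `top_k_by_cosine` helper is our own and works with vectors from any embedding model; the reranker stage is omitted.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k_by_cosine(query_vec, doc_vecs, k: int = 3) -> List[Tuple[int, float]]:
    """Return (doc_index, score) pairs for the k documents most similar to the query."""
    scored = [(i, cosine(query_vec, d)) for i, d in enumerate(doc_vecs)]
    return sorted(scored, key=lambda p: p[1], reverse=True)[:k]


def retrieve(query: str, documents: List[str], k: int = 3) -> List[Tuple[str, float]]:
    from sentence_transformers import SentenceTransformer  # pip install sentence-transformers

    # Loaded per call for brevity; a real service would load the model once.
    model = SentenceTransformer("Qwen/Qwen3-Embedding-0.6B")
    q = model.encode([query], prompt_name="query")[0]  # queries get an instruction prompt
    d = model.encode(documents)                         # documents are embedded as-is
    return [(documents[i], s) for i, s in top_k_by_cosine(q.tolist(), d.tolist(), k)]
```

The top-ranked passages would then be optionally reranked with Qwen3-Reranker and passed to Qwen3-Max as context for the final answer.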

Summing up, Qwen3-Max offers extensive developer support: from compatibility and integration (OpenAI-like API) to tooling (agent frameworks, open smaller models) and special features (controllable reasoning mode, structured outputs). This makes it not just a powerful model, but one that is usable and adaptable in an enterprise development context.

Enterprise Use Cases

Qwen3-Max is particularly suited for advanced AI applications in enterprise and research settings. Below we highlight several key use case categories and how Qwen3-Max’s capabilities address them:

AI Agents and Autonomous Tool-Use

One of the most exciting applications of Qwen3-Max is as the brain of AI agents that can plan, reason, and act to accomplish goals. With its strong tool-use proficiency, Qwen3-Max can serve as the central reasoning module for an agent that interacts with external systems. For example, consider an IT helpdesk agent that can take a user request, decide it needs to run diagnostics, execute some scripts (via a tool), then return the result to the user. Qwen3-Max has the planning ability to break down the task and the knowledge to use tools correctly (thanks to training and fine-tuning on agent tasks).

The model’s “agentic” skills enable it to operate with fewer human prompts – it can proactively determine steps towards a goal and carry them out independently. Enterprises can leverage this to automate complex workflows. For instance, a marketing analytics agent might automatically fetch data from a database, run an analysis, and generate a report, all through Qwen3-Max orchestrating those steps. The availability of Qwen-Agent SDK further streamlines building such agents.

Developers can define a suite of company-specific tools (APIs, database queries, etc.), and Qwen3-Max will integrate those into its reasoning. Compared to simpler chatbots, an agent powered by Qwen3-Max can handle multi-step transactions (like “Book me a flight using our internal travel API, then email me the itinerary”) with high success rates. This opens possibilities for backend automation in customer service, operations, and IT. Qwen3-Max’s combination of long context and reasoning means the agent can maintain state over a long session, recall prior instructions, and avoid redundant questions.

Early benchmarks (Tau2-Bench) already show Qwen3-Max outperforming other models in tool calling tasks, which bodes well for real deployments of AI agents that need reliability and correctness in every action.

Retrieval-Augmented Generation (RAG) Systems

In enterprise settings, it’s often crucial to ground LLM outputs in proprietary data – whether that’s internal documents, knowledge bases, or databases. Qwen3-Max is an excellent choice for building Retrieval-Augmented Generation systems, where the model is provided with relevant context retrieved from a data store and then generates an answer.

Qwen3’s ecosystem includes dedicated embedding and reranker models to facilitate this: you can use Qwen3-Embedding models to vectorize documents, Qwen3-Reranker models to refine search results, and then Qwen3-Max to produce the final answer. In fact, as a Medium article notes, “Qwen 3 for RAG is an open-source AI solution designed for Retrieval-Augmented Generation. It combines three main models: embedding models to find relevant documents, reranking models to sort the best results, and a powerful LLM to generate clear, accurate answers. Qwen 3 supports long context, multiple languages, and is easy to use, making it ideal for building smart search and question-answering systems.” With Qwen3-Max’s 258K+ token context window, an enterprise RAG system can feed extremely large retrieved texts (multiple lengthy documents at once) into the model for synthesis.

This means the model can cross-reference information across an entire repository or analyze a full-length scientific paper as context for a question. The multilingual nature of Qwen3-Max also helps in RAG: a user can query in one language while the documents might be in another, and Qwen can bridge that gap by understanding both. A concrete use case is a corporate knowledge assistant: employees ask a question and the system retrieves company policy documents or manuals, then Qwen3-Max reads those and gives a precise, cited answer. Because Qwen3 is adept at structured output, it can even return answers in a structured format (like an answer + source list in JSON).

And since the Qwen3 series emphasizes factual accuracy (with extensive training on knowledge and alignment with human preferences), Qwen3-Max tends to use provided context effectively and reduce hallucinations in its final answers. Companies can thus build high-accuracy QA bots, report generators, or research assistants on their private data by combining vector search (for retrieval) with Qwen3-Max as the generative brain.
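To illustrate the "answer plus source list in JSON" pattern from the corporate knowledge assistant example, here is a minimal sketch of the generation half: it numbers the retrieved passages, asks for a JSON reply that cites them by ID, and drops citations to passages that were never supplied. The prompt wording, the `[S1]`-style IDs, and the reply schema are illustrative assumptions, not a Qwen convention; the prompt would be sent as the user message through the OpenAI-compatible API.

```python
import json
from typing import Dict, List


def build_rag_prompt(question: str, passages: List[Dict[str, str]]) -> str:
    """Number each retrieved passage ([S1], [S2], ...) so the model can cite it by ID."""
    blocks = [f"[S{i}] ({p['title']})\n{p['text']}" for i, p in enumerate(passages, start=1)]
    return (
        "Answer the question using only the sources below.\n\n"
        + "\n\n".join(blocks)
        + f"\n\nQuestion: {question}\n"
        + 'Reply with JSON only, in the form {"answer": "...", "sources": ["S1"]}. '
        + 'If the sources do not contain the answer, set "answer" to "unknown".'
    )


def parse_rag_reply(reply: str, num_passages: int) -> Dict[str, object]:
    """Validate the model's JSON reply and keep only citations to supplied passages."""
    data = json.loads(reply)
    if not isinstance(data, dict) or not isinstance(data.get("answer"), str):
        raise ValueError("reply must be a JSON object with a string 'answer'")
    sources = data.get("sources", [])
    if not isinstance(sources, list):
        sources = []
    valid_ids = {f"S{i}" for i in range(1, num_passages + 1)}
    cited = [s for s in sources if isinstance(s, str) and s in valid_ids]
    return {"answer": data["answer"], "sources": cited}
```

Filtering citations against the IDs actually provided is a cheap guard against the model inventing a source, which matters when answers are shown to employees as "cited".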

Enterprise Automation and Decision Support

Many enterprise applications involve automating decisions or generating structured outputs from textual inputs. Qwen3-Max is well-suited for enterprise automation tasks such as report generation, form filling, and decision support systems. For example, in a finance company, Qwen3-Max could take in a pile of compliance documents (thanks to its long context) and output a summarized compliance report highlighting the key points and decisions needed.

Its ability to produce structured outputs (tables, JSON, XML, etc.) means it can fill the role of generating machine-readable reports or database records from natural language input. One listed use case for Qwen3-Max is “structured data processing and JSON generation”: think of feeding in semi-structured logs or emails and having the model output a standardized JSON record of important fields. This could dramatically speed up back-office workflows like parsing invoices, processing insurance claims (reading claim descriptions and extracting entities), or analyzing survey feedback. Qwen3-Max’s MoE-enhanced capacity may help it capture subtle details in input text, since different experts can specialize in different domains or formats. Additionally, Qwen3-Max can function as a decision support assistant for executives or analysts.

Given its training on a vast corpus including knowledge and reasoning, it can be asked for recommendations or risk analyses (e.g., “Based on this project proposal, what are potential risks and how to mitigate them?”). It won’t have company-specific knowledge unless provided, but it can reason through scenarios in a general way and produce a coherent, structured analysis. Because Qwen3-Max has been aligned with human preferences, it tends to produce helpful and well-organized explanations rather than just raw stream-of-consciousness. In enterprise use, this translates to clearer memos, checklists, or decision outlines that a human can then review. Enterprises can also integrate Qwen3-Max into their internal tools via the OpenAI-compatible API – for instance, into an Excel plugin to provide intelligent formula suggestions or into a CRM system to auto-summarize client interactions.

The bottom line is that Qwen3-Max acts as a force multiplier for knowledge workers: automating the grunt work of reading, summarizing, extracting, and formatting information, so human employees can focus on the final decision or creative tasks.
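As a hedged illustration of the invoice-parsing workflow mentioned in this section (semi-structured text in, standardized JSON record out), the sketch below builds an extraction prompt for a hypothetical invoice schema and validates the model's reply before anything is written to a database. The field names, the type-based schema, and the choice to return a list of problems (suitable for feeding into a retry prompt) rather than raising are all assumptions for illustration.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical target schema: field name -> accepted Python type(s).
INVOICE_SCHEMA: Dict[str, Any] = {
    "invoice_number": str,
    "vendor": str,
    "issue_date": str,  # ISO 8601, e.g. "2025-03-14"
    "total": (int, float),
    "currency": str,
}


def build_extraction_prompt(document: str) -> str:
    fields = ", ".join(INVOICE_SCHEMA)
    return (
        f"Extract the following fields from the invoice below: {fields}.\n"
        "Return a single JSON object with exactly these keys. Use null if a field is missing.\n\n"
        f"Invoice:\n{document}"
    )


def validate_record(reply: str) -> Tuple[Dict[str, Any], List[str]]:
    """Parse the model's reply; return (record, problems). No problems means usable."""
    try:
        record = json.loads(reply)
    except json.JSONDecodeError as exc:
        return {}, [f"invalid JSON: {exc.msg}"]
    if not isinstance(record, dict):
        return {}, ["top-level value is not an object"]
    problems = []
    for field, expected in INVOICE_SCHEMA.items():
        value = record.get(field)
        if value is None:
            problems.append(f"missing field: {field}")
        elif isinstance(value, bool) or not isinstance(value, expected):
            # bool is excluded explicitly because it is a subclass of int.
            problems.append(f"wrong type for {field}: {type(value).__name__}")
    return record, problems
```

If `problems` is non-empty, the list can be appended to a follow-up prompt asking the model to correct its output, keeping a human out of the loop for routine fixes.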

Research and Data Analysis

For research institutions or R&D departments, Qwen3-Max offers capabilities that can transform how data is analyzed and hypotheses are generated. With its strong showing in mathematical and logical reasoning, Qwen3-Max can tackle scientific problem-solving tasks that involve complex calculations or multi-step deductions.

Researchers can use the model to outline proofs, explore computational experiments, or even find errors in reasoning. In mathematics and science domains, Qwen3-Max’s performance is outstanding: in one evaluation it scored around 80.6% on the challenging AIME math competition, far above most models. This indicates it can reliably handle advanced, competition-level math problems. A research team could leverage the Thinking mode (once available) to have Qwen3-Max deeply analyze a problem: because the model can integrate a code interpreter, it might even run small simulations or calculations to aid its reasoning.

For example, in a physics experiment design, the model could be prompted to consider various scenarios and compute expected outcomes using its internal tools. In data analysis, Qwen3-Max can ingest an entire dataset description or raw data (in textual form, or converted to a long text table) and provide insights. Although it’s not a structured database query engine, it can interpret textual data and find patterns described in text. Moreover, the model’s million-token context could allow feeding it large portions of an academic literature review or a full book, and then asking it analytical questions.

This could expedite literature surveys or help in hypothesis generation by connecting dots across papers (something a human might take weeks to do). Qwen3-Max is also multilingual, which in research is useful for reading papers in different languages or collating international findings.
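One way to make a multi-paper, multilingual input easy for the model to navigate is to label each source explicitly and ask for citations by label. The sketch below is an assumption about how you might structure such a prompt, not a Qwen-specific format; the dictionary keys and the citation convention are illustrative.

```python
def build_literature_prompt(papers: list[dict], question: str) -> str:
    """Assemble several papers into one prompt, each under a numbered, labeled header.

    Each paper dict is assumed to have 'title', 'language', and 'text' keys.
    """
    sections = []
    for i, paper in enumerate(papers, start=1):
        sections.append(
            f"### Source [{i}]: {paper['title']} (language: {paper['language']})\n"
            f"{paper['text'].strip()}"
        )
    instructions = (
        "The following are excerpts from different papers. "
        "Cite sources by their [number] when you use them, "
        "and answer in English even if a source is in another language."
    )
    return "\n\n".join([instructions, *sections, f"Question: {question}"])


prompt = build_literature_prompt(
    [
        {"title": "Paper A", "language": "English", "text": "We find X."},
        {"title": "Papier B", "language": "German", "text": "Wir zeigen Y."},
    ],
    "What do these papers agree on?",
)
print(prompt)
```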

Another research use case is code-assisted data analysis: Qwen3-Max can generate code to analyze data and then explain the results. For instance, a scientist could ask, “Given this data (attached CSV content), write a Python script to calculate the correlation and summarize the findings.” Qwen3-Max could produce the script (it’s a competent coder) and also describe in words what the correlation implies.

This marries its coding and reasoning strengths. In fields like bioinformatics or finance where both domain knowledge and programming are needed, a tool like Qwen3-Max is extremely valuable. It’s essentially a research assistant that can both compute and think, at scale. One current limitation is that Qwen3-Max itself is not multimodal (text-only), so for image or audio analysis, one would need the separate Qwen3-VL or Qwen3-Omni models. However, if data (like an image’s description or audio transcript) is converted to text descriptions, Qwen3-Max can work with those.

In summary, Qwen3-Max can significantly accelerate research tasks by taking over time-consuming analysis and providing logically reasoned outputs that experts can then verify or build upon.

Code Generation and System Design Reasoning

Software engineering stands to benefit greatly from Qwen3-Max’s capabilities. We’ve touched on its raw code generation skill, but beyond writing code, Qwen3-Max can assist in system design and architecture reasoning.

Developers can discuss high-level design ideas with the model, using it as a sounding board that has ingested vast amounts of software engineering knowledge and best practices. For example, one could prompt Qwen3-Max: “I need to design a scalable microservices architecture for an e-commerce platform. Help me outline the components and their interactions.” The model can produce an organized design proposal, perhaps even suggesting APIs, databases, and considerations for reliability (leveraging the knowledge it has from training on technical content).

Because Qwen3-Max is instruction-tuned and aligned, it often provides reasoned justifications for its design decisions, not just bullet points. It might say, for instance, “Use event-driven communication between Service A and Service B to decouple their dependencies, which fits CQRS-style patterns for scalability.” This can greatly help less-experienced engineers, or experienced architects who want a second opinion. Qwen3-Max’s long context window lets it take into account a lengthy project description or a set of requirements (even a full specification document) and incorporate all of that context into the design.

On the coding side, Qwen3-Max can be integrated into development workflows for code review, documentation, and refactoring. Its ability to handle huge context windows means you could feed in an entire code file (or multiple files) and ask the model to explain what the code is doing, or to find potential bugs. Qwen3-Max will read through it and provide a detailed explanation or identify problematic sections, since it can keep track of all the functions and their relationships. This is far more effective than small-context code models that might miss cross-file issues.
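A simple way to feed several files at once is to concatenate them with explicit path markers so the model can refer to each file by name. This is a minimal sketch; the delimiter format and the character budget are assumptions rather than anything Qwen requires.

```python
from pathlib import Path


def pack_files_for_review(paths: list[str], max_chars: int = 400_000) -> str:
    """Concatenate source files into one prompt, each wrapped in path markers.

    max_chars is a crude size guard (an assumption); count tokens with the
    Qwen tokenizer if you need exact limits.
    """
    parts = []
    total = 0
    for p in paths:
        text = Path(p).read_text(encoding="utf-8")
        block = f"=== FILE: {p} ===\n{text}\n=== END FILE: {p} ==="
        if total + len(block) > max_chars:
            raise ValueError(f"Adding {p} would exceed the {max_chars}-character budget")
        parts.append(block)
        total += len(block)
    return "\n\n".join(parts)
```

The packed string would then be followed by the actual request, such as “Explain how these modules interact and point out likely bugs.”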

Qwen3-Max’s training on reasoning also helps in debugging scenarios – it can logically trace through what a piece of code should do versus what it’s actually doing, potentially spotting inconsistencies. The model’s proficiency in structured output can be used to generate documentation in a consistent format (for instance, outputting a JSON or markdown summary of each function in a codebase). In planning and agile processes, Qwen3-Max can assist in writing user stories or test cases from high-level descriptions.

And for system troubleshooting, it can analyze logs (given as text input) and hypothesize causes of failures. All these tasks benefit from Qwen3-Max’s large context (to see the whole picture), reasoning (to connect cause and effect), and knowledge base (to recall known error patterns or solutions). Some early users even reported creative uses like generating an entire small game or a complex algorithm from a single prompt; Qwen3-Max is capable of multi-step code generation that shows creativity within the bounds of logic (e.g., generating the code for a voxel-based 3D garden simulation).
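Even with a large context window, raw logs are often mostly noise. A hedged pre-filtering sketch: keep the lines matching an error pattern plus a few neighbours, then send only that excerpt with your question. The regular expression and window size are assumptions you would tune for your log format.

```python
import re

# Case-sensitive markers of interest; adjust for your logging conventions.
ERROR_PATTERN = re.compile(r"\b(ERROR|FATAL|Exception|Traceback)\b")


def extract_error_context(log_text: str, context: int = 2) -> str:
    """Keep lines matching ERROR_PATTERN plus `context` lines before and after each.

    Non-contiguous excerpts are separated by '...' so the model can see the gaps.
    """
    lines = log_text.splitlines()
    keep = set()
    for i, line in enumerate(lines):
        if ERROR_PATTERN.search(line):
            keep.update(range(max(0, i - context), min(len(lines), i + context + 1)))
    excerpt, prev = [], None
    for i in sorted(keep):
        if prev is not None and i != prev + 1:
            excerpt.append("...")
        excerpt.append(lines[i])
        prev = i
    return "\n".join(excerpt)
```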

With the cost of the API, one might not use Qwen3-Max for every piece of trivial code (smaller open models could handle simple tasks), but for mission-critical or very complex code generation tasks, Qwen3-Max is an invaluable tool that can save significant development time and reduce errors.

In all the above use cases, it’s important to note that Qwen3-Max acts best as a copilot or assistant, rather than a fully autonomous agent without human oversight (at least in these early stages). Enterprises will still employ human experts to verify outputs, enforce constraints, and handle edge cases.

However, by integrating Qwen3-Max into these workflows, organizations can achieve much greater scale and efficiency, whether it’s handling hundreds of customer queries in parallel, analyzing terabytes of text data, or writing thousands of lines of code for a new feature.

Python Integration Examples

To get started with Qwen3-Max as a developer, you can either use the Hugging Face Transformers library for open-source Qwen3 models or call the Qwen API for the full Qwen3-Max. Below are examples of both approaches.

Using Hugging Face Transformers (Open Models)

The open versions of Qwen3 (up to 235B-parameter MoE, or the 32B dense model) can be loaded via Hugging Face and run on your own hardware (with sufficient GPU memory). This is useful for development and testing. For example, let’s load the Qwen3-32B model and have it generate a response to a prompt:

from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "Qwen/Qwen3-32B"

# Load the tokenizer and model; device_map="auto" spreads the weights across available GPUs
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto",
)

# Define a user prompt
prompt = "What are the benefits of having a 1-trillion-parameter AI model in enterprise applications?"

# Format the input as a chat (Qwen uses a chat template with roles)
messages = [{"role": "user", "content": prompt}]
text_input = tokenizer.apply_chat_template(
    messages,
    tokenize=False,              # return the formatted string, not token IDs
    add_generation_prompt=True,  # append the header that starts the assistant turn
    enable_thinking=False,       # Qwen3 switch: answer without a <think> block
)

# Tokenize and generate
inputs = tokenizer(text_input, return_tensors="pt").to(model.device)
output_ids = model.generate(**inputs, max_new_tokens=200)

# Decode only the newly generated tokens (generate() also returns the prompt tokens)
new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
response = tokenizer.decode(new_tokens, skip_special_tokens=True)
print(response)

In this snippet, we load the Qwen3-32B model and tokenizer. We then prepare a chat-style prompt with the user’s question. Qwen models expect a specific dialogue format: tokenizer.apply_chat_template inserts the role markers for system/user/assistant turns as needed (here we pass no system message and include only the user content), and tokenize=False makes it return the formatted string so we can tokenize it in the next step. We set enable_thinking=False to get a direct answer without the <think> reasoning content, for simplicity. The model then generates up to 200 new tokens of response.

Finally, we decode only the newly generated tokens (slicing off the echoed prompt) to get the text answer. If you run this (with appropriate hardware), the model should produce an answer listing benefits of trillion-parameter models (such as improved accuracy and the ability to handle diverse tasks), possibly cut off at the 200-token limit. Using the open models this way lets you prototype prompts and understand Qwen’s behavior. Keep in mind that the 32B model is much smaller than Qwen3-Max, so Qwen3-Max via the API should be more capable (and may give longer, more nuanced answers). For local deployment of Qwen3 models, you can also use optimized inference engines like vLLM or DeepSpeed to handle long contexts more efficiently.
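If you instead set enable_thinking=True, the generated text begins with a <think> ... </think> block that you will usually want to separate from the final answer. A minimal text-level sketch, assuming the tags survive decoding (if your decode settings strip them, decode with skip_special_tokens=False, or split on token IDs as the Qwen3 model card does). The function name is our own, not part of transformers.

```python
def split_thinking(decoded: str) -> tuple[str, str]:
    """Split decoded Qwen3 output into (thinking, answer) at the last '</think>' tag.

    If no closing tag is present, everything is treated as the answer.
    """
    marker = "</think>"
    idx = decoded.rfind(marker)
    if idx == -1:
        return "", decoded.strip()
    thinking = decoded[:idx].replace("<think>", "", 1).strip()
    answer = decoded[idx + len(marker):].strip()
    return thinking, answer


thinking, answer = split_thinking("<think>\nCompare costs first.\n</think>\n\nBig models help.")
print(answer)
```

Only the answer part should be shown to users or stored in the conversation history; the thinking part is useful for logging and debugging.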

Using the Qwen API (OpenAI-Compatible)

For Qwen3-Max itself, you’ll access it through the Alibaba Cloud API. The Qwen team has made the API interface compatible with the OpenAI Python SDK for convenience. If you have the openai Python package (version 1.0 or later), you can call Qwen3-Max by pointing the client at the Qwen API base URL. Here’s an example:

import os
from openai import OpenAI

# Set your API credentials and endpoint (OpenAI SDK v1+ client object)
client = OpenAI(
    api_key=os.getenv("ALIYUN_API_KEY"),
    base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",  # Qwen's endpoint
)

# Send a chat completion request
response = client.chat.completions.create(
    model="qwen3-max",
    messages=[
        {"role": "system", "content": "You are an expert assistant specialized in AI."},
        {"role": "user", "content": "Give a short introduction to the Qwen3-Max model."},
    ],
)

# In SDK v1 the response is a typed object, so use attribute access
answer = response.choices[0].message.content
print(answer)

In this code, we create an OpenAI client that targets Alibaba’s DashScope service endpoint (which hosts Qwen) with our API key. Then we call client.chat.completions.create with model="qwen3-max", providing a system message to set context and a user message. The rest of the call works exactly as it would for OpenAI’s API: the response contains a list of choices, each with a message. The printed answer should be a concise introduction to Qwen3-Max, as requested. (Older tutorials use the module-level openai.api_base and openai.ChatCompletion.create; that interface was removed in version 1.0 of the SDK.) Using the OpenAI SDK makes integration into existing Python applications seamless. Under the hood, this sends an HTTP POST to the Qwen API endpoint with the given payload.

REST API Example

For production integration, you may prefer to call the Qwen3-Max API directly via HTTPS (without an SDK). The API expects a JSON payload and returns a JSON response. Below is an example using curl to illustrate the HTTP request format:

curl -X POST https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "qwen3-max",
    "messages": [
      {"role": "user", "content": "How can Qwen3-Max be used in a customer service application?"}
    ],
    "temperature": 0.7,
    "max_tokens": 300
  }'

This curl command sends a chat completion request to the Qwen3-Max API. We include the authorization header with our API key, and a JSON body specifying the model, an array of messages, and some optional generation parameters (temperature, max_tokens). The API will respond with a JSON object containing the model’s answer. A typical response might look like:

{
  "id": "cmpl-abc123...",
  "object": "chat.completion",
  "created": 1697300000,
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "Qwen3-Max can be deployed as an intelligent chatbot to assist customers. It can understand customer queries (even lengthy or complex ones) and provide accurate, context-aware responses. For example, in a customer service application, Qwen3-Max could retrieve relevant knowledge base articles to answer a user's question, ask clarifying questions if needed, and output the answer in a clear and helpful manner. Its long context window allows it to remember the entire conversation history, so customers don't have to repeat information. Additionally, Qwen3-Max’s structured output capability means it can format answers (like step-by-step instructions) cleanly. Overall, it would improve response times and consistency in customer support while handling multi-turn dialogues effectively."
      },
      "finish_reason": "stop"
    }
  ]
}

The content in the assistant’s message is the answer from Qwen3-Max. As shown, the model gave a multi-sentence explanation of how it can be used in customer service, leveraging its strengths (understanding long queries, retrieving info, multi-turn memory, structured answers). This response JSON structure is similar to OpenAI’s; you can parse it and extract the answer content easily in your application.
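Extracting the answer takes a few lines of standard-library code. The sketch assumes the response follows the OpenAI chat completion shape shown above; checking finish_reason for "length" (output cut off at max_tokens) is a defensive addition worth keeping in production.

```python
import json


def extract_answer(raw: str) -> tuple[str, bool]:
    """Parse an OpenAI-style chat completion body into (content, was_truncated)."""
    data = json.loads(raw)
    choices = data.get("choices") or []
    if not choices:
        raise ValueError(f"No choices in response: {data.get('error', data)}")
    choice = choices[0]
    content = choice["message"]["content"]
    # finish_reason "length" means the answer hit max_tokens and is incomplete
    truncated = choice.get("finish_reason") == "length"
    return content, truncated
```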

In a production setting, you would typically use an HTTP client in your language of choice (for example, Python’s requests library or JavaScript’s fetch) to send such requests. The key is to include your API key in the header and follow the endpoint format. Alibaba’s pricing for the Qwen3-Max API is around $6.4 per million output tokens, which is competitive given the model’s capability (for comparison, some other providers charge ~$10 per million for their top models). For large outputs, consider streaming the response ("stream": true) instead of waiting for the full completion, and handle network errors and rate limits as per Alibaba Cloud’s API guidelines.
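For illustration, a standard-library version of the same request might look like the sketch below. The retry policy (which status codes count as transient, four attempts, doubling delays) is an assumption, not Alibaba Cloud’s documented guidance, so check their rate-limit documentation before relying on it. The send and sleep parameters exist only so the retry logic can be tested without a network.

```python
import json
import time
import urllib.error
import urllib.request

API_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions"
RETRYABLE = {429, 500, 502, 503, 504}  # assumed set of transient HTTP status codes


def build_request(api_key: str, messages: list[dict], **params) -> urllib.request.Request:
    """Create the POST request for a chat completion (no network I/O happens here)."""
    body = json.dumps({"model": "qwen3-max", "messages": messages, **params}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send_with_retries(request, send=urllib.request.urlopen, max_attempts=4,
                      base_delay=1.0, sleep=time.sleep) -> dict:
    """Send the request, retrying transient HTTP errors with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            with send(request, timeout=120) as resp:
                return json.loads(resp.read().decode("utf-8"))
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE or attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s, ... by default
    raise RuntimeError("max_attempts must be at least 1")
```

Usage would be send_with_retries(build_request(key, [{"role": "user", "content": "Hello"}], max_tokens=300)), with the returned dictionary parsed like the JSON response above.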

With the above examples, developers should be able to integrate Qwen3-Max into applications either by self-hosting smaller Qwen models for experimentation or by directly calling the hosted Qwen3-Max for production usage. Next, we’ll cover some best practices for prompting Qwen3-Max to get the most out of it.

Prompting Best Practices

Working with a model as powerful as Qwen3-Max still requires careful prompt design to achieve optimal results. Here are some best practices for prompting Qwen3-Max in developer-oriented scenarios:

Use System Messages for Context/Role: Since Qwen’s API supports the “system” role, you can provide a system message to prime the model with context or persona. For instance, setting {"role": "system", "content": "You are a helpful coding assistant."} will bias Qwen3-Max to give answers in that style. This is useful for domain-specific behavior (like making it act as a cybersecurity expert or a friendly customer support agent).

Leverage the Thinking Mode When Needed: In the open-source Qwen models, you can explicitly enable thinking mode to get chain-of-thought. While the API version doesn’t expose a direct flag, you can often induce more reasoning by instructing the model accordingly. For example, including “Let’s think step by step.” in the user prompt may encourage Qwen3-Max to work through intermediate steps in its visible answer (the API does not return a separate <think> block). Use this for complex problems where you want the model to take its time reasoning. Conversely, for straightforward queries, you can tell it “Answer briefly and directly.” to get a faster, concise reply.

Control Temperature and Decoding: For Qwen3-Max, a temperature around 0.6–0.7 is often a good starting point. Lower values (0.2–0.5) make the output more deterministic, which is useful for factual Q&A or when you need consistent JSON formats. A higher temperature can make the model more creative or exploratory, which might help in brainstorming or design discussions, but can also introduce rambling. Qwen3’s documentation recommends different sampling settings for thinking and non-thinking modes (thinking: temperature 0.6, top_p 0.95; non-thinking: temperature 0.7, top_p 0.8). As always, experiment with these parameters to suit your task.
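Keeping these values in one place makes experiments easier. The thinking and non-thinking presets below use the values from the Qwen3 open-model card (which also recommends top_k=20, omitted here because it is not a standard OpenAI chat-completions parameter); the strict_json preset is an assumption based on the advice above, and none of them has been tuned specifically for Qwen3-Max.

```python
# Sampling presets; "thinking"/"non_thinking" follow the Qwen3 open-model card.
SAMPLING_PRESETS = {
    "thinking": {"temperature": 0.6, "top_p": 0.95},
    "non_thinking": {"temperature": 0.7, "top_p": 0.8},
    "strict_json": {"temperature": 0.2, "top_p": 0.8},  # assumption: low variance for parsers
}


def request_params(mode: str, **overrides) -> dict:
    """Return generation parameters for a preset, with per-call overrides applied."""
    if mode not in SAMPLING_PRESETS:
        raise KeyError(f"Unknown preset {mode!r}; choose from {sorted(SAMPLING_PRESETS)}")
    return {**SAMPLING_PRESETS[mode], **overrides}
```

The returned dictionary can be unpacked into a chat completion call, e.g. client.chat.completions.create(model="qwen3-max", messages=messages, **request_params("non_thinking")).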

Specify Output Format Clearly: Qwen3-Max is very responsive to format instructions. Always clarify in your prompt if you need a certain format. For example, “Provide the answer as a JSON object with fields solution and explanation. Only JSON, no extra text.” Qwen will usually comply and give you exactly that format. When benchmarking or using in automated pipelines, this is vital. The Qwen team recommends standardizing prompts for certain tasks: e.g., for math problems include a phrase about final answer in \boxed{}, for multiple-choice ask for the answer in an "answer": "X" JSON field. Following those guidelines yields consistent outputs that are easy to parse.
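On the consuming side, it pays to validate replies rather than trust them. A minimal sketch, assuming the JSON-only prompt above with solution and explanation fields and the \boxed{} convention for math: the fence-stripping is a defensive assumption (models sometimes wrap JSON in Markdown anyway), and the boxed-answer regex deliberately ignores nested braces.

```python
import json
import re

# Matches an optional leading Markdown code fence (with or without "json") or a trailing one.
FENCE = re.compile(r"^`{3}(?:json)?\s*|\s*`{3}$")


def parse_json_answer(text: str, required=("solution", "explanation")) -> dict:
    """Parse a reply that was asked to be JSON only and check the required fields."""
    data = json.loads(FENCE.sub("", text.strip()))
    if not isinstance(data, dict):
        raise ValueError("Reply is not a JSON object")
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"Reply is missing fields: {missing}")
    return data


def extract_boxed(text: str):
    """Return the contents of the last \\boxed{...} in a math answer, or None."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1] if matches else None
```

When validation fails, a common pattern is to re-prompt once with the error message before giving up.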

Utilize Multi-Turn Conversations for Clarification: Qwen3-Max can carry context across many turns, so take advantage of that. If the user query is ambiguous, you can program the assistant to ask a follow-up question rather than guessing. This can be done by prompting it (in a system message or as an example) with something like: “If the user’s request is unclear or incomplete, ask a clarifying question before answering.” In customer service or consulting applications, this leads to more accurate help. Qwen3-Max’s long context window means it won’t lose track of the conversation when it receives the answer to its clarifying question.

Avoid Including Undesired Content in History: As noted earlier, if you use the thinking mode and get <think> outputs, do not include those in the next turn’s history that you send back to the API. Only include what the assistant actually said to the user. This avoids confusing the model or leaking the chain-of-thought to the user. The same goes for any system messages or hidden prompts – keep them separate and don’t inadvertently reveal them later in the conversation turns.
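Both of the last two points come down to how you maintain the message list between turns. The class below is a hypothetical helper, not part of any Qwen SDK: it seeds the conversation with the clarifying-question instruction from above and strips any <think> ... </think> block before an assistant reply is stored.

```python
import re

THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


class Conversation:
    """Chat history that stores only user-visible assistant text."""

    def __init__(self, system_prompt: str):
        self.messages = [{"role": "system", "content": system_prompt}]

    def add_user(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, raw_output: str) -> str:
        """Strip any <think>...</think> reasoning before storing; return the visible reply."""
        visible = THINK_BLOCK.sub("", raw_output).strip()
        self.messages.append({"role": "assistant", "content": visible})
        return visible


conv = Conversation(
    "If the user's request is unclear or incomplete, ask a clarifying question before answering."
)
conv.add_user("Reset my account.")
conv.add_assistant("<think>Which account?</think>Which account do you mean: billing or login?")
```

conv.messages is what you send with the next request, so the model sees its own earlier replies but never its earlier reasoning.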

Test Prompts with Smaller Qwen Models: Since Qwen3-Max usage has a cost, you can first test and refine your prompt on Qwen3-14B or Qwen3-32B (open models) locally. They share the same chat format and broadly similar behavior. Once you get a good result, switch to Qwen3-Max via the API for the final run: the output quality will likely improve, and the prompt should behave in much the same way.

Be Mindful of Long Context: While Qwen3-Max can handle very long inputs, always check if that much context is truly needed for a given query. Including irrelevant information can still confuse any model. If you give it 200k tokens of text, ensure that relevant pieces are clearly indicated (perhaps via section headings or an index). If possible, trim the context to what’s necessary using a retrieval step. Qwen3-Max will try to use all information given, but if the prompt is too cluttered, it might pick up wrong cues. Good prompt hygiene (like telling the model “the following are notes from different sources” or structuring the prompt with labels) can help it navigate large inputs more effectively.
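A hedged sketch of the trimming step: split a long document into chunks under a rough token budget, then keep only the chunks that best match the question. The four-characters-per-token estimate is a crude assumption (use the Qwen tokenizer for real counts), and the keyword-overlap ranking is a stand-in for a proper embedding-based retriever.

```python
import re


def approx_tokens(text: str) -> int:
    """Rough token estimate; assumes ~4 characters per token (English prose)."""
    return len(text) // 4 + 1


def chunk_text(text: str, max_tokens: int = 2000) -> list[str]:
    """Split text on blank lines into chunks of roughly max_tokens or fewer.

    A single paragraph longer than the budget becomes its own oversized chunk.
    """
    chunks, current = [], []
    for para in re.split(r"\n\s*\n", text):
        candidate = "\n\n".join(current + [para])
        if current and approx_tokens(candidate) > max_tokens:
            chunks.append("\n\n".join(current))
            current = [para]
        else:
            current.append(para)
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def top_chunks(chunks: list[str], query: str, k: int = 3) -> list[str]:
    """Rank chunks by keyword overlap with the query (a stand-in for real retrieval)."""
    terms = set(re.findall(r"\w+", query.lower()))

    def overlap(chunk: str) -> int:
        return len(terms & set(re.findall(r"\w+", chunk.lower())))

    # sorted() is stable even with reverse=True, so ties keep document order
    return sorted(chunks, key=overlap, reverse=True)[:k]
```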

Following these practices, developers have reported that Qwen3-Max is very controllable and obedient to instructions, especially compared to earlier models. It rarely goes off on tangents unless asked to be creative, and it sticks to the requested format diligently. This makes it a reliable component in systems where predictable output is important (e.g., an automated report generator that must produce valid JSON for another system to consume). By investing time in prompt design and instructions, you can harness the full power of Qwen3-Max while avoiding common pitfalls like verbose irrelevancies or format deviations.

Limitations and Considerations

While Qwen3-Max is a top-tier model, developers should be aware of its limitations and design trade-offs:

Closed-Source and Access: Qwen3-Max’s model weights are not publicly released, meaning you cannot self-host this 1T-param model or fine-tune it on your proprietary data. Access is only via Alibaba Cloud’s API (or their platform interfaces). This introduces dependency on an external service and potential data privacy considerations. Alibaba has enterprise offerings for secure cloud usage, but organizations with strict on-prem requirements might find this limiting. The smaller Qwen models are open-source, so one workaround is to fine-tune a smaller Qwen on your data and use Qwen3-Max for inference on general reasoning.

Cost and Throughput: As of 2025, using a trillion-parameter model is expensive. Although Qwen3-Max’s API pricing (~$6.4 per 1M output tokens) is lower than some competitors’, it can still add up with huge contexts or heavy usage. Inference is also relatively heavy: Qwen3-Max is optimized and “blazing fast for its size”, but it is inevitably slower than smaller models. For interactive applications, you may need to use streaming (the OpenAI-compatible API can stream tokens as they are generated) to partially mitigate latency. In batch processing scenarios, consider whether all tasks truly need Qwen3-Max or whether a mix of model sizes can keep costs down (e.g., route easy queries to a 14B model and use Qwen3-Max for the hard ones).
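A back-of-the-envelope sketch of both ideas follows. The $6.4 figure is the output-token price quoted in this article and may change; the routing heuristic (prompt length plus keywords) and the name small-qwen-model are hypothetical placeholders for whatever smaller Qwen3 deployment you run.

```python
import re

OUTPUT_PRICE_PER_M = 6.4  # USD per 1M output tokens, the figure quoted in this article
HARD_KEYWORDS = {"prove", "design", "debug", "architecture"}  # illustrative only


def estimate_output_cost(output_tokens: int, price_per_m: float = OUTPUT_PRICE_PER_M) -> float:
    """Output-token cost in USD; input-token charges, if any, are not included."""
    return output_tokens / 1_000_000 * price_per_m


def choose_model(prompt: str) -> str:
    """Hypothetical router: long or keyword-flagged prompts go to Qwen3-Max."""
    words = set(re.findall(r"\w+", prompt.lower()))
    is_hard = len(prompt) > 2000 or bool(words & HARD_KEYWORDS)
    return "qwen3-max" if is_hard else "small-qwen-model"  # placeholder model name
```

For example, 2,000 replies of about 250 output tokens each come to 500,000 output tokens, or about $3.20 at that price.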

Context Window Limits: The effective context window in the current Qwen3-Max release is around 256k tokens for input (with output tokens on top of that). This is enormous, but not the full 1M that was used in training – the higher lengths (up to 1M) require using rope scaling techniques that might degrade performance or require special handling. In practice, feeding more than a couple hundred thousand tokens might see some quality drop or increased latency. And while 256k is plenty for most cases, certain edge cases (e.g., analyzing an entire code repository with millions of lines, or training data extraction attacks) could hit that limit. Always ensure your input encoding stays within allowed limits; the model won’t process beyond that and the API might reject overly large requests.
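The API handles long inputs server-side, but when you self-host an open Qwen3 model, extending its window is a config change. A minimal sketch that edits a downloaded config.json; the rope_scaling keys and values mirror the Qwen3 open-model card (factor 4.0 over a 32,768-token native window, about 131k tokens), and the card notes that static YaRN can slightly hurt quality on short texts, so enable it only when you need long inputs.

```python
import json
from pathlib import Path


def enable_yarn(config_path: str, factor: float = 4.0, original_max: int = 32768) -> dict:
    """Add a YaRN rope_scaling entry to a local Qwen3 config.json and save it in place.

    Applies to self-hosted open models only; Qwen3-Max is configured by Alibaba Cloud.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config["rope_scaling"] = {
        "rope_type": "yarn",
        "factor": factor,
        "original_max_position_embeddings": original_max,
    }
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```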

Multimodality: Qwen3-Max itself is text-only. It cannot directly process images, audio, or video. Alibaba has introduced separate models like Qwen3-Omni (30B) for multimodal inputs (text + vision + audio) and Qwen3-VL for vision-language tasks, but those are distinct from Qwen3-Max. If your use case requires image analysis or speech, you’d either need to use those specialized models or convert that data to text (which has limits in detail). The integration of modalities is an active area of development, and future versions might merge these capabilities, but currently plan accordingly (e.g., use Qwen3-Max for reasoning and a vision model for image recognition in a pipeline).

Creativity and Style: By design, Qwen3-Max is tuned for precision and efficiency over open-ended creativity. Users and independent reviewers have noted that it is not as “conversational or creative” as some other models in freeform dialogue. It tends to be a bit formal and terse, focusing on facts. This is ideal for business use (no unnecessary fluff), but if your application is a creative writing assistant or a friendly companion bot, Qwen3-Max might come off as too serious or dry. It can certainly produce fiction or jokes if prompted, but its strength is clearly in technical accuracy. For any content where a bit of personality or flair is needed, you may have to explicitly prompt it to adopt a style, or use a different model geared towards that. The flip side is that Qwen3-Max is less likely to hallucinate fanciful facts or go off-script, which is a positive for factual applications.

Knowledge Cutoff and Updates: Like all pretrained models, Qwen3-Max has a knowledge cutoff (likely sometime in 2025 given its release). It may not know very recent events or highly specific new information unless provided in the prompt. Alibaba can update the model or fine-tune it periodically, but as a user, you should be mindful that Qwen3-Max’s knowledge might be a few months out-of-date. In enterprise settings, this is usually fine because you’ll provide context or use RAG for company-specific data. But for open-domain questions about latest news, Qwen3-Max might not have that information. Also, ensure to provide current data for time-sensitive queries (e.g., stock prices, today’s weather) via tools or context, as the model won’t have it inherently.

Safety and Alignment: Qwen3-Max has undergone alignment training to follow instructions and avoid toxic or harmful outputs (Alibaba likely uses its Qwen3Guard models and other mechanisms for safety). However, the exact safety tuning is not fully transparent. As a developer, you should implement your own content filtering in high-stakes applications. The model generally refuses clearly disallowed requests, but subtle biases or inaccuracies can still occur, especially on subjective or sensitive topics. Always test the model’s behavior on a range of inputs relevant to your domain: if it’s a healthcare assistant, see how it handles medical advice; if it’s a financial tool, see how it handles investment suggestions. Review the outputs to ensure they meet your compliance and ethical standards. Since Qwen3-Max is relatively new, its safety profile might not be as extensively battle-tested as something like ChatGPT, so caution and human oversight are advised for applications with legal or ethical implications.

Competition and Lock-in: Avoid becoming overly reliant on Qwen3-Max-specific features in case you need to switch models later. While Qwen3-Max is top-notch now, the AI field moves fast. The good news is Qwen follows OpenAI API standards, so swapping to another model (OpenAI, Google, etc.) is mostly a matter of endpoint change – but specialized things like Qwen’s thinking mode or certain prompt templates might not directly transfer. Design your system to be somewhat model-agnostic if possible, using abstraction layers for the LLM calls. This will also allow you to A/B test Qwen3-Max against others over time to ensure you’re using the best tool for the job.
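A thin interface is usually enough. The sketch below defines a small Protocol that application code depends on, an adapter for any OpenAI-compatible client (such as the DashScope-configured client from the integration examples), and an offline test double; the class and method names are illustrative, not from any Qwen library.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface the rest of the application depends on."""

    def complete(self, messages: list[dict], **params) -> str: ...


class OpenAICompatibleModel:
    """Adapter for any OpenAI-compatible endpoint (Qwen via DashScope, OpenAI, etc.)."""

    def __init__(self, client, model: str):
        self.client = client  # e.g. an openai.OpenAI instance with base_url and api_key set
        self.model = model

    def complete(self, messages: list[dict], **params) -> str:
        response = self.client.chat.completions.create(
            model=self.model, messages=messages, **params
        )
        return response.choices[0].message.content


class EchoModel:
    """Test double that returns the last message; handy for offline tests."""

    def complete(self, messages: list[dict], **params) -> str:
        return messages[-1]["content"]


def answer(model: ChatModel, question: str) -> str:
    """Application code sees only the ChatModel interface, never a vendor SDK."""
    return model.complete([{"role": "user", "content": question}], temperature=0.7)
```

Swapping providers, or running an A/B test, then means constructing a different adapter rather than touching application code.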

By keeping these considerations in mind, you can mitigate potential issues and harness Qwen3-Max effectively. In many cases, the pros (powerful reasoning, long context, tool use) far outweigh the cons for enterprise uses, which is why Qwen3-Max is generating a lot of interest as a serious AI workhorse. Just be sure to integrate it in a way that accounts for its closed nature and to complement it with other tools or human oversight where necessary.

Developer FAQs

How can I access Qwen3-Max and obtain API credentials?

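Create an Alibaba Cloud account, enable its model service (DashScope), and generate an API key; then configure your client with the OpenAI-compatible base URL https://dashscope-intl.aliyuncs.com/compatible-mode/v1 (DashScope is Alibaba Cloud’s API gateway for models). You then send requests with model: "qwen3-max" in the payload. If you prefer a UI, you can also use the Qwen Chat web interface for interactive access, but for integration into applications, the REST API is the way to go. Note that Qwen3-Max may currently be in a preview or controlled access phase, so ensure your Alibaba Cloud account has the necessary service enabled. As of late 2025, the Qwen3-Max-Instruct model is available via the API, while the Qwen3-Max-Thinking variant might be released later, once it is fully trained.

Is Qwen3-Max open source? Can I run it on my own servers?

No. Qwen3-Max’s weights are not publicly released, so you cannot self-host or fine-tune it; access is only through Alibaba Cloud’s API and platform interfaces. If you need to run models on your own servers, the open-weight Qwen3 models (up to the 235B-parameter MoE, or the 32B dense model) can be downloaded from Hugging Face and deployed on your own hardware.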

What is the difference between Qwen3-Max-Instruct and Qwen3-Max-Thinking models?

The two variants differ in how much deliberate reasoning they do before answering. The Thinking variant may be offered as a separate model (e.g., qwen3-max-thinking) or as a mode of the API. In the smaller open-source models, you can simulate some of this by using enable_thinking=True to get chain-of-thought output, but that is the unified mode of a much smaller base model, not the full heavy model. In summary, Max-Instruct = fast, single-pass, for general use; Max-Thinking = slower, multi-step, for advanced reasoning. Most developers will default to Instruct unless a use case explicitly demands the extra rigor of Thinking mode.

How do I use Qwen3’s “thinking mode” or chain-of-thought in my application?

With the open Qwen3 models, call tokenizer.apply_chat_template(..., enable_thinking=True), which will cause the model’s output to include a <think> ... </think> section containing its reasoning. After generation, you need to split this out from the final answer; the Qwen model card provides sample code that locates the </think> token and separates the thinking content. With enable_thinking=False, the chat template tells the model to skip the explicit reasoning block, so it produces just the answer. In the API (Qwen3-Max-Instruct), there is no direct flag to get the chain-of-thought as output (and the model may not generate reasoning text at all unless it is needed for the answer). The Thinking variant, when available, might return the reasoning steps or at least use them behind the scenes. If your goal is to use chain-of-thought to improve transparency or correctness, a common approach is to prompt the model to “show your reasoning, then answer”. Qwen3-Max may then output something like “Reasoning: (some steps)… Answer: (final answer)”, which you would have to parse. Note, however, that in many production scenarios you don’t want to reveal the raw reasoning to end users, as it can be confusing or verbose; it is mostly a tool for developers to trace the model’s logic. Qwen-Agent, for instance, captures the thinking steps to decide on tool use, but only the final answer is returned to the user. In summary: with open models, use the built-in template to enable thinking mode and parse the <think> block; with the API model, there is no official way to get the thinking content, but you can approximate it by prompting or wait for the dedicated thinking model release.

What is the maximum context length of Qwen3-Max and how do I work with such long contexts?

The current Qwen3-Max release accepts roughly 256k tokens of input, and lengths beyond a model’s native window rely on YaRN rope scaling. For the open Qwen3 models, you enable this yourself, either by editing the model config (adding "rope_scaling": {"rope_type": "yarn", "factor": 4.0, ...}) or by passing arguments to vLLM or other servers. In Alibaba’s API, they mention dynamic YaRN is supported out of the box, so presumably if you supply a very large input, the backend will handle it (within allowed limits). To work with such long contexts, first make sure your input fits: the Qwen tokenizer is a byte-level BPE tokenizer, so it can encode any text, but you should count tokens before sending. Memory usage is a concern: generating with a 256k input will use a lot of GPU memory (for open models) or cost more (under per-token API pricing), so don’t use the full context unless necessary. If you have a huge document, consider segmenting it and using retrieval to pull relevant parts, which is often more efficient than dumping everything in. However, if you truly need it (e.g., analyzing a long legal contract in one go), Qwen3-Max is one of the few models that can do it: send the entire text as a single message (or several messages totalling that length) and ask your question referencing it. The model will try to make use of all parts. Also be mindful of the output length, since output tokens share the context budget. The open Qwen3 config uses max_position_embeddings = 40960, which the model card describes as reserving 32,768 tokens for output and 8,192 tokens for a typical prompt. When using extended contexts, increase the maximum output length accordingly if you expect a long answer; the Qwen3 model card recommends an output length of 32,768 tokens for most queries and up to 38,912 for highly complex problems. In the API, set max_tokens to control how long an answer you want. With a very long input, you usually want a summary or a specific answer rather than an equally long output, so the output is typically much shorter than the input.

Does Qwen3-Max support functions or plugins (like OpenAI function calling)?

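You can get function-calling behavior through prompting: describe the available functions in the system message and instruct the model to reply with a call in a fixed JSON format, e.g., “If you need the weather, respond only with { "function": "get_weather", "location": "XYZ"}”, and Qwen will likely comply. Alibaba might introduce an official function calling API later, since they aim for compatibility, but at the moment you’d implement it at the application level or use Qwen-Agent. As for plugins (in the sense of browsing or other tools that OpenAI plugins allow), Alibaba’s approach is that you integrate the tools yourself using Qwen-Agent or custom logic. Qwen3-Max can browse the web if you give it a tool that performs HTTP requests (some examples in the community have done this), but there isn’t a plugin “store” like OpenAI’s. In summary, there is no built-in function calling parameter yet, but the model can handle tool use via prompting or the Qwen-Agent library: you define how it should output a function call, it follows that format, and your code executes the call and feeds the result back.

What kind of hardware or infrastructure is recommended to use Qwen3 models?

For Qwen3-Max itself, nothing beyond network access and an API key: the model runs on Alibaba Cloud and you call it over HTTPS. For the open-weight Qwen3 models, requirements grow with model size and context length: you need enough GPU memory for the weights plus the KV cache of long inputs, and inference engines such as vLLM or DeepSpeed help serve long contexts efficiently.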

By understanding Qwen3-Max’s design and following the above guidelines, developers and AI engineers can effectively incorporate this state-of-the-art LLM into their systems.

Qwen3-Max stands out for its blend of sheer scale, advanced reasoning, and practical integration features – making it a compelling choice for those building the next generation of AI-driven applications in the enterprise domain.

With careful prompt engineering and system design, Qwen3-Max can serve as a powerful AI engine for everything from intelligent assistants and autonomous agents to large-scale analytics and decision support tools.

In summary, Qwen3-Max is not just a big model – it’s a big step towards more “thinking”, versatile AI solutions for real-world use.