Qwen3-Next is a next-generation large language model (LLM) from Alibaba’s Qwen family, designed for cutting-edge applications in AI systems development. It targets developers and engineers building autonomous agents, long-context reasoning pipelines, and enterprise AI solutions.

Qwen3-Next emphasizes agentic planning, multi-step reasoning, ultra-long context handling, and tool-use integration, pushing beyond the capabilities of previous Qwen AI models. It represents a future-oriented architecture that scales both model size and context length efficiently.

Unlike earlier models that required separate versions for chat vs. reasoning, Qwen3-Next integrates “thinking” and “non-thinking” modes in one framework, dynamically adapting to complex queries with internal reasoning when needed. Empirical evaluations show Qwen3 achieving state-of-the-art results on code generation, mathematical reasoning, and agentic tasks, competitive with much larger models, while also expanding multilingual support from 29 to 119 languages.

In summary, Qwen3-Next offers an enterprise-grade LLM platform with unprecedented context depth and intelligent orchestration abilities, making it ideal for advanced AI agent development and complex automation workflows.

This guide provides a comprehensive technical overview of Qwen3-Next’s architecture, reasoning enhancements, tool use capabilities, and integration patterns. We use a documentation-style approach suited for experienced developers, including code examples (Python and REST API) and best practices for prompt engineering.

The goal is to enable you to effectively leverage Qwen3-Next for building autonomous agents, large-scale retrieval systems, coding assistants, and more, with a clear understanding of its internals and how to deploy it in practice.

Architecture Insights

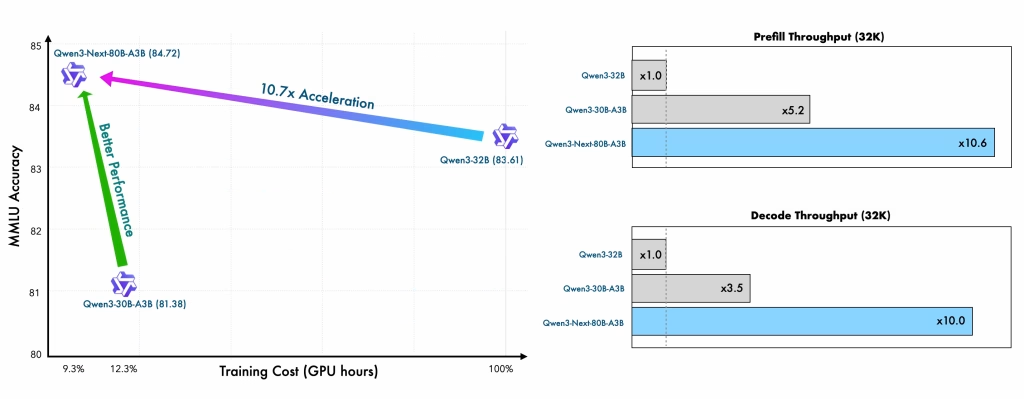

Qwen3-Next introduces a novel hybrid Mixture-of-Experts (MoE) Transformer architecture optimized for both long context and parameter efficiency. The model has 80 billion parameters in total, but only 3B parameters are activated per token thanks to its high-sparsity MoE design.

This means Qwen3-Next delivers the capacity of a massive model while achieving the compute cost of a much smaller model (roughly 10× lower FLOPs per token in MoE layers). The MoE module consists of 512 expert networks (plus a shared expert), with only 10 experts gated “on” for each token processing step. A gating network routes each token’s representation to the most relevant experts, heavily leveraging high-speed GPU interconnect (e.g. NVLink) to minimize communication overhead.

This sparse routing architecture is key to Qwen3-Next’s ability to scale up model size without a proportional increase in inference latency.
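To make the routing step concrete, here is a minimal pure-Python sketch of top-k expert selection using the figures quoted above (512 routed experts, 10 active per token). The function name `route_token`, the random logits, and the choice to apply softmax over only the selected experts are illustrative assumptions, not the model's actual router implementation.

```python
import math
import random

NUM_EXPERTS = 512  # routed experts, per the text above
TOP_K = 10         # experts activated per token, per the text above


def route_token(router_logits, top_k=TOP_K):
    """Return [(expert_index, weight), ...] for the top_k highest-scoring experts."""
    # Indices of the top_k largest router logits, best first.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    # ASSUMPTION: softmax over the selected logits only, so the weights sum to 1.
    max_logit = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - max_logit) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


rng = random.Random(0)
# Stand-in for the output of a learned gating network for one token.
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
assignment = route_token(logits)
print(len(assignment), "experts selected;",
      f"{TOP_K / NUM_EXPERTS:.1%} of routed experts active")
```

Only the selected experts run their feed-forward computation for this token, which is where the compute savings come from; the shared expert mentioned above would be applied in addition and is omitted here.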

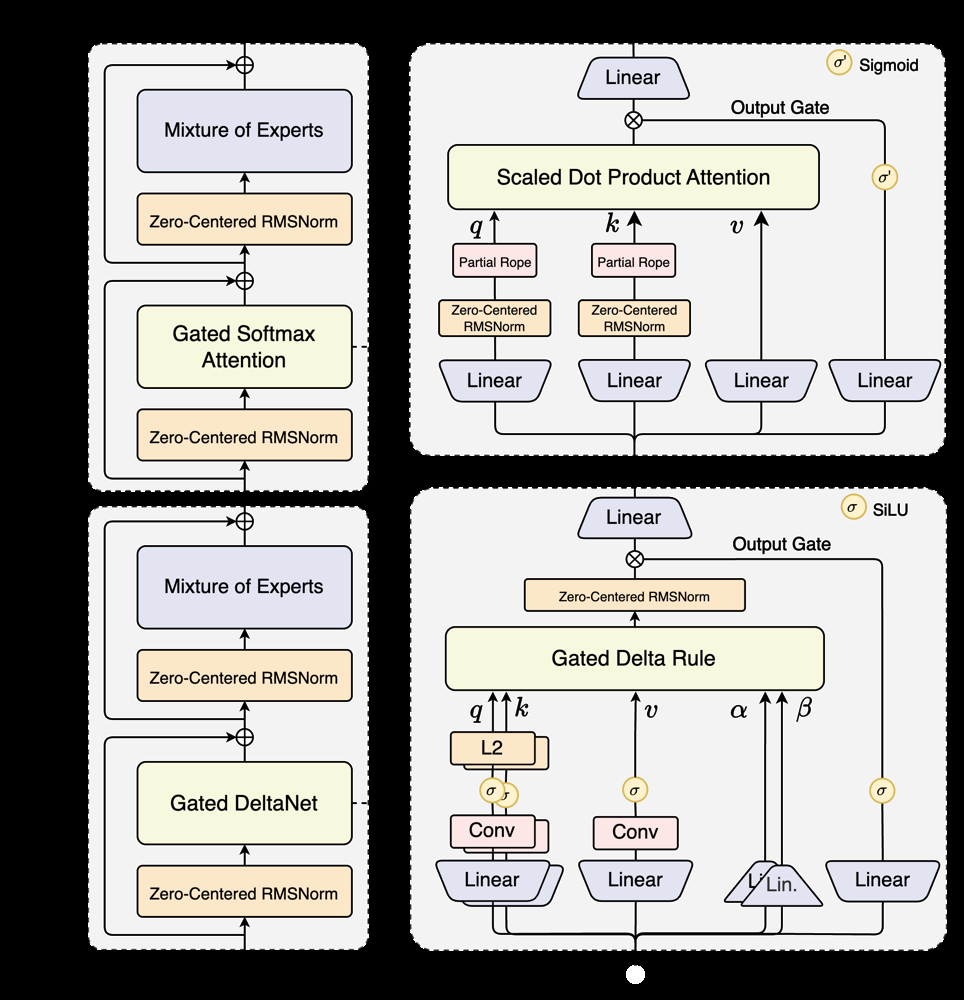

Hybrid Attention Mechanism: Standard self-attention layers are interleaved with a custom linear attention module to efficiently support ultra-long sequence lengths.

Specifically, Qwen3-Next’s 48 Transformer layers follow a 3-to-1 pattern: three consecutive layers use a Gated DeltaNet (a linear attention mechanism) and the fourth uses a Gated Softmax Attention (a full scaled dot-product attention with gating). In other words, 75% of the layers (36 of 48) employ a linear-time attention for scalability, and 25% (12 of 48) use regular attention to preserve modeling fidelity for shorter-range dependencies.
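The repeating layout (three Gated DeltaNet layers followed by one gated full-attention layer) can be sketched in a few lines. The string labels and the helper `layer_types` are hypothetical names chosen for illustration; they are not identifiers from the model's code.

```python
NUM_LAYERS = 48
# One repeating block: three linear-attention layers, then one full-attention layer.
BLOCK = ["gated_deltanet"] * 3 + ["gated_attention"]


def layer_types(num_layers=NUM_LAYERS, block=BLOCK):
    """Return the attention type used by each layer, in order."""
    return [block[i % len(block)] for i in range(num_layers)]


layout = layer_types()
linear = layout.count("gated_deltanet")
full = layout.count("gated_attention")
print(f"{linear} linear-attention layers, {full} full-attention layers")
```

Counting the entries confirms the 75% / 25% split described above.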

The “gated” aspect means each attention block has an output gate (with sigmoid or SiLU activation) that modulates the contribution of that layer’s output. This hybrid layout allows Qwen3-Next to process extremely long sequences (hundreds of thousands of tokens) with nearly linear memory and compute scaling. It avoids the quadratic blow-up of standard attention by using efficient DeltaNet layers that focus on local changes (deltas) in the sequence, interspersed with occasional full attention to maintain global coherence.

Additionally, Qwen3-Next adopts Grouped Query Attention (GQA) in its full-attention layers (with 16 query heads and 2 key/value heads) and applies Rotary Position Embeddings (RoPE) to only a subset of dimensions, further optimizing long-context modeling.
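A rough calculation shows why few KV heads and few full-attention layers matter at long context. The sketch below estimates key/value cache size for one 262,144-token sequence. The head dimension of 256 and the 16-bit cache are assumptions made for illustration, and the fixed-size state kept by the linear-attention layers is ignored.

```python
def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Approximate KV cache size for one sequence."""
    # Factor of 2: one key tensor and one value tensor per layer.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


SEQ_LEN = 262_144
HEAD_DIM = 256  # ASSUMPTION: not stated in the text above
GIB = 1024 ** 3

# Hybrid layout: only the 12 full-attention layers keep a per-token cache, 2 KV heads each.
hybrid = kv_cache_bytes(SEQ_LEN, num_layers=12, num_kv_heads=2, head_dim=HEAD_DIM)
# Hypothetical baseline: all 48 layers use full attention with 16 KV heads.
baseline = kv_cache_bytes(SEQ_LEN, num_layers=48, num_kv_heads=16, head_dim=HEAD_DIM)

print(f"hybrid + GQA: {hybrid / GIB:.0f} GiB, baseline: {baseline / GIB:.0f} GiB, "
      f"ratio: {baseline // hybrid}x")
```

Under these assumptions the cache shrinks by a factor of 32: 4× from caching in a quarter of the layers and 8× from using 2 KV heads instead of 16.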

Beyond attention and MoE, Qwen3-Next includes various stability optimizations at the architectural level. It uses zero-centered RMSNorm normalization (with weight decay) throughout, which has been shown to stabilize training, especially in deep or MoE models. The model also employs a technique called Multi-Token Prediction (MTP) during pre-training. MTP allows the model to predict multiple tokens in parallel during training, improving training efficiency and also enabling faster inference by generating chunks of tokens together (when supported by the inference stack).

Notably, Qwen3-Next can generate multiple tokens per forward step, although using this in practice requires specialized decoding support (discussed later). All these innovations are integrated under an Apache 2.0 license, as Qwen3-Next is fully open-source and available to the community for extension and fine-tuning.

In summary, Qwen3-Next’s architecture is built for scale and speed: a sparse MoE for parameter scaling, and a hybrid attention scheme (with gated linear attention + periodic full attention) for context scaling. This allows the model to natively support context windows up to 262,144 tokens (256K) and beyond, which is orders of magnitude greater than typical LLMs.

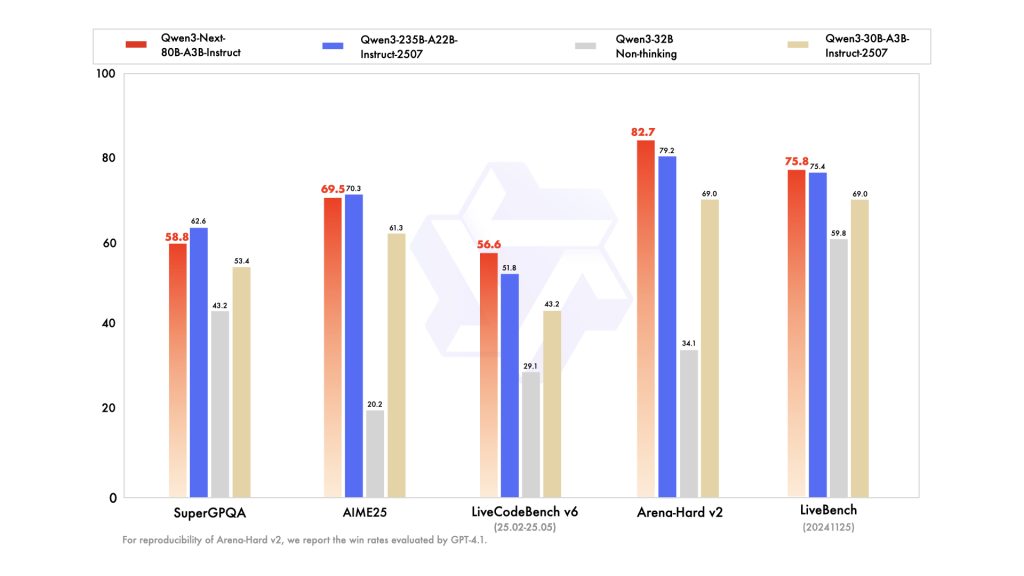

The design choices are carefully balanced to retain strong performance: for example, the 80B Qwen3-Next matches or exceeds the quality of the much larger Qwen3-235B-A22B model (itself an MoE model) on many benchmarks, thanks to these efficiency gains. We’ll next explore how these architectural features translate into improved reasoning and agentic capabilities.

Reasoning Enhancements (Long-Context & Planning)

One of Qwen3-Next’s core strengths is its ability to perform complex reasoning over long contexts and to engage in multi-step “agentic” problem solving. The model has been explicitly optimized for “thinking” – i.e., generating and following chains of thought – and can maintain coherence over extremely long dialogues or documents.

Thinking Mode Integration: Unlike some systems that use separate models or prompts for chain-of-thought reasoning, Qwen3-Next supports a unified approach. The Qwen3 series introduced an internal thinking mode for complex multi-step reasoning and a non-thinking mode for direct responses, all within one model. In practice, this means the model can dynamically switch into a step-by-step reasoning process when faced with a complex query, then produce a final answer – without the developer having to swap to a different “solver” model.

In the Qwen3-Next-80B-A3B-Thinking model variant, the chain-of-thought is even made explicit: it outputs special <think>...</think> blocks that contain its intermediate reasoning steps. These can be logged or parsed by an agent framework (and omitted from the user-facing answer). The Instruct variant of Qwen3-Next runs in non-thinking mode and does not emit <think> blocks (it only gives the final answer). The integration of thinking mode enables Qwen3-Next to handle multi-hop questions, complex logical puzzles, or planning tasks much more effectively, as it can allocate “mental scratchpad” space for working through the problem.
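An agent framework typically separates the reasoning from the user-facing answer before displaying or logging a response. The helper below is a minimal sketch of that step; `split_reasoning` is a hypothetical name, and it assumes at most one reasoning block whose closing tag is `</think>` (the opening tag is treated as optional, since some chat templates insert it on the prompt side).

```python
def split_reasoning(text):
    """Split a model response into (reasoning, answer).

    Returns an empty reasoning string when no </think> tag is present.
    """
    head, sep, tail = text.partition("</think>")
    if not sep:
        # No reasoning block: the whole response is the answer.
        return "", text.strip()
    # Drop the opening tag if the response includes it.
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()


reasoning, answer = split_reasoning("<think>2 + 2 = 4</think>\nThe answer is 4.")
print("logged:", reasoning)
print("shown to user:", answer)
```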

Agentic Planning: Building on the thinking capability, Qwen3-Next excels at agentic planning and tool use. The model can generate a series of steps or actions to solve a problem, especially when paired with an agent loop that executes those steps. For instance, Qwen3-Next can determine that it should first search for information, then perform a calculation, then formulate an answer – essentially implementing the ReAct-style reasoning (Reason and Act) within its output. The “Thinking” model is particularly tuned for this; it can output intermediate JSON or code that calls a tool (e.g., a search query or a calculator function) and then incorporate the tool’s result into its subsequent reasoning.

In fact, Qwen3’s technical report notes that it supports Chain-of-Thought (CoT) and Tool-Integrated Reasoning (TIR) techniques in certain tasks (e.g., the Qwen2.5-Math model used CoT, Program-of-Thought, and tool use to solve math problems). Qwen3-Next generalizes this approach: it has been trained on data that include structured reasoning and tool usage traces, so it natively “knows” how to plan multi-step solutions and interact with external functions in a text-based manner.

Long-Context Memory: Thanks to its 256K+ token context window, Qwen3-Next is capable of maintaining a form of memory over very long conversations or documents. The model can refer back to details mentioned tens of thousands of tokens earlier, enabling truly extended dialogue or analysis sessions. More importantly, Qwen3-Next is designed to be memory-aware – it can focus on relevant parts of the context and not “drift” over long inputs. This is aided by the Gated Delta Networks in its architecture, which help the model prioritize salient information in very long sequences.

In practical terms, Qwen3-Next can ingest entire books or multi-document knowledge bases and perform reasoning or question-answering across them without external summarization (assuming sufficient computational resources for the context size). The model card reports that Qwen3-Next-80B shows significant advantages on ultra-long context tasks up to 256K tokens compared to earlier models. Developers can therefore build Retrieval-Augmented Generation (RAG) systems where Qwen3-Next reads a large set of retrieved documents (possibly the top 100 results from a search, concatenated) and reasons over them collectively, reducing the need for aggressive truncation of context.

To further extend beyond 256K, Qwen3-Next supports positional scaling strategies. Specifically, the developers validated that using RoPE scaling via YaRN (Yet another RoPE extensioN) can extend context lengths to 1 million tokens. By adding a rope_scaling: {"rope_type": "yarn", "factor": 4.0, ...} in the configuration, and using inference frameworks that support it, the context window can be scaled to approximately 4× the native length (262,144 × 4 = 1,048,576 tokens, roughly 1M). This allows Qwen3-Next to handle extremely large inputs like entire codebases or years of chat history, with the model focusing on local context and slowly updating its internal state as it moves through the text. Such capabilities open the door to new classes of applications, like processing streaming data or performing continuous learning within a session.
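The arithmetic behind the scaling factor is easy to check. The sketch below uses only the two `rope_scaling` keys quoted above; the remaining keys are elided in the source and are deliberately not guessed here. `extended_context` is a hypothetical helper name.

```python
NATIVE_CONTEXT = 262_144  # native window quoted above (256K)

# Only the keys quoted in the text; other keys are elided in the source.
rope_scaling = {"rope_type": "yarn", "factor": 4.0}


def extended_context(native_tokens, scaling):
    """Context length implied by applying the scaling factor to the native window."""
    return int(native_tokens * scaling["factor"])


print(f"{extended_context(NATIVE_CONTEXT, rope_scaling):,} tokens")
```

A factor of 2.0 would give 524,288 tokens by the same calculation, which may be a better fit when inputs rarely approach 1M.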

In summary, Qwen3-Next is built not just to store a lot of context, but to reason intelligently across that context. It merges high-level planning abilities with low-level long-sequence processing. For developers, this means the model can serve as the “brain” of an autonomous agent – handling everything from reading lengthy instructions and documentation, to breaking down tasks into sub-tasks, to calling APIs or tools to get additional data, and then synthesizing a final solution.

Next, we’ll examine how Qwen3-Next specifically facilitates tool use and function calling in these agentic workflows.

Tool Use & Agent-Oriented Capabilities

Qwen3-Next is particularly adept at interfacing with external tools and APIs, making it ideal for building AI agents that can perceive, act, and reason with real-world data. The model’s training and design place heavy emphasis on structured tool invocation. In fact, Qwen3 excels in tool-calling capabilities, according to the developers. To leverage this, Alibaba provides an open-source library called Qwen-Agent, which wraps Qwen3 models with a tool-using agent framework. Qwen-Agent comes with built-in templates and parsers for tool calls, significantly reducing the complexity of writing your own agent prompt from scratch.

Using Qwen-Agent (or a similar agent orchestrator like LangChain or Transformers agents), you can define a suite of tools that Qwen3-Next is allowed to use. Tools might include functions for web search, database queries, computations, code execution (a “code interpreter”), or custom business APIs. Qwen-Agent allows you to declare these tools in a configuration (e.g., an MCP config file or Python list) with each tool’s description and invocation method.

Once configured, the Assistant agent will prompt Qwen3-Next with an augmented instruction set, enabling the model to call a tool by outputting a special formatted message (such as a JSON block or a <tool> tag) that the agent framework intercepts. The framework executes the tool (e.g., fetches a URL or runs some code) and then feeds the tool’s output back to the model for the next step. This loop continues until the model indicates it has a final answer.

For example, with Qwen-Agent you might define a “fetch” tool and a “datetime” tool. Qwen3-Next can then respond to a user request like “Summarize the latest updates from the Qwen blog.” by first emitting a tool call: {"tool": "fetch", "arguments": ["https://qwen.ai/blog/..."]} (the actual format may differ). The agent executes the fetch tool to get the blog content, returns it to the model, and Qwen3-Next then reads it (thanks to long context) and produces a summary. All of this happens in a single coherent chain of thought. The Qwen-Agent documentation snippet shows exactly such a workflow: the user asks for the latest developments of Qwen (with a URL provided), and the agent (bot.run) orchestrates Qwen3-Next to fetch that URL and then respond.
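The loop described above (model emits a tool call, the framework executes it, the result is fed back) can be sketched without any external library. Everything here is a stand-in: `fake_model` plays the role of Qwen3-Next, `fetch` is a stub that performs no network access, the URL is a placeholder, and the JSON shape follows the illustrative format in the text rather than Qwen-Agent's real wire format.

```python
import json


def fetch(url):
    """Stub tool: a real implementation would download the page."""
    return f"<contents of {url}>"


TOOLS = {"fetch": fetch}


def fake_model(messages):
    """Stand-in for the LLM: request one fetch, then answer from the tool result."""
    if not any(m["role"] == "tool" for m in messages):
        return json.dumps({"tool": "fetch", "arguments": ["https://example.com/blog"]})
    return "Summary: " + messages[-1]["content"]


def run_agent(user_request, model=fake_model, max_steps=5):
    """Alternate between model turns and tool executions until a plain-text answer appears."""
    messages = [{"role": "user", "content": user_request}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append({"role": "assistant", "content": reply})
        try:
            call = json.loads(reply)
        except json.JSONDecodeError:
            return reply  # not JSON, so treat it as the final answer
        if not isinstance(call, dict) or "tool" not in call:
            return reply
        result = TOOLS[call["tool"]](*call["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("Summarize the latest updates from the blog."))
```

The `max_steps` cap is a design choice worth keeping in any real loop, since a model that keeps requesting tools would otherwise never terminate.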

Under the hood, Qwen3-Next has been fine-tuned to understand function calling syntax. It can output JSON or code that represents a function call when appropriate. In Hugging Face Transformers terms, Qwen3-Next’s Thinking model can act like a ReAct agent, producing reasoning and an action (function call) in a single response.

Alibaba’s implementation uses special tags or formats which Qwen-Agent encapsulates, so you don’t necessarily see raw JSON in the prompt – the library handles prompt templating and response parsing for you. The key point for developers is that Qwen3-Next is capable of deciding which tool to use and when, guided by either few-shot examples or system instructions. This greatly simplifies building autonomous systems: the model itself plans the API calls or database queries needed to fulfill a complex task, rather than requiring all logic to be hard-coded.

Some notable tool-use features of Qwen3-Next and its ecosystem include:

- Built-in Tools: Qwen-Agent provides some built-in tool implementations such as a code_interpreter (to execute Python code in a sandbox) and possibly others like mathematical solvers. These are readily available for the model to use, expanding what it can do out-of-the-box. For example, for coding tasks, the model might choose to run a code snippet using the code interpreter tool and then use the result in its answer – enabling a form of program-of-thought verification.

- Custom Tools: Developers can integrate custom tools by defining them in the config or registering them with the agent. Qwen3-Next is flexible – as long as the tool is described clearly (what it does, input/output format), the model can learn when to invoke it. This means you can hook Qwen3 up to internal APIs, cloud services, or hardware devices, essentially turning it into a controller for your environment.

- Multi-step Tool Chains: Qwen3-Next can perform multi-step tool chains within a single query. It might call a search tool multiple times with different queries as it hones in on an answer, or call one tool to get data and another to analyze it. The agentic planning ability combined with long context allows it to retain intermediate results from one tool call and feed them into the next action.
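Since custom tools depend on clear descriptions, one common pattern is to keep the callable, its signature, and its description together in a registry and render that registry into the system prompt. The sketch below is a generic illustration; the `tool` decorator, `REGISTRY`, and `describe_tools` are hypothetical names and do not reflect Qwen-Agent's actual registration API.

```python
import inspect

REGISTRY = {}


def tool(description):
    """Decorator that records a function, its signature, and a description."""
    def decorator(fn):
        REGISTRY[fn.__name__] = {
            "fn": fn,
            "description": description,
            "signature": str(inspect.signature(fn)),
        }
        return fn
    return decorator


@tool("Return the sum of two numbers.")
def add(a: float, b: float) -> float:
    return a + b


@tool("Return the text converted to upper case.")
def shout(text: str) -> str:
    return text.upper()


def describe_tools():
    """Render the registry as a bullet list suitable for a system prompt."""
    return "\n".join(
        f"- {name}{spec['signature']}: {spec['description']}"
        for name, spec in sorted(REGISTRY.items())
    )


print(describe_tools())
```

Keeping the description next to the implementation makes it harder for the prompt and the code to drift apart as tools evolve.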

Overall, Qwen3-Next serves as a powerful agent engine: it can combine textual reasoning with external actions fluidly. This opens up advanced use cases like Autonomous Research Agents (browsing and aggregating information), DevOps assistants (monitoring systems and executing commands), or Workflow automators (orchestrating multi-API processes) – all driven by natural language goals given to the model. In the next sections, we will demonstrate how to integrate Qwen3-Next in practice, including how to run the model, call it via API, and craft prompts to utilize these capabilities.

Performance Considerations & Scaling

Deploying a model as advanced as Qwen3-Next requires understanding its performance characteristics and resource requirements. Despite its 80B size, Qwen3-Next is engineered for efficient scaling – both in training and inference – but one must still plan for substantial computational resources when using it at full capacity.

Resource Requirements: Qwen3-Next-80B-A3B in its native form is a large model that typically requires multiple high-end GPUs for fast inference. The 80B with MoE architecture is somewhat different from a dense 80B model: the model is split into many expert sub-networks. In practice, running the model with all 512 experts will likely be distributed across at least 8 GPUs (e.g., 8×80GB A100 or H100). In fact, the model’s performance scales with GPU interconnect bandwidth – NVIDIA’s technical report notes that the Blackwell-generation NVLink fabric (1.8 TB/s) is ideal to handle the MoE communication overhead.

If you deploy on a single GPU or a machine without such interconnects, the MoE routing could become a bottleneck. That said, if needed, one can run a smaller Qwen3 model (like an 8B or 14B dense model from the Qwen3 series) for development and then scale up to 80B for production. There are also intermediate models: e.g., a Qwen3-32B dense or a Qwen3-30B-A3B MoE variant exists, which could be more tractable to run.

Memory-wise, the weights of an 80B-parameter model occupy roughly 160GB in 16-bit precision (80 billion parameters × 2 bytes), before counting the KV cache and activations. Note that all experts must be resident in memory even though only about 3B parameters are active per token. Qwen3-Next provides optimized checkpoints, including an FP8 quantized version on Hugging Face (noted as “-FP8” model variants). FP8 is a format supported on Hopper (H100) GPUs that can drastically reduce memory usage and increase speed, with minimal impact on model quality. Using the FP8 variant (or an 8-bit quantization via Transformers library on other GPUs) roughly halves the weight footprint to about 80GB, and 4-bit quantization brings it to about 40GB, making it feasible to experiment on one or two large GPUs.
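A back-of-the-envelope, weights-only estimate (parameter count × bytes per parameter) is a useful sanity check on memory figures. The helper name `weight_memory_gb` is illustrative, and the estimate ignores KV cache, activations, and framework overhead, so real usage will be higher.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Weights-only memory in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


TOTAL_PARAMS = 80e9  # all experts must be loaded, not just the active ones

for label, bits in [("16-bit (BF16/FP16)", 16), ("8-bit (FP8/INT8)", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gb(TOTAL_PARAMS, bits):.0f} GB")
```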

Moreover, Qwen3-Next can be loaded with device_map="auto" in Transformers to span multiple GPUs automatically (as shown in the code example below), or served via distributed inference frameworks.

Inference Throughput: Thanks to the MoE and hybrid attention design, Qwen3-Next can achieve very high token throughput compared to dense models of similar quality. For instance, Qwen3-Next-80B-Instruct matches the much larger Qwen3-235B-A22B model’s performance on many benchmarks while using 10× less compute per token for contexts beyond 32K. In internal benchmarks, the base 80B (non-instruct) outperformed the older Qwen3-32B dense model with only 10% of the training cost and 10× faster inference on long inputs. However, to realize these benefits, one should use optimized inference backends.

The developers recommend using vLLM or SGLang for serving Qwen3-Next. These frameworks support features like continuous batching, efficient context handling, and possibly the multi-token generation (MTP) that vanilla Hugging Face generation does not yet fully support. By using an optimized server, you can achieve significantly higher throughput (tokens/sec) and handle the 256K context without running into slowdowns that a naive implementation might have.
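Once a server such as vLLM is running, it can be queried over its OpenAI-compatible REST endpoint. The sketch below uses only the standard library. The base URL (`http://localhost:8000/v1`) assumes a local server on vLLM's default port, and the helper names `build_chat_request` and `chat` are hypothetical; adjust both to your deployment.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # ASSUMPTION: local server on the default port
MODEL = "Qwen/Qwen3-Next-80B-A3B-Instruct"


def build_chat_request(prompt, max_tokens=512):
    """Build (but do not send) a chat-completions request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a server running, `print(chat("Give a one-sentence summary of MoE models."))` would return the model's reply; separating request construction from sending keeps the payload easy to inspect and test.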

Scaling Context: Another consideration is how throughput scales with longer contexts. Qwen3-Next’s linear attention means that memory and compute scale roughly linearly with input length, but the constant factors are still significant. Processing 100K+ tokens will consume a lot of time and memory. Therefore, for ultra-long contexts, you might want to adjust the context length limit at deployment. The official note suggests using a smaller max context (like 32K or 64K) if your server struggles to even start at 256K.

You can configure the model with a lower maximum context length (for example, a lower max_position_embeddings in the config) to save memory when ultra-long context isn’t needed, and only enable the full length for specific cases. Optimized kernels also help: the flash-linear-attention project provides fast implementations of linear attention, which can speed up the linear attention layers significantly and improve long-context throughput.

Parallelism: Qwen3-Next can benefit from multi-GPU and even multi-node inference thanks to its MoE structure. Since different experts can reside on different GPUs, and only a subset need to be active per token, the model naturally lends itself to parallel execution – provided the framework supports it. SGLang and NVIDIA’s NIM containers have specifically integrated Qwen3-Next to run in parallel across an NVIDIA cluster. For most enterprise users, deploying via Docker or Kubernetes with these optimized containers will yield the best performance. If using Hugging Face Transformers directly, device_map="auto" spreads the layers across the available GPUs; for tensor or expert parallelism a dedicated serving framework is usually the better fit, and efficient inter-GPU communication remains important for the MoE layers.

In summary, Qwen3-Next brings enterprise-grade performance but should be deployed with enterprise-grade infrastructure. Use 8-bit or 4-bit quantization for development on smaller GPUs, but switch to FP16/FP8 on multi-GPU servers for production to get maximum speed. Leverage specialized inference engines to exploit the model’s design fully – for example, only those can enable multi-token parallel generation and fused attention kernels that give Qwen3-Next its edge.

When scaled properly, Qwen3-Next delivers faster generation than many smaller LLMs on long texts, due to its algorithmic efficiency. Next, we’ll touch on the model’s multilingual and multimodal capabilities, which further broaden the scope of applications.

Multilingual & Multimodal Support

Multilingual Proficiency: Qwen3-Next inherits and extends the strong multilingual foundation of the Qwen family. The earlier Qwen2.5 supported ~29 languages; Qwen3 expanded that to 119 languages and dialects, making it one of the most linguistically versatile open models. This includes all major languages (English, Chinese, French, Spanish, Arabic, etc.) and many low-resource or regional languages. The model was trained on a colossal 15 trillion token dataset spanning diverse languages and domains, which yields excellent cross-lingual understanding and translation ability.

Benchmarks from the Qwen3 report show top-tier performance in multilingual evaluation: for instance, on a benchmark like MMLU-ProX (which includes non-English knowledge tests), Qwen3-Next-80B achieves ~76.7% vs. ~79.4% for a 235B model – a very competitive showing. It also does well on tasks like INCLUDE (a multilingual benchmark of regional knowledge) with ~79% accuracy. In practical terms, this means you can converse with Qwen3 in your language of choice, or even mix languages. It can follow instructions in one language and answer in another if asked (e.g., “Read this Chinese text and summarize in English”). The model’s large context also allows it to, say, translate or analyze entire documents in foreign languages in one go. For enterprise use, this broad language support is critical – Qwen3-Next can be used to build assistants and agents that serve a global user base with minimal performance disparity across languages.

One caveat: while the model is multilingual, certain languages or dialects with limited training data might still have lower fluency or accuracy. However, Qwen3’s expansion to 119 languages indicates a significant effort in covering even low-resource languages. In any case, developers should test the model on specific languages of interest.

Fine-tuning or few-shot prompting can further improve output in a target language (for example, providing a few examples of QA in a less common language to adapt style). Also note that extremely long context tasks in multilingual settings might require careful prompt design to keep the model on track if multiple languages are present (due to potential code-switching or confusion if instructions and content languages differ widely).

Multimodal Capabilities: In its current release, Qwen3-Next-80B (Instruct/Thinking) is a text-only model. However, Alibaba has demonstrated multimodal extensions in the Qwen lineup, and similar capabilities are expected in the Qwen3 generation. Notably, Qwen2.5-Omni-7B was an end-to-end multimodal model that could handle text, images, audio, and video inputs, and even generate text or speech outputs. This suggests that the architecture is designed to be modality-flexible. The Hugging Face collection indicates a Qwen3-VL (vision-language) and Qwen3-Omni in the works, which likely will combine Qwen3’s language understanding with image and audio processing. For example, Qwen3-VL might allow image inputs (for captioning or visual QA), given that the Qwen2.5-VL models existed earlier. Qwen-Image is also listed, possibly a dedicated image generation model or an image understanding module.

While details are emerging, we can infer that multimodal workflows with Qwen3 will involve connecting the LLM with vision or audio encoders/decoders. If Qwen3-Omni follows Qwen2.5-Omni’s path, a single Qwen3-Omni model might natively accept multiple input types: e.g., an image and a question about it, or an audio clip for transcription, etc. In enterprise scenarios, this opens up use cases like processing scanned documents (vision + text), voice assistants (speech + text), or even video analysis (with a sequence of image frames and subtitles as context).

Currently, to use Qwen3-Next in a multimodal pipeline, one would integrate external models for the non-text part. For instance, use an OCR to get text from an image, then feed into Qwen3 for reasoning. Or use a speech recognizer to get text from audio, then let Qwen3 analyze it. As the Qwen3-VL and Omni models become available, these steps will condense. Alibaba’s research indicates the Omni model is end-to-end, meaning the same model can directly handle raw modalities.

Multimodal Output: On the output side, Qwen3-Next (text model) generates text only. But a companion model could generate speech (text-to-speech) or even images (there’s mention of Qwen3-Next being integrated in a platform that also does image generation). It’s plausible that Qwen3-Next could control an image generation tool via its agentic abilities – e.g., calling a “draw” tool with a description to create an image. This kind of multimodal orchestration is a powerful pattern: the LLM plans tasks that involve different modalities handled by specialized models.

In summary, Qwen3-Next is multilingual out-of-the-box, making it suitable for global applications. It’s also multimodal-ready: while the base model is text, it’s positioned within an ecosystem that handles vision, audio, and more. Developers should keep an eye on the release of Qwen3-VL and Qwen3-Omni for direct multimodal tasks. In the meantime, Qwen3-Next can serve as the “language reasoning core” that ties together various modality-specific modules in an agent. Next, we discuss concrete use cases that leverage these capabilities in real-world systems.

Core Developer Use Cases

Qwen3-Next’s advanced features unlock a wide range of high-impact use cases for developers. Below, we outline some core scenarios and how Qwen3-Next fits in:

Autonomous AI Agents: Qwen3-Next can power agents that plan and act to accomplish open-ended goals. Its agentic planning and tool-use skills mean you can build, for example, a research agent that takes a topic, searches the web, reads numerous articles (using long context), and synthesizes a report. The Thinking mode yields transparency in the agent’s reasoning steps, which is crucial for debugging and reliability. Qwen3-Next can maintain a complex agenda, remember past steps, and adapt on the fly, making it suitable for autonomous agents in environments like customer support bots that query databases or DevOps agents that monitor systems and execute commands.

Complex Enterprise Automation Workflows: In enterprise settings, tasks often span multiple systems and APIs. Qwen3-Next can serve as an intelligent orchestrator in RPA (Robotic Process Automation) or workflow automation. For instance, consider an automation: “When a support ticket is filed, summarize it, check internal knowledge base for similar issues, and draft a response.” A Qwen3-Next-based system could handle this end-to-end: read the ticket (long text), search the internal KB (via tool use), aggregate information (multi-step reasoning), and produce a formatted response. All these steps can be encoded as a single prompt/response cycle with Qwen3’s agent capabilities. Its long context ensures it can handle large forms or documents involved in enterprise processes.

Coding Assistants & System Design Reasoning: Qwen3-Next is highly capable in coding tasks (it outperforms older models on coding benchmarks like HumanEval and multi-language code tests). This makes it a great backend for an AI pair programmer or code review assistant. It can understand very large code files or multiple files given its context size (imagine pasting a 50k-line log or codebase excerpt for analysis). Moreover, with the Qwen3-Coder variant (a model fine-tuned specifically on code, likely available in the Qwen3 family), you can get even more specialized performance. Qwen3-Next can also reason about system architecture: e.g., you can prompt it with a description of a system and ask for threat modeling, or have it generate system design diagrams (via a tool to draw diagrams from text). Its planning ability means it can break down a complex coding task into steps – helpful for tasks like generating code, testing it (via the code execution tool), then fixing errors iteratively.

Backend Orchestration in AI Platforms: Qwen3-Next can act as the “brain” in a larger AI platform, orchestrating other models and services. For example, in a multi-agent setup, Qwen3-Next could be the high-level planner that delegates subtasks to specialized models: a vision model for image analysis, a smaller LLM for quick replies, etc. It can keep track of the overall conversation or workflow state (thanks to long context) and ensure consistency. In an AI-driven backend, Qwen3 might take user instructions and then generate a sequence of API calls to other microservices (similar to how tools are invoked). This is particularly useful for building natural language interfaces to complex software systems: the user says what they want, and Qwen3-Next generates the procedure to do it by calling backend functions.

Retrieval-Augmented Generation (RAG) with Structured Reasoning: Qwen3-Next is a prime candidate for RAG systems where the model must find and integrate information from external data sources. Because it can loop over tool use, you can implement a structured retrieval process. For instance, given a hard question, the model can issue a series of vector database queries (via a tool) for different facets of the question, read all retrieved passages (which could sum to 200k tokens, all held in context), analyze them, and produce an answer citing sources. It effectively can perform multi-hop retrieval: find something, read it, that spurs another query, and so on, within one session. The high context limit also reduces the need to pre-truncate or select top-3 passages; you could feed it dozens of relevant documents and let it figure out which parts matter. This yields more accurate and comprehensive answers in domains like legal, academic, or financial QA, where you want the model to consider all relevant data before answering.
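Even with a very large window, a RAG pipeline still needs to decide which retrieved passages fit in the prompt. The sketch below packs passages greedily by relevance score under a token budget. `approx_tokens` is a crude whitespace-based stand-in for the model's tokenizer, and all names and sample data are illustrative assumptions.

```python
def approx_tokens(text):
    """Crude token estimate; a real pipeline should count with the model's tokenizer."""
    return len(text.split())


def pack_documents(docs, budget_tokens):
    """Greedily select (score, text) passages, highest score first, within the budget.

    Passages that would exceed the remaining budget are skipped, not truncated.
    """
    packed, used = [], 0
    for _score, text in sorted(docs, key=lambda d: d[0], reverse=True):
        cost = approx_tokens(text)
        if used + cost > budget_tokens:
            continue
        packed.append(text)
        used += cost
    return packed, used


retrieved = [
    (0.9, "clause 4 limits liability"),
    (0.5, "an unrelated paragraph about office opening hours"),
    (0.7, "clause 9 covers termination"),
]
context, used = pack_documents(retrieved, budget_tokens=8)
print(used, "tokens used:", context)
```

With a 200K-token budget the same routine simply admits more passages, so the budget becomes a tuning knob for latency and cost rather than a hard limit on recall.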

Research-Grade Analytical Tasks: For data analysts or scientists, Qwen3-Next can be a powerful assistant to analyze large volumes of text or data. You could feed in the content of lengthy reports, or experimental logs, or a year’s worth of news articles, and ask the model to find patterns or summarize trends. It can hold an entire research paper (or several) in context and help with literature review by highlighting connections across them. In fields like medicine, it could review multiple patient records (de-identified) and answer complex queries that require synthesizing across those records. Its strong reasoning on math and logic also helps in scenarios like solving complex word problems, generating hypotheses and checking them against data (if paired with calculation tools). Essentially, Qwen3-Next can serve as an analyst AI that doesn’t back down no matter how much information you throw at it.

These use cases illustrate that Qwen3-Next is not just a chatbot – it’s a general-purpose reasoning engine that can be embedded into pipelines and applications. Developers can harness it to build solutions that were previously infeasible with smaller or less capable models (due to context or reasoning limits). In the next sections, we’ll demonstrate how to get Qwen3-Next running in your environment with code examples, and provide guidance on prompting and deployment.

Python Integration Example

Let’s walk through using Qwen3-Next in Python via Hugging Face Transformers. We assume you have access to the model weights (e.g., downloaded or via Hugging Face Hub). The model is quite large, so ensure you have a suitable hardware setup or use a smaller variant for testing.

First, install a recent version of the Transformers library. At the time of writing, Qwen3-Next support was on the transformers main branch ahead of a tagged release, so installing from source is the safe option:

pip install git+https://github.com/huggingface/transformers.git@main

Now, here’s a Python snippet to load Qwen3-Next-80B and generate text:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-Next-80B-A3B-Instruct"

# Load the tokenizer and model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    dtype="auto",       # use the precision recorded in the checkpoint
    device_map="auto",  # spread layers across available GPUs (CPU as fallback)
)

# Prepare a prompt
prompt = "Explain the significance of the Fourier Transform in signal processing."

# Qwen3 uses a chat format, so we wrap the prompt in a messages structure
messages = [
    {"role": "user", "content": prompt}
]

# Convert to the model's chat format (this applies Qwen's chat template under the hood)
text_input = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
model_inputs = tokenizer([text_input], return_tensors="pt").to(model.device)

# Generate a response
outputs = model.generate(**model_inputs, max_new_tokens=1024)
output_ids = outputs[0][len(model_inputs.input_ids[0]):]  # skip the input prompt tokens
response = tokenizer.decode(output_ids, skip_special_tokens=True)
print(response)

In this example, we use the Instruct model to get a direct answer. The apply_chat_template call formats the message list into the proper prompt text expected by Qwen3 (including system instructions if any, and an end-of-prompt indicator). This is similar to how one would format prompts for ChatGPT or other chat models. The output response would contain Qwen3-Next’s answer about Fourier Transforms, likely a detailed explanation given the model’s knowledge depth.
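To make the template step less opaque, here is a hand-rolled approximation of what the formatted prompt looks like. Qwen chat models use a ChatML-style layout with <|im_start|> and <|im_end|> markers. The helper name to_chatml is hypothetical, and the authoritative template ships with the tokenizer (it may add tool or system handling this sketch omits), so use apply_chat_template in real code.

```python
from typing import Dict, List

def to_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Approximate ChatML rendering; the real template lives in the tokenizer."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n"
        for m in messages
    ]
    if add_generation_prompt:
        # Open an assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)

rendered = to_chatml([{"role": "user", "content": "Hello"}])
print(rendered)
```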

A few things to note:

- Device Mapping: With device_map="auto", Hugging Face will try to place different layers of the model on different GPUs (or on CPU if GPU memory is insufficient). Monitor your RAM/GPU usage; for 80B you ideally want multiple GPUs. If you only have one GPU with enough memory, you can pass device_map="cuda:0" to load the model fully on that GPU. Leaving device_map unset (None, the default) loads the model on CPU, after which you would need to move it with model.to("cuda").

- Precision: dtype="auto" loads the weights in the precision recorded in the checkpoint rather than always choosing FP16. If you have the FP8 model and the hardware (e.g., NVIDIA H100), the FP8 weights can be used as shipped. Otherwise, you can explicitly request a precision with torch_dtype=torch.float16 (spelled dtype= in newer Transformers releases) or try 8-bit quantization via bitsandbytes (load_in_8bit=True, passed through BitsAndBytesConfig in recent releases), though 8-bit might be tricky for MoE models.

- Chat Format: Qwen3 expects a list of messages with roles (“system”/“user”/“assistant”). If you don’t use apply_chat_template, you need to ensure the prompt is formatted correctly yourself. The model card’s quickstart uses this helper, which is convenient. Depending on the template version, it may prepend a default system message if you haven’t provided one, and it adds special tokens to indicate that the assistant should start speaking.

- Generation Parameters: Here we used max_new_tokens=1024. Qwen3-Next can generate very long outputs (thousands of tokens) if asked, so set this to a reasonable number for your use case. Also be mindful of the total context: the sum of input tokens and output tokens should not exceed the context limit (e.g., if you have 250k tokens of input, you can only generate ~12k tokens before hitting 262k total).

- Streaming: In this basic example we just get the full output. For interactive use, you can pass a streamer object to generate via its streamer argument (for example, Transformers’ TextStreamer or TextIteratorStreamer). Alternatively, using vLLM’s server (described next) allows easy streaming of results.
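The context arithmetic in the Generation Parameters note can be made explicit with a small helper. This is a minimal sketch: the function name max_generation_budget is hypothetical, and the limit of 262,144 tokens is the 256K window referred to in this guide.

```python
CONTEXT_LIMIT = 262_144  # 256K-token context window referred to in this guide

def max_generation_budget(prompt_tokens: int, requested: int,
                          limit: int = CONTEXT_LIMIT) -> int:
    """Clamp the requested output length to what still fits in the context."""
    remaining = limit - prompt_tokens
    if remaining <= 0:
        raise ValueError(
            f"prompt uses {prompt_tokens} tokens, which leaves no room under {limit}"
        )
    return min(requested, remaining)

# A 250k-token prompt leaves 262_144 - 250_000 = 12_144 tokens for output.
budget = max_generation_budget(250_000, 16_384)
print(budget)
```

The result can be passed straight to max_new_tokens so that a long prompt never triggers a context overflow at generation time.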

The model’s output should be quite detailed and accurate for technical queries, given Qwen’s strong knowledge (MMLU ~90). If you find the response too verbose or not in the style you want, you can adjust prompts or generation settings (temperature, etc., discussed in Best Practices below).

REST API Example

To integrate Qwen3-Next into a web service or pipeline, you might prefer using a RESTful API. Fortunately, tools like SGLang and vLLM allow you to deploy Qwen3-Next as an OpenAI-compatible API endpoint. This means you can use the same API calls as you would with OpenAI’s chat completion API, but hitting your own server.

Let’s assume you have deployed Qwen3-Next on localhost:8000 with an OpenAI-like API (for instance, running SGLang serving, which by default uses the /v1/chat/completions route). We can then query it with a simple HTTP request. Here’s an example using Python’s requests library:

import requests

API_URL = "http://localhost:8000/v1/chat/completions"
headers = {"Content-Type": "application/json"}  # add an Authorization header if your server requires it

# Construct the payload following the OpenAI chat completions format
payload = {
    "model": "Qwen3-Next-80B-A3B-Instruct",
    "messages": [
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": "Write a Python function to check if a number is prime."}
    ],
    "temperature": 0.7,
    "top_p": 0.9,
    "max_tokens": 256,
    "stream": False,  # set True for streaming chunked responses
}

# json=payload serializes the body; the timeout is generous because generation can be slow
response = requests.post(API_URL, headers=headers, json=payload, timeout=300)
response.raise_for_status()  # fail fast on HTTP errors
result = response.json()
print(result)

If the server is running correctly, result will be a JSON dict similar to OpenAI’s response format, for example:

{
  "id": "chatcmpl-abc123...",
  "object": "chat.completion",
  "created": 1700000000,
  "model": "Qwen3-Next-80B-A3B-Instruct",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "```python\ndef is_prime(n):\n    if n < 2:\n        return False\n    for i in range(2, int(n**0.5)+1):\n        if n % i == 0:\n            return False\n    return True\n```"
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {"prompt_tokens": 20, "completion_tokens": 35, "total_tokens": 55}
}

This JSON contains the assistant’s answer in choices[0].message.content. In this case it produced a Python code block for the prime-checking function. You can then parse or use this content as needed in your application.
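As an illustration of that parsing step, the sketch below pulls fenced code out of the assistant message. The helper name extract_code_blocks and the sample result dict are hypothetical. The regular expression assumes the model wraps code in triple-backtick fences, which is common but not guaranteed.

```python
import re
from typing import List

FENCE = "`" * 3  # triple backtick, built programmatically to keep this example readable

def extract_code_blocks(content: str) -> List[str]:
    """Return the bodies of all fenced code blocks in a chat message."""
    pattern = re.compile(FENCE + r"[\w+-]*\n(.*?)" + FENCE, re.DOTALL)
    return [body.strip("\n") for body in pattern.findall(content)]

# Hand-written stand-in for a server response in the OpenAI chat format.
result = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": f"Here you go:\n{FENCE}python\ndef add(a, b):\n    return a + b\n{FENCE}",
            }
        }
    ]
}

content = result["choices"][0]["message"]["content"]
blocks = extract_code_blocks(content)
print(blocks[0])
```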

A few tips for using the REST API approach:

- Deployment: As mentioned, you can use SGLang or vLLM to host the model. With SGLang, you might run a command like python -m sglang.launch_server --model-path Qwen/Qwen3-Next-80B-A3B-Instruct --port 8000 (the exact entry point and flags depend on your SGLang version). vLLM has a similar command, vllm serve Qwen/Qwen3-Next-80B-A3B-Instruct --port 8000, to serve models behind an OpenAI-like API. Ensure your model weights are accessible (downloaded, or provide a Hugging Face token if needed).

- Model parameter: The API expects a model name. Use the exact name you have deployed. In our case, since we loaded the instruct model, we specify "model": "Qwen3-Next-80B-A3B-Instruct". Servers often register the model under the full path you launched it with (Qwen/Qwen3-Next-80B-A3B-Instruct) unless you configure a served name; querying the /v1/models route shows the names the server accepts. If you deployed the thinking model or a smaller variant, use that name.

- Streaming: By setting "stream": true in the payload, the server sends the response incrementally as server-sent events (SSE). You would then iterate over the response stream in Python. This is useful for real-time applications that want to start showing output before it is fully complete.

- Error handling: If your request is too large (too many tokens) or the server is under-provisioned, you might get an error or timeout. Always check the response status and handle failures accordingly. If using a reverse proxy or cloud function, ensure timeouts are configured to allow long responses.

- Rate limiting: Running Qwen3-Next is expensive. You may want to queue or limit concurrent requests. The OpenAI format has no built-in batch, but frameworks like vLLM will automatically batch multiple incoming requests if possible to improve throughput. Keep an eye on GPU utilization to adjust your server’s concurrency settings.
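For the streaming tip above, the client has to decode the event stream itself. The sketch below assumes the server follows the OpenAI streaming convention: lines of the form data: {json}, text carried in choices[0].delta.content, and a final data: [DONE] sentinel. The function name iter_stream_text and the sample lines are hypothetical.

```python
import json
from typing import Iterable, Iterator

def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(data)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text

# Hand-written sample of what a server might send.
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]
streamed = "".join(iter_stream_text(sample))
print(streamed)
```

With requests, you would send the request with stream=True, iterate over response.iter_lines(), and decode each line to str before passing it to this function.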

Using an OpenAI-compatible endpoint means you can easily integrate Qwen3-Next into existing apps that were built for GPT-3/4. Just change the API base URL and model name. This lowers the barrier to switching to Qwen for cost or openness reasons, while still using familiar APIs.

Prompt Engineering Best Practices

To get the most out of Qwen3-Next, careful prompt engineering is key – even for seasoned developers. Here are some best practices and tips, gathered from Qwen’s documentation and general LLM experience:

- Utilize System Messages: Qwen3 (especially the instruct model) responds to a system role message that can set the context or behavior. For example, including a system prompt like “You are an expert financial analyst AI. Answer with detailed reasoning.” can steer the model’s style and depth. System prompts can also be used to restrict behavior (for alignment/safety) or to provide high-level instructions for tool usage (although Qwen-Agent has its own way of injecting those). By default, if you don’t supply a system message, Qwen’s template might add a generic one. It’s often beneficial to be explicit about the role and persona you want the model to assume.

- Few-Shot Examples: For tasks where format matters (e.g., outputting JSON, or following a specific style guide), providing examples in the prompt can greatly improve consistency. Qwen3-Next has a massive context, so you can easily include a couple of demonstrations. For instance, if you want the model to answer questions with a rationale and then a conclusion, you could prepend: “Q: [question]\nThought: [chain-of-thought]\nA: [final answer]” as an example. However, note that Qwen’s instruct model by default will not show the chain-of-thought unless coerced. If you explicitly want to see reasoning, you might use the Thinking model or instruct it with something like “Let’s think step by step.” (which often triggers CoT behavior). The Qwen docs even mention specialized prompting for math problems and multiple-choice: e.g., “Please reason step by step, and put your final answer in a box like \boxed{final answer}.” or providing a JSON format for the answer in multiple-choice. These help standardize outputs.

- Controlled Sampling: Qwen3-Next, being a very knowledgeable model, can sometimes produce very lengthy outputs. It’s important to set generation parameters thoughtfully. The developers suggest a default Temperature ~0.7, Top-p ~0.8, Top-k 20 for a balance of creativity and coherence. A higher temperature (e.g., 1.0) might be used for creative tasks or when you want varied suggestions (but with risk of nonsense for factual queries). For deterministic needs (like coding answers), lower temperature (0 to 0.3) is better. They also mention using presence_penalty between 0 and 2 to avoid repetition loops. If you find the model repeating or rambling, a small presence penalty (like 1.0) can reduce that, but test carefully since high values can make it change topic or language oddly (the note warns of “language mixing” with too high penalty).

- Output Length Management: Always set max_new_tokens or an equivalent to a reasonable limit. Qwen3 can generate extremely long answers if the prompt implies it should (for instance, “Write a detailed book on X” could make it go on for tens of thousands of tokens). If you only want a summary paragraph, either explicitly ask for brevity or cap the tokens. The Qwen team recommends an output length of ~16k tokens for “most queries” for instruct models, which is already quite long. In interactive scenarios, you’ll typically want much less. Also consider using stop sequences if needed (like specifying the assistant should stop when it outputs a certain token or pattern). The model likely has an end-of-assistant marker in its format, but since we use it through controlled APIs, an explicit stop isn’t usually required unless you have a custom multi-turn format.

- Standardize and Validate Outputs: For applications where the output format is critical (JSON, code, etc.), incorporate instructions in the prompt to only output that format. For example: “Output only a JSON object with the following fields… No extra commentary.” Qwen3-Next is pretty good at following structure (it was trained to generate structured outputs and JSON), but reminding it in the prompt helps. After generation, always validate the format (e.g., parse the JSON to ensure it’s valid, or run generated code in a sandbox to ensure it’s syntactically correct). If the output fails validation, you can feed it back with a system message saying “The output was not valid JSON, please correct it.”; the model is capable of self-correcting in a second pass.

- Long Context Prompts: If you provide a very long context (say a 100k token document), you should still guide the model’s focus with the prompt. Don’t just dump a huge text and say “What do you think?” – that might lead to superficial or drifting answers. Instead, ask specific questions about the content, or instruct it to divide the content into parts and analyze each part. Utilizing headings or markers in the long text can help (the model can scan for those). For example: “Below is a report with sections 1 through 5. Summarize each section, then give an overall summary.” By structuring your prompt, you make the task easier for the model to follow logically across long inputs.

- Handling Ambiguity and Errors: In a developer setting, sometimes the user’s query might be ambiguous or incomplete. Qwen3-Next, especially in instruct mode, will usually attempt an answer anyway. If you prefer it to ask clarifying questions or list assumptions, you need to prompt it to do so. A system prompt can encourage this behavior: “If the user’s request is unclear, ask clarifying questions before answering.” Similarly, if a tool fails or returns an error during an agent loop, Qwen3 might get confused unless prompted to handle it. You should design your agent prompt to handle tool exceptions (e.g., if a tool returns no result, have the model try an alternative approach or apologize). Testing these edge cases will improve the robustness of your Qwen3-powered system.

- Use “Thinking” model for Debugging: If you have access to Qwen3-Next-Thinking, you can use it during development to see the model’s reasoning steps (in <think> blocks), which can be immensely helpful. For instance, if the model gives a wrong answer, the <think> trace might show it made a flawed assumption or skipped a tool invocation. You can then adjust your prompts or provide feedback. In production, you’d use the instruct model for clean outputs, but the thinking model is a great debugging aid.
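The validate-and-correct loop from the Standardize and Validate Outputs tip can be sketched as follows. The ask callable is a hypothetical stand-in for whatever sends messages to the model and returns its reply text. The correction is sent as a user turn here, which is an assumption chosen for simplicity; a system message, as suggested above, would work the same way if your chat template accepts one mid-conversation.

```python
import json
from typing import Any, Callable, Dict, List

Message = Dict[str, str]

def generate_valid_json(ask: Callable[[List[Message]], str],
                        messages: List[Message],
                        max_attempts: int = 3) -> Any:
    """Ask for JSON, and on a parse failure feed the error back for a retry."""
    history = list(messages)
    for _ in range(max_attempts):
        reply = ask(history)
        try:
            return json.loads(reply)
        except json.JSONDecodeError as exc:
            # Show the model its own output plus the parser's complaint.
            history.append({"role": "assistant", "content": reply})
            history.append({
                "role": "user",
                "content": f"The output was not valid JSON ({exc.msg}). "
                           "Reply with only the corrected JSON object.",
            })
    raise ValueError(f"no valid JSON after {max_attempts} attempts")

# Fake model for demonstration: first reply has a trailing comma, second is valid.
canned = iter(['{"name": "x",}', '{"name": "x"}'])

def fake_ask(history: List[Message]) -> str:
    return next(canned)

parsed = generate_valid_json(fake_ask, [{"role": "user", "content": "Return a JSON object."}])
print(parsed)
```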

By following these best practices, you can significantly enhance Qwen3-Next’s performance on your specific tasks. Prompt engineering for such a powerful model is as much about harnessing its strengths as it is about gently constraining its behavior to the task at hand. Next, we address some limitations to be aware of, and then wrap up with a FAQ to clarify common developer questions.

Limitations & Considerations

While Qwen3-Next is a state-of-the-art LLM, it’s important to recognize its limitations and plan around them:

Resource Intensity: As discussed, running Qwen3-Next-80B is non-trivial in terms of compute. Many developers won’t have the hardware to use it locally at full scale. Utilizing cloud GPU instances or Alibaba Cloud’s Model Studio (if available) might be necessary. There are smaller Qwen3 models (e.g., 0.6B, 4B, 8B variants) which are more feasible for local testing but will not have the full capabilities. One strategy is to prototype with an 8B or 4B model and then switch to 80B for final deployment. Also keep an eye on techniques like LoRA fine-tuning or distillation that could produce a smaller model for your domain; Qwen3’s technical report mentions leveraging flagship models’ knowledge to build smaller ones efficiently.

Latency: Even with optimizations, handling a 256K context or producing thousands of tokens will have non-negligible latency. Real-time applications (e.g., an AI assistant on a website) might find this challenging if long contexts are involved. You might need to implement progressive processing: for instance, summarize chunks of a long input with smaller models, then feed summaries to Qwen3 for the final reasoning. Or use streaming to at least start showing output to the user while the rest is generated. Users may not wait minutes for a response, so consider how to simplify tasks (maybe by focusing the context) when low latency is crucial.
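The progressive-processing idea can be sketched as a two-stage pipeline: summarize chunks first, then answer over the summaries. Everything named here is hypothetical. The summarize and answer callables stand in for calls to a small model and to Qwen3-Next respectively, and splitting on whitespace is a crude proxy for token-based chunking.

```python
from typing import Callable, List

def chunk_text(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def progressive_answer(text: str,
                       question: str,
                       summarize: Callable[[str], str],
                       answer: Callable[[str, str], str],
                       max_words: int = 2000) -> str:
    """Summarize each chunk with a cheap model, then reason over the summaries."""
    summaries = [summarize(chunk) for chunk in chunk_text(text, max_words)]
    return answer(question, "\n".join(summaries))

# Trivial stand-ins so the sketch runs without any model.
def first_words(chunk: str) -> str:
    return " ".join(chunk.split()[:3])

def echo_answer(question: str, notes: str) -> str:
    return f"{question} | {notes}"

out = progressive_answer("a b c d e f g h", "Q?", first_words, echo_answer, max_words=4)
print(out)
```

The chunk summaries are independent of each other, so in practice they can be computed in parallel to cut wall-clock latency further.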

Hallucination and Accuracy: Like any LLM, Qwen3-Next can hallucinate – especially on obscure queries or if it doesn’t find relevant info in its context. Its training does give it a huge knowledge base (MMLU ~90 suggests strong factual recall), but be cautious in high-stakes domains. Always have a verification mechanism if possible: e.g., if it provides an answer with sources, double-check those sources (since it could make up a citation). For code, run the code; for math, have it double-check using a calculator tool. The model’s tool use ability can mitigate hallucinations (because it can choose to fetch real data), but it’s not foolproof. In an enterprise scenario, you might combine Qwen3 with strict retrieval so it must base answers only on provided documents (to increase reliability).
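A cheap first line of defense against made-up citations is to check that every source the answer cites was actually supplied. The sketch below assumes a hypothetical convention in which the model cites sources as bracketed IDs such as [doc1]. It only confirms that the ID exists, not that the source supports the claim.

```python
import re
from typing import Dict, List

def unknown_citations(answer: str, sources: Dict[str, str]) -> List[str]:
    """Return cited IDs that do not correspond to any provided source."""
    missing: List[str] = []
    for cited in re.findall(r"\[([A-Za-z0-9_-]+)\]", answer):
        if cited not in sources and cited not in missing:
            missing.append(cited)
    return missing

sources = {"doc1": "Annual report 2023", "doc2": "Q4 earnings call transcript"}
answer = "Revenue grew 12% [doc1]. Margins fell [doc9], as management noted [doc2]."
flagged = unknown_citations(answer, sources)
print(flagged)
```

An answer with flagged IDs can be rejected or sent back to the model with a request to cite only the provided documents.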

Tool Use Constraints: While Qwen3 is great at calling tools, it will only do what it’s prompted/allowed to do. It doesn’t have an internal browse or execute ability without the external loop. This means if you forget to include a tool it needs, it might just stop with an incomplete solution or try to “imagine” the result of a nonexistent tool. It’s crucial to configure the agent with all expected tools and proper descriptions. Also, from a security perspective, be careful what tools you give it access to – for example, if you provide a shell execution tool to the model, ensure it’s sandboxed, as a misprompt or prompt injection could cause the agent to execute harmful commands. Always validate and sanitize user inputs in an agent setting, to prevent prompt injection attacks that could subvert the agent (this is an active area of research in agent safety).
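As one illustration of restricting a shell tool, the sketch below screens model-proposed commands against an allowlist before anything is executed. The allowlist, the set of forbidden characters, and the function names are all hypothetical choices. This is only a first filter and does not replace running the tool inside a real sandbox with limited privileges.

```python
import shlex
from typing import List

ALLOWED_COMMANDS = {"ls", "cat", "grep", "wc"}  # hypothetical read-only allowlist
FORBIDDEN_CHARS = set(";&|`$><\n")              # block chaining, substitution, redirection

def parse_safe_command(command: str) -> List[str]:
    """Return argv for an allowed command, or raise PermissionError."""
    if any(ch in FORBIDDEN_CHARS for ch in command):
        raise PermissionError("shell metacharacters are not allowed")
    argv = shlex.split(command)
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        raise PermissionError(f"command not allowed: {argv[:1]}")
    return argv

def is_allowed(command: str) -> bool:
    """Boolean wrapper; malformed quoting (ValueError) also counts as rejected."""
    try:
        parse_safe_command(command)
        return True
    except (PermissionError, ValueError):
        return False

print(is_allowed("ls -la /tmp"))        # allowed: plain read-only command
print(is_allowed("rm -rf /"))           # rejected: not on the allowlist
print(is_allowed("cat notes; rm -rf /"))  # rejected: contains ';'
```

The returned argv list is meant to be passed to subprocess.run without shell=True, so the command is never interpreted by a shell.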

Alignment and Safety: Qwen3-Next-Instruct has presumably undergone some alignment tuning (like RLHF) to follow instructions and avoid certain content, but as an open model, it may not be as heavily filtered as proprietary models. The existence of a Qwen3-Guard model in the ecosystem suggests a separate moderation model is provided. Developers should implement their own content filters or use Qwen-Guard to intercept any disallowed or sensitive outputs. This is especially important in enterprise use where compliance (e.g., not revealing personal data or avoiding legal advice etc.) is required. Test the model on prompts that might trigger unwanted content (edge cases) and apply safeguards accordingly.

Knowledge Cutoff: Qwen3’s knowledge is up to the data it was trained on (likely 2024 or early 2025 given Qwen2.5 came out in 2024). It won’t know about events after that. For up-to-date info, you must rely on retrieval (tool use with a search engine or database). This is standard, but worth noting – don’t expect it to know the latest news unless you feed that in as context.

Format of Input: Qwen3-Next expects input text (and possibly in future, other modalities). If you have structured data (tables, XML, etc.), the model can handle it (Qwen2.5 was noted to understand tables and JSON well). However, extremely large structured inputs might confuse the model unless asked properly. If you input a long JSON and ask the model to do something, ensure the prompt clearly states what to extract or compute. Also, the model might be more prone to errors near the end of a very long input (context dilution). Strategies like adding key information near the end of the prompt or splitting the task can help maintain accuracy.

Reliability of Extremely Long Generation: While Qwen3-Next can handle a 1M token context with YaRN, the reliability of generation across that entire range isn’t guaranteed. There may be position-wise biases or degradation; community discussions (for example on Reddit) have voiced skepticism about quality holding up beyond 20k to 100k tokens without drift. So, if you truly push to hundreds of thousands of tokens, watch out for the model losing track of earlier content or repeating itself. Ensuring uniform quality over ultra-long ranges is an area of ongoing research.

In essence, treat Qwen3-Next as a powerful but heavy tool – leverage its strengths (long memory, reasoning, tool use) while designing your system to cover its blind spots (factual verification, safety filtering, resource management). With prudent use, you can build solutions that were previously impossible, but don’t skip validation just because the model is advanced.

Developer-Focused FAQs

What’s the difference between Qwen3-Next Instruct and Thinking models?

The Instruct model returns its final answer directly, without visible reasoning. The Thinking model writes its reasoning inside <think></think> tags, exposing its chain-of-thought. This is useful for agent use cases where you want to parse the model’s plan or for debugging. Instruct is generally for end-users, while Thinking is for agent developers. Under the hood, they share the same architecture; the difference is in fine-tuning and output formatting. They can achieve similar final performance on complex tasks, but Thinking might engage in more step-by-step solving (and possibly use a bit more compute per query due to the extra content generated).

What does “80B-A3B” mean in the model name?

80B-A3B indicates 80 billion parameters in total, with ~3 billion active per token. It’s shorthand for the high-sparsity MoE design where only a small fraction of the weights (3B) are used at a time. The actual implementation uses 512 experts with 10 activated, so about 10/512 of the expert parameters are used per token. As a rough estimate, 80B × 10/512 ≈ 1.56B; the remainder up to ~3B likely comes from other layers that are always active. In short, A3B signals the sparse activation feature that makes the model efficient. You might also see references to “235B-A22B” in comparisons; that is Qwen3-235B-A22B, the larger Qwen3 MoE model with 235B total parameters and 22B active, often used as a baseline.

How can I fine-tune or customize Qwen3-Next?

What hardware do I minimally need to run Qwen3-Next?

How does Qwen3-Next compare to models like GPT-4 or others?

Can Qwen3-Next handle images or audio input directly?

What is Qwen-Guard and how do I use it?

How can I optimize inference for long documents?

Is Qwen3-Next suitable for real-time applications (e.g., chatbots)?

We hope this FAQ clears up the practical concerns. Qwen3-Next is indeed an advanced system, and with this guide, you should be equipped to experiment and build with it effectively.

The combination of long-context processing, multi-step reasoning, and tool integration makes it a uniquely powerful platform for next-gen AI applications. Good luck with your Qwen3-Next projects, and happy coding!