Qwen3-Omni is a natively multimodal large AI model that understands text, images, audio, and video and generates both text and natural speech within one unified system. Developed by Alibaba Cloud’s Qwen team, it is an end-to-end foundation model that delivers real-time responses as written text and as spoken audio.

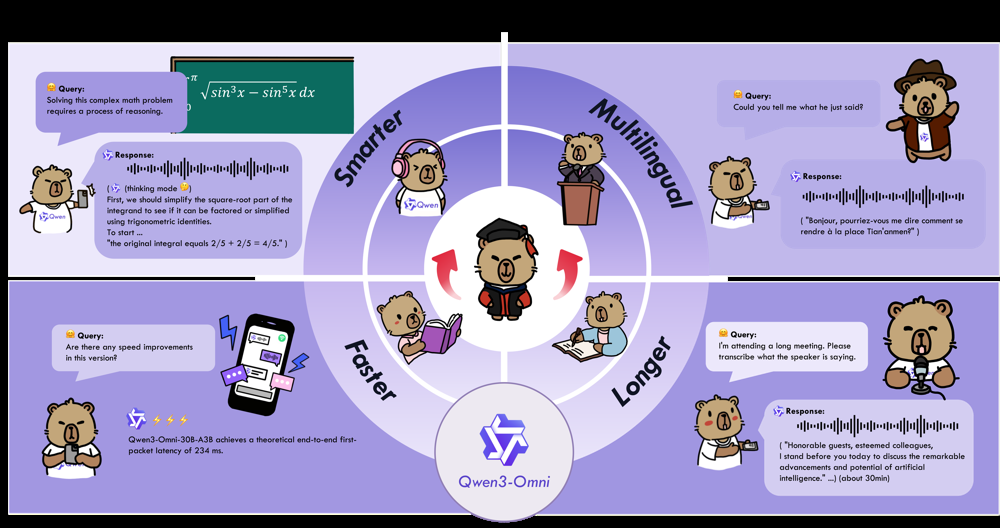

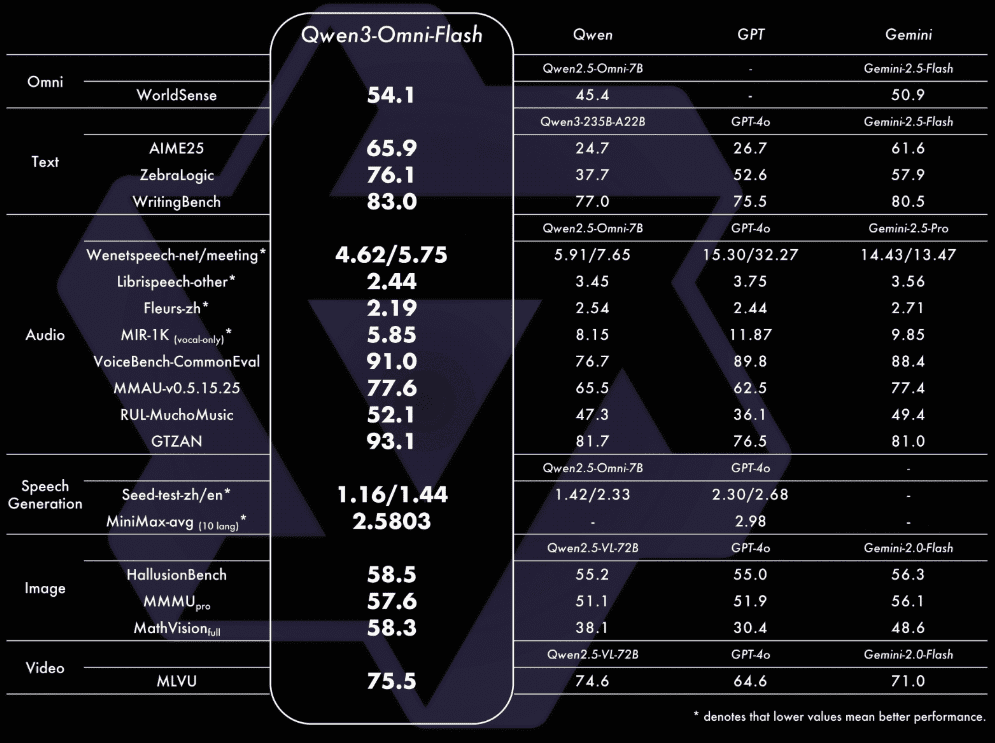

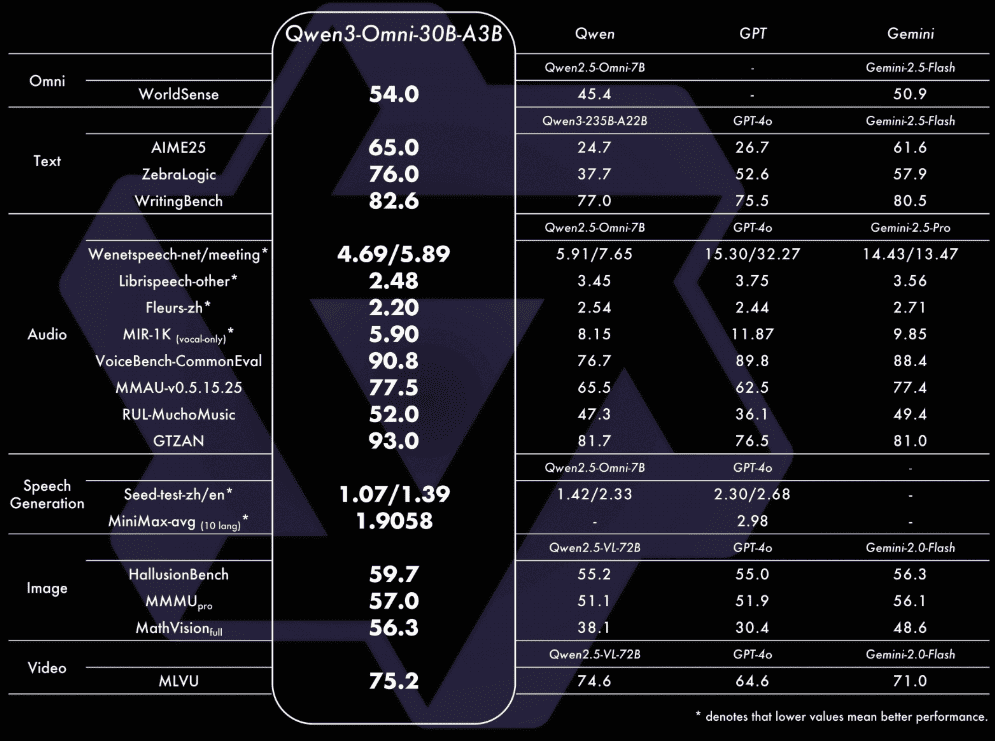

Unlike earlier multimodal models that often sacrificed performance in one modality to excel in another, Qwen3-Omni avoids such trade-offs: it matches state-of-the-art levels in each modality without degrading its language or vision skills. In other words, it performs as well as dedicated text-only or vision-only models of similar size while adding advanced audio and video understanding. It particularly shines in speech and audio tasks, where it reports open-source state-of-the-art results on 32 of 36 audio and audio-visual benchmarks.

The model is open-source (Apache 2.0 licensed) and geared for enterprise use, enabling developers and researchers to build powerful multimodal applications with a single AI system.

At a high level, Qwen3-Omni can be seen as a general-purpose AI assistant that you can talk to, show images to, play audio for, or hand a video, and it will respond intelligently. For example, you could ask it to analyze an image and an audio clip together and answer in one short sentence.

Thanks to integrated training on text, images, audio, and video data, Qwen3-Omni can perform a wide range of multimodal tasks in a unified manner – from chatting about a topic (like a chatbot), to describing what’s in an image, transcribing and translating speech, analyzing video content, or even engaging in a mixed visual-and-audio dialogue with a user. The model supports extremely long inputs (tens of thousands of tokens), making it capable of understanding lengthy documents or extended audio recordings that span up to half an hour or more. In summary, Qwen3-Omni is an advanced AI model that combines text, vision, and audio understanding with multilingual interaction and real-time speech generation, all in one system.

This introduction provides a high-level overview – the sections below will dive into its architecture, supported modalities, key features, integration methods, and usage examples for developers.

Architecture Overview (Thinker–Talker Design)

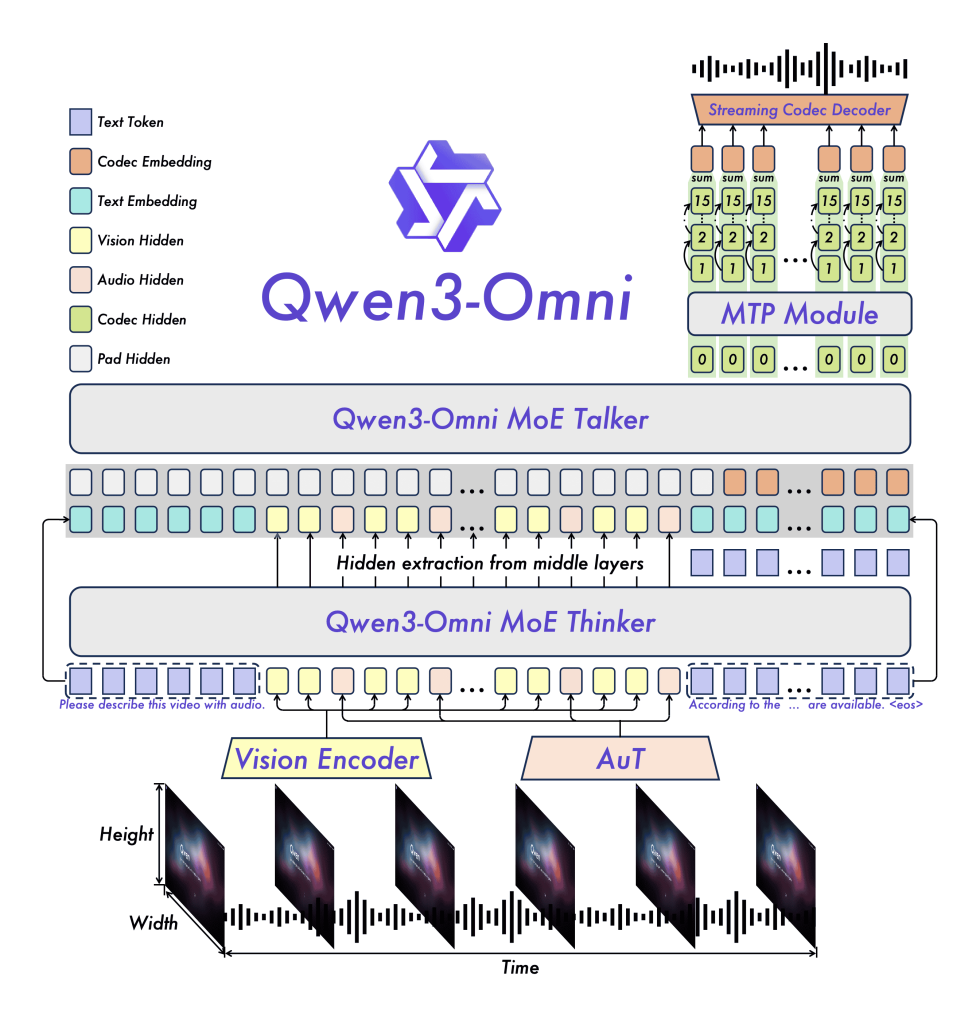

Internally, Qwen3-Omni adopts a novel Thinker–Talker architecture – essentially splitting the model into two cooperating expert modules. The Thinker is the “brain” of the model: it processes input from any modality (text, images, audio, video) and performs the core reasoning and text generation. The Talker is the model’s “voice”: it takes high-level representations from the Thinker and converts them into spoken output in real-time. This design allows Qwen3-Omni to generate fluent natural speech responses on the fly without interrupting the Thinker’s text-based reasoning. The two parts work in tandem during inference – the Thinker produces text (or intermediate tokens) and the Talker simultaneously renders them as audio speech, enabling streaming replies.

Several architectural innovations make this possible. Both the Thinker and Talker are implemented as Mixture-of-Experts (MoE) Transformers, which improves scalability and throughput (allowing high concurrency and faster inference).

For audio processing, Qwen3-Omni introduces a powerful Audio Transformer (AuT) encoder trained on 20 million hours of speech and audio data. This AuT module provides robust audio representations to the Thinker, replacing earlier off-the-shelf encoders (like Whisper) with a more flexible in-house model. On the output side, the Talker uses a multi-codebook decoding scheme: instead of generating raw waveforms (which is costly), it generates tokens in a discrete acoustic codec space. Specifically, it produces one codec frame per step: the Talker predicts the frame's first code, and an MTP (multi-token prediction) module fills in the remaining codebook codes for that frame. A lightweight causal ConvNet then converts these codec frames to the final waveform incrementally.
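The frame-by-frame structure of this decoding scheme can be sketched in a few lines. This is a toy illustration only: `talker_step` and `mtp_fill` are hypothetical stand-ins that use simple arithmetic instead of neural networks, and the codebook count and size are assumed values, not figures from the model.

```python
from typing import List

NUM_CODEBOOKS = 4      # hypothetical value chosen for illustration
CODEBOOK_SIZE = 1024   # hypothetical value chosen for illustration


def talker_step(step: int) -> int:
    """Stand-in for the Talker: one first-codebook code per decoding step."""
    return (step * 37) % CODEBOOK_SIZE


def mtp_fill(first_code: int) -> List[int]:
    """Stand-in for the MTP module: derive the remaining codes of the frame."""
    return [(first_code + k * 101) % CODEBOOK_SIZE for k in range(1, NUM_CODEBOOKS)]


def decode_frames(num_steps: int) -> List[List[int]]:
    """Build one complete multi-codebook frame per step."""
    frames = []
    for step in range(num_steps):
        first = talker_step(step)
        # A frame is ready for waveform synthesis as soon as it is complete.
        frames.append([first] + mtp_fill(first))
    return frames


frames = decode_frames(3)
```

The point of the sketch is that each step yields a full frame, so a downstream vocoder never has to wait for the whole utterance.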

This approach dramatically reduces latency: Qwen3-Omni can start playing back audio as soon as the first codec frame is generated, with a reported first-packet latency as low as ~234 milliseconds. In effect, the Talker is “streaming” out speech even while the Thinker might still be formulating the rest of the answer, making the system feel responsive in voice interactions.
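The interleaving described above can be mimicked with two chained Python generators. This is a minimal sketch, not the real inference loop: `thinker` and `talker` are hypothetical stand-ins, and the "audio" is just the token bytes.

```python
from typing import Iterator, List, Tuple


def thinker(prompt: str, log: List[str]) -> Iterator[str]:
    """Stand-in for the Thinker: yields text tokens one at a time."""
    for token in ["It", " is", " sunny", "."]:
        log.append(f"think:{token}")
        yield token


def talker(tokens: Iterator[str], log: List[str]) -> Iterator[bytes]:
    """Stand-in for the Talker: emits one fake audio chunk per incoming token."""
    for token in tokens:
        log.append(f"speak:{token}")
        yield token.encode("utf-8")


def stream_reply(prompt: str) -> Tuple[List[str], bytes]:
    """Consume the pipeline lazily and record the order of events."""
    log: List[str] = []
    audio = b"".join(talker(thinker(prompt, log), log))
    return log, audio


log, audio = stream_reply("What's the weather?")
```

Because generators are lazy, the log alternates between `think:` and `speak:` entries: the first chunk of speech is produced before the last text token exists, which is the property that keeps perceived latency low.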

Another advantage of the Thinker–Talker split is modular control. Developers can intercept or modify the Thinker’s text output before it goes to the Talker. For example, an external tool or safety filter could post-process the Thinker’s generated text (e.g. correct factual errors or censor sensitive content) and then feed the cleaned text into the Talker for speech synthesis. Because the Talker no longer strictly depends on the Thinker’s raw internal states, we have flexibility to inject or alter the final spoken response.
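A minimal sketch of such an interception step is shown below. The blocklist, the `filter_text` helper, and the `speak` stub are all hypothetical; a real deployment would plug in its own moderation logic and pass the cleaned text to the actual Talker.

```python
import re

BLOCKLIST = {"password", "secret"}  # hypothetical sensitive terms

_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(word) for word in sorted(BLOCKLIST)) + r")\b",
    re.IGNORECASE,
)


def filter_text(text: str) -> str:
    """Redact blocklisted words from the Thinker's text output."""
    return _PATTERN.sub("[redacted]", text)


def speak(text: str) -> str:
    """Stub for the Talker: returns a tag instead of synthesizing audio."""
    return f"<audio:{text}>"


def respond(thinker_text: str) -> str:
    """Filter first, then hand the cleaned text to the speech stage."""
    return speak(filter_text(thinker_text))


result = respond("The password is 1234")
```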

Moreover, the Thinker and Talker each can have their own system prompts and configurations, meaning the style of verbal delivery can be tuned separately from the content of the response. For instance, one could prompt the Thinker to produce a very concise answer, but prompt the Talker to speak it in a cheerful tone or a specific voice. This decoupling is extremely useful for enterprise deployments where consistent persona and tone are desired in speech output.

In summary, Qwen3-Omni’s architecture merges a top-tier multimodal Transformer (the Thinker) with a low-latency speech generator (the Talker). The use of MoE experts, the custom AuT encoder for audio, and the multi-codebook streaming decoder are key engineering features that allow real-time, multimodal interaction without sacrificing accuracy. Figure 2 in the technical report illustrates this design, showing how inputs flow into the Thinker and how the Talker produces streaming audio frame-by-frame. This high-level overview sets the stage; next, we discuss what types of inputs Qwen3-Omni can handle and how it manages them.

Supported Input Types (Text, Images, Audio, Video)

Qwen3-Omni is an omni-modal model – it natively accepts four kinds of inputs: text, images, audio, and video. These can be used in combination (text plus another modality) to pose multimodal questions or tasks. Under the hood, the model uses separate encoders for different data types which all feed into the unified Transformer. Here’s a breakdown of the supported input modalities:

- Text: Qwen3-Omni handles natural language text like any large language model. You can input prompts, questions, or documents in text form and it will process them with its Transformer-based language understanding. The text tokenizer is based on Qwen’s vocabulary (~151k tokens), allowing it to handle a wide range of language content (including code or special characters, if needed). The model supports multi-turn dialogues as well – you can provide a chat history of user and assistant messages in text. This textual interface is fundamental for instruction-following tasks, conversations, and for combining with other modalities (e.g. an image with an accompanying text question).

- Images: Qwen3-Omni can directly analyze and understand images. Given an image input (for example, a JPEG or PNG), the model’s vision encoder (inherited from the Qwen3-VL vision-language model) will convert the image into a sequence of visual embeddings. The vision encoder is derived from SigLIP2 and has ~543M parameters, enabling strong image understanding and even basic video frame processing. With image input, the model can perform tasks like image captioning, object recognition, scene description, and visual question answering. For instance, you can show it a photo and ask “What is happening in this picture?” or “How many people are present?” and get a coherent answer. Qwen3-Omni’s capabilities include advanced visual tasks like OCR (reading text in images) and referring expression comprehension (e.g. grounding an object described by text in the image). The official examples demonstrate Image QA and visual reasoning, where the model answers arbitrary questions about an image’s content.

- Audio: Audio inputs (e.g. WAV/MP3 files) are another strength of Qwen3-Omni. The model can perform automatic speech recognition (ASR), transcribing spoken words into text, across many languages. It also does general audio understanding: for example, classifying sounds, analyzing musical pieces, or detecting emotions/tone in speech. The audio is handled by the AuT encoder, which takes a 128-channel Mel-spectrogram of the input waveform and emits a sequence of audio representations at a 12.5 Hz frame rate for the Thinker to ingest. Impressively, Qwen3-Omni supports very long audio inputs; it can process audio recordings up to 30 to 40 minutes long in one go. This opens up use cases like transcribing entire meetings or podcasts in a single pass. In the model’s released “cookbook” examples, you’ll find demos of speech recognition (multi-language), speech translation (one language’s speech to another’s text or speech), audio captioning (describing a sound clip in words), and even music analysis. For instance, one example has the model listening to a coughing sound (cough.wav) and an image, then answering what it hears and sees.

- Video: Qwen3-Omni extends its vision+audio capability to video inputs (with or without sound). It can analyze video clips by processing frames (via the image encoder) and the audio track (via the audio encoder) in parallel, thanks to a time-aligned multimodal position encoding that keeps audio and visual tokens in sync. This means the model can understand temporal events, for example answering questions about a video’s sequence of actions or describing a scene that unfolds over time. Cross-modal reasoning is a highlight: Qwen3-Omni can align what it hears with what it sees in a video. A demonstrated task is Audio-Visual QA, where the model answers questions like “Who spoke when the glass broke in the video?” by jointly interpreting the video frames and soundtrack. It can also do video captioning (generating a description of a video clip), video scene segmentation, and interactive video dialogues. The model’s training included a mix of images and video data to ensure it learned both static visual understanding and dynamic video comprehension. For example, given silent security camera footage, Qwen3-Omni could summarize the activities; or given a how-to video with narration, it could answer questions by using both the visual steps and spoken commentary.
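To put the audio figures from the list above in perspective, here is a short back-of-the-envelope calculation. It assumes, for illustration, that each 12.5 Hz audio frame occupies one position in the Thinker's input sequence; the exact accounting in the real model may differ.

```python
FRAME_RATE_HZ = 12.5  # audio frames per second, as stated for the AuT encoder


def audio_frames(minutes: float) -> int:
    """Number of audio frames produced for a recording of the given length."""
    return int(minutes * 60 * FRAME_RATE_HZ)


half_hour = audio_frames(30)     # 30 min * 60 s * 12.5 = 22,500 frames
forty_minutes = audio_frames(40) # 40 min * 60 s * 12.5 = 30,000 frames
```

Under that assumption, a 30 to 40 minute recording lands in the range of tens of thousands of sequence positions, which is consistent with the long-input support described earlier.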

It’s worth noting that the hosted API typically expects text plus at most one other modality per query. In practice you will always include some text (like a prompt or question) along with any image/audio/video input to tell the model what to do with it. The open-source version is more flexible: you can provide multiple modalities at once (e.g. one image and one audio clip together with text) in the same conversation turn.
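A mixed-modality turn of this kind is usually expressed as a chat message whose content is a list of typed parts. The sketch below builds such a message as plain Python data. The `type`/`image`/`audio`/`text` keys follow the convention used in the open-source examples, but treat the exact schema as an assumption to verify against the version you install; the file names and the `build_turn` helper are hypothetical.

```python
from typing import Any, Dict, List


def build_turn(image_path: str, audio_path: str, question: str) -> Dict[str, Any]:
    """Assemble one user turn containing an image, an audio clip, and text."""
    content: List[Dict[str, str]] = [
        {"type": "image", "image": image_path},
        {"type": "audio", "audio": audio_path},
        {"type": "text", "text": question},
    ]
    return {"role": "user", "content": content}


conversation = [
    build_turn(
        "cars.jpg",   # hypothetical local file
        "cough.wav",  # hypothetical local file
        "What can you see and hear? Answer in one sentence.",
    )
]
modalities = [part["type"] for part in conversation[0]["content"]]
```

Keeping the text part last mirrors how a person would phrase the request: first the material, then the question about it.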

In one example, the user provides an image of cars, an audio clip of coughing, and asks: “What can you see and hear? Answer in one sentence.” The model successfully combines both inputs to respond (it might say something like “I see several parked cars, and I hear the sound of someone coughing.”) This ability to integrate signals from different modalities distinguishes Qwen3-Omni from unimodal models. Table 1 below lists some representative tasks the model can handle out-of-the-box:

- Speech Recognition & Translation: transcribe spoken audio and translate it (e.g. English speech → Chinese text).

- Image/Video Captioning: generate descriptive captions for images or video clips (e.g. describe what’s happening or summarize scenes).

- Multimodal Question Answering: answer questions that refer to an input image, audio, or video, possibly requiring reasoning over time (for video).

- Document and OCR Analysis: read text from images (scanned documents, photographs of signs) and output the recognized text or answer questions about it.

- Audio Analysis: interpret non-speech audio (identify a musical genre, detect events from sounds, analyze multiple overlapping audio sources).

- Interactive Dialogue with Media: engage in a conversation where the user can speak or show something and the assistant responds conversationally about that media (e.g. “Here’s a picture of a product – what do you think?” followed by a spoken answer).

The supported input types are broad, making Qwen3-Omni a true any-to-any model (any input modality to either text or speech output). Next, we’ll discuss the model’s multilingual capabilities, which is another critical aspect, especially for global enterprise applications.

Multilingual Capabilities

One of Qwen3-Omni’s core strengths is its extensive multilingual support. This model was trained to interact in a large number of languages, both for text and speech. In total, it can handle 119 written languages in text-based interactions – essentially covering the majority of languages with significant web presence or user base. This includes not only English and Chinese (which make up a large portion of the training data), but also languages such as Spanish, French, German, Arabic, Hindi, Russian, Japanese, Korean, and many more. For text inputs, Qwen3-Omni can understand user prompts in these languages and respond appropriately in the same language (or a requested target language).

Beyond text, Qwen3-Omni is also a polyglot in speech. It supports speech recognition (ASR) in 19 languages/dialects, meaning you can give it spoken audio in any of those languages and it will transcribe/interpret it. Supported speech input languages include English, Mandarin Chinese, Korean, Japanese, German, French, Spanish, Portuguese, Italian, Russian, Arabic, Indonesian, Vietnamese, Turkish, Cantonese (Chinese dialect), Urdu, and others.

This breadth is rare among open-source models: it covers both Western and Asian languages, including right-to-left languages like Arabic. Many earlier models were English-centric; Qwen3-Omni was designed for multilingual deployments from the ground up.

On the output side, Qwen3-Omni can generate speech (audio responses) in 10 languages so far. Currently, the speech synthesis (Talker) component is capable of speaking: English, Chinese, French, German, Spanish, Portuguese, Russian, Japanese, Korean, and Italian. So if you ask a question in one of those, it can talk back fluently in that language. For languages outside the 10, the model would still understand the text and could respond in text, but not in audio (since the Talker hasn’t been trained for those voices yet).

The developers note that they plan to expand the speech output to more languages in the future, as the architecture supports it. Even with 10 languages, this is a big improvement over earlier versions (the previous Qwen-Omni had only 2 languages for speech output).
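An application can encode this text-versus-speech split as a simple lookup. The sketch below uses the ten speech-output languages listed above; the `output_modalities` helper is a hypothetical name, and language identification itself is assumed to happen elsewhere.

```python
from typing import List

# The ten languages listed above as supported for spoken output.
SPEECH_OUTPUT_LANGUAGES = {
    "English", "Chinese", "French", "German", "Spanish",
    "Portuguese", "Russian", "Japanese", "Korean", "Italian",
}


def output_modalities(language: str) -> List[str]:
    """Return which response modalities to request for a given language."""
    if language in SPEECH_OUTPUT_LANGUAGES:
        return ["text", "audio"]
    # Outside the speech-output set, fall back to a text-only reply.
    return ["text"]


french = output_modalities("French")
arabic = output_modalities("Arabic")
```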

Another aspect of multilingualism is translation. Qwen3-Omni can act as a translator between many languages. For example, you could provide an audio clip of someone speaking French and ask the model to answer or summarize in English – it will perform speech recognition in French, understand it, and produce an English answer or translation.

The model’s training data likely included multi-language parallel texts and it demonstrates strong cross-lingual transfer. In benchmarks, it was shown to handle tasks like answering questions across different language inputs and outputs (cross-lingual QA) effectively.

From an enterprise perspective, this multilingual proficiency means Qwen3-Omni can be deployed in global contexts – a single model can serve users in different languages or handle content in multilingual documents. For example, an enterprise knowledge assistant built on Qwen3-Omni could answer employee queries in the employee’s native language, or process documents that contain a mix of English and non-English text. The model also allows persona customization in different languages: you can set system prompts to adjust tone or formality appropriate to each language (for instance, polite form in Japanese versus casual in English) and Qwen3-Omni will follow those instructions when generating responses.

Finally, Qwen3-Omni’s speech output comes with multiple voice options. In the cloud API, as of late 2025, there are 17 voice personas available for the Talker (covering different genders, tones, possibly accents). For example, voices named “Ethan” or “Cherry” can be selected for the audio output, as seen in usage examples.

This allows developers to pick a voice that best suits their application or brand. Voice customization combined with multilingual output means you could have, say, a French female voice or an American English male voice for the assistant, etc., to align with user expectations.
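As a rough illustration of voice selection, the sketch below assembles request parameters for an OpenAI-style chat completion call without sending anything over the network. The model identifier and the `modalities` and `audio` field names are assumptions based on common OpenAI-compatible conventions; check the provider's API reference for the exact names before relying on them.

```python
from typing import Any, Dict


def build_speech_request(question: str, voice: str = "Cherry") -> Dict[str, Any]:
    """Build keyword arguments for a chat completion that returns speech."""
    return {
        "model": "qwen3-omni-flash",  # assumed model identifier
        "messages": [{"role": "user", "content": question}],
        "modalities": ["text", "audio"],             # assumed field name
        "audio": {"voice": voice, "format": "wav"},  # assumed field names
        "stream": True,
    }


request = build_speech_request("Bonjour, comment ça va ?", voice="Ethan")
```

Switching persona is then a one-argument change, which makes it easy to map voices to brands or locales in configuration.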

In summary, Qwen3-Omni is truly multilingual by design. It can read and write nearly 120 languages, listen in 19, and speak in 10 – making it a versatile foundation for applications that need to operate across language boundaries. Whether it’s a multilingual customer support agent, a speech translator, or a content analyzer for international media, the model has the linguistic capabilities built-in.

Core Multimodal Reasoning Features

Beyond simply handling multiple input types, Qwen3-Omni is designed to perform advanced reasoning that integrates information across modalities. This is where the model’s “smarts” come into play – it’s not just doing vision or audio tasks in isolation, but combining them and applying high-level reasoning or knowledge.

A major feature in Qwen3-Omni is the introduction of a specialized “Thinking” mode/model for complex reasoning. In technical terms, the Qwen team trained a variant called Qwen3-Omni-30B-A3B-Thinking which equips the Thinker with chain-of-thought prompting and reasoning abilities. When this mode is enabled (either by using the Thinking model weights or toggling a flag in the API), the model will more explicitly reason through problems step-by-step internally, before finalizing an answer. This is especially useful for solving complex tasks like mathematical reasoning, logical inference, or multi-step questions that benefit from intermediate thinking. For example, if given an image of a math problem, the “Thinking” mode might internally work out the solution with step-by-step text (not shown to the user) and then give the final answer.

The Qwen3-Omni technical report notes that they achieved improved multimodal reasoning by mixing unimodal and cross-modal training data and by fine-tuning this Thinking model explicitly to handle any combination of inputs. In practice, this means the model can do things like video reasoning – e.g., watching a short video and answering why something happened, drawing on both visual clues and perhaps textual cues (subtitles or spoken words) if available. It can also handle audio-only reasoning, which might involve understanding context from a long speech (like figuring out the main points of a 30-minute lecture).

Notably, Qwen3-Omni demonstrates cross-modal “synergy” – capabilities that don’t even exist in single-modal models. For instance, it can align audio and video streams to answer temporal questions (who did what when in a video).

It can also connect spoken language with visual context, such as following voice instructions about an image (“The user says: ‘please describe this diagram’” and the model looks at the diagram and does so). The model’s training included a phase where unimodal (text-only) and multimodal data were mixed from early on, which the researchers found was key to avoiding the usual “modality trade-off” problem. As a result, Qwen3-Omni achieves parity in each modality (text, vision, audio) and gains these emergent cross-modal abilities as a bonus.

In evaluations, it excelled particularly in combined audio-visual benchmarks – for example, on 36 audio and audio-visual tasks it set new open-source state-of-the-art on 32 of them. This includes outperforming even some closed models on tasks like audiovisual speech recognition and video question answering.

A concrete example of its multimodal reasoning: using the Image Math demo from the official cookbooks, the model is given a rendered math equation image and asked to solve it. Qwen3-Omni (with thinking mode) can extract the equation via OCR, then reason stepwise to solve the math problem, yielding the answer – effectively demonstrating visual understanding plus logical reasoning.

In an audio-visual dialogue example, the model can watch a video (with audio) and answer questions that require understanding how the audio and visuals relate over time. For instance, consider a video of a person giving a presentation: Qwen3-Omni could be asked “What was the speaker’s main point when the graph was on screen?” – it would use the audio (speech content) to know the main point and the video frames to identify when the graph appeared, combining them to answer.

Another feature is long-context reasoning. Thanks to its very large context window (addressed in more detail later), Qwen3-Omni can ingest and reason about lengthy multimodal inputs, such as a 50-page PDF converted to images or a long meeting recording. It was specifically trained with a long context stage where the maximum sequence length was expanded to 32k tokens, improving its ability to handle long inputs without losing track.

This means the model can maintain coherence and refer back to earlier parts of a conversation or document, enabling more structured workflows (for example, analyzing a whole document section by section, or carrying information across many dialogue turns). Large context also helps in audio – the model can remember what was said 20 minutes ago in a recording and relate it to what is said at the end, which is crucial for summarization or Q&A about the content.

Finally, Qwen3-Omni allows fine-grained control via prompts that can influence its reasoning approach. Developers can use system prompts to nudge the model’s style of reasoning (concise vs detailed), or to request output in a certain format that is easier to parse (like JSON or bullet points). The model is generally good at following instructions for structured outputs when asked, due to its instruction-tuning.

For example, you can prompt: “Analyze these inputs and output the result as a JSON with fields X, Y, Z,” and Qwen3-Omni will attempt to comply. This is very useful in structured workflows where the model’s output needs to feed into another system. It also ties into the next section – tool use and agent integration – where the model can output specific actions.
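Since the model only attempts to comply, downstream code should validate what comes back. Here is a minimal sketch of such a check; the field names and the `parse_model_json` helper are hypothetical, and the raw string stands in for a real model reply.

```python
import json
from typing import Any, Dict

REQUIRED_FIELDS = {"objects", "sounds", "summary"}  # hypothetical schema


def parse_model_json(raw: str) -> Dict[str, Any]:
    """Extract the outermost JSON object from a reply and check its fields."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw[start:end + 1])
    if not isinstance(data, dict):
        raise ValueError("model output is not a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return data


raw_reply = 'Sure: {"objects": ["car"], "sounds": ["cough"], "summary": "ok"}'
parsed = parse_model_json(raw_reply)
```

Trimming to the outermost braces tolerates replies that wrap the JSON in a short preamble, a common failure mode for instruction-following models.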

In summary, the core reasoning features of Qwen3-Omni are its ability to fuse modalities seamlessly, perform step-by-step reasoning with a “Thinking” mode, draw on a long context window, and produce structured, low-hallucination outputs. The last point matters especially in domains like audio captioning, for which the team also released a specialized fine-tuned audio captioner model for detailed sound descriptions.

These capabilities make it not just a passive classifier or Q&A system, but a true AI reasoning engine that can be embedded in complex multimodal tasks.

Agent Integration (Tool Use and Workflow Execution)

Qwen3-Omni is designed to be a tool-augmented AI, meaning it can work in tandem with external tools or functions to extend its capabilities. This is analogous to how some GPT models use “function calling” or plug-ins – Qwen3-Omni can decide to invoke an API or function when needed and incorporate the result into its response.

The model supports an explicit function-calling format in its outputs. In practice, this means developers can define a set of tools (e.g. a calculator, a database query function, a web search function) and if the user’s request triggers it, Qwen3-Omni will output a JSON or structured invocation of that function rather than a normal answer. The calling application can then execute the function and feed the result back to the model for completion of the answer.

For example, suppose a user asks: “What’s the current weather in Paris?” – if integrated with a weather API, Qwen3-Omni might output something like: {"action": "get_weather", "location": "Paris"} instead of a direct answer.

The system would call the get_weather tool, get the data (“15°C and sunny”), and then Qwen3-Omni would generate the final response to the user using that data. This approach yields more accurate and up-to-date information and allows the model to handle tasks it wasn’t explicitly trained on (like looking up real-time info, calculations, etc.) without hallucinating.
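The round trip described above can be sketched as a small loop. Everything here is a stand-in: `fake_model` imitates the model with hard-coded replies, `get_weather` returns a fixed string instead of calling a weather API, and the `{"action": ...}` shape simply mirrors the illustrative example in the text.

```python
import json
from typing import Callable, Dict, List


def get_weather(location: str) -> str:
    """Hypothetical tool; a real one would query a weather service."""
    return "15°C and sunny"


TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def fake_model(messages: List[Dict[str, str]]) -> str:
    """Stand-in for the model: request the tool, then answer with its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return f"The weather in Paris is {last['content']}."
    return json.dumps({"action": "get_weather", "location": "Paris"})


def run_turn(user_text: str) -> str:
    messages = [{"role": "user", "content": user_text}]
    reply = fake_model(messages)
    try:
        call = json.loads(reply)
    except json.JSONDecodeError:
        return reply  # plain-text answer, no tool requested
    if not isinstance(call, dict) or call.get("action") not in TOOLS:
        return reply
    # Everything except "action" is treated as keyword arguments for the tool.
    args = {key: value for key, value in call.items() if key != "action"}
    result = TOOLS[call["action"]](**args)
    messages.append({"role": "assistant", "content": reply})
    messages.append({"role": "tool", "content": result})
    return fake_model(messages)


answer = run_turn("What's the current weather in Paris?")
```

The application, not the model, executes the tool, so it remains the place to enforce permissions, timeouts, and logging.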

The Qwen team explicitly mentions function call support as a feature: “Qwen3-Omni supports function calling, enabling seamless integration with external tools and services.” They envision its use in agentic workflows, where the model can plan and execute multi-step tool-using strategies. In fact, upcoming enhancements are aimed at strengthening agent-based workflows with Qwen3-Omni. This aligns with the concept of an AI agent that can observe (via vision), listen (via audio), think, speak, and also act (via tools).

Integrating Qwen3-Omni into an agent framework (such as LangChain or custom orchestration) is facilitated by its chat-based interface and function-calling ability. Developers can provide a system message describing available functions and their JSON schema, and the model will output a function call if appropriate, just like OpenAI’s GPT-4 function calling mechanism.
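A function description in the OpenAI-style `tools` format looks roughly like the dictionary below. The `get_weather` tool and the `missing_arguments` helper are hypothetical; the layout follows the widely used JSON Schema convention for function parameters, and whether a given Qwen3-Omni endpoint accepts it unchanged is an assumption to verify.

```python
from typing import Any, Dict, List

WEATHER_TOOL: Dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name"},
            },
            "required": ["location"],
        },
    },
}


def missing_arguments(tool: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    """List required parameters that a proposed call did not supply."""
    required = tool["function"]["parameters"].get("required", [])
    return [name for name in required if name not in arguments]


ok = missing_arguments(WEATHER_TOOL, {"location": "Paris"})
bad = missing_arguments(WEATHER_TOOL, {})
```

Checking required arguments before executing a call gives the application a cheap way to reject malformed tool requests and ask the model to retry.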

The model’s training likely included some tool-use data, and it appears to have been fine-tuned to produce well-formed JSON for function calls. Although detailed documentation of the exact usage is still evolving (the Qwen blog indicates they are enhancing this), users of the open-source model have already reported that it can output function-call formats that are parseable and executable in a loop.

This capability is very powerful for enterprise automation. Qwen3-Omni can be the cognitive layer that decides when to fetch more information or perform an action. For instance, an enterprise workflow might involve: user asks a question that requires data from internal database → Qwen3-Omni outputs a call to a database query function → system executes it and returns result → Qwen3-Omni incorporates result into final answer. Similarly, it could interface with a search engine for knowledge queries, with calculators for math, or with any custom APIs (booking systems, inventory management, etc.) to complete tasks.

Because Qwen3-Omni is multimodal, the agent integration scenarios become even richer. Imagine a virtual assistant robot with a camera and microphone: Qwen3-Omni could interpret what it sees (via image input) and hears (via audio input), then call appropriate functions to act on that info.

For example, “There is a person at the door, should I let them in?” – the AI might use face recognition (a tool) on the camera image, identify the person as an employee, and output an action to unlock the door. While that specific tool (face recognition) is external, Qwen3-Omni provides the reasoning and orchestration to use it correctly (e.g., knowing when to call it, and using the result in context).

Integration is also facilitated by OpenAI-compatible APIs provided by Alibaba and platforms like SiliconFlow, where Qwen3-Omni is offered with a similar interface to ChatGPT. This means developers can use existing libraries (OpenAI SDK, etc.) to call Qwen3-Omni and handle functions in a familiar way.

To summarize, Qwen3-Omni isn’t just a passive model – it can be the center of an intelligent agent that perceives (vision/audio), reasons (LLM core), and acts (tool use). Enterprises can leverage this to build automation pipelines where the AI drives external APIs or processes. As of the current version, basic function calling is supported (you’ll need to manage the actual function execution externally), and future updates promise even tighter workflow integration and tool compatibility as the Qwen ecosystem grows.

This bridges the gap between AI understanding and real-world action, making Qwen3-Omni suitable for complex applications like robotic process automation, autonomous assistants, and multi-step AI services.

Enterprise and Research Use Cases

Qwen3-Omni’s multimodal and agentic capabilities unlock a wide array of use cases in enterprise settings and advanced research workflows. Below are some key scenarios where this model can be applied:

Virtual Multimodal Assistants: Enterprises can build AI assistants that see, hear, and speak. For example, a customer service avatar that not only chats with users but can also take voice input and respond with a human-like voice. Qwen3-Omni can power a virtual receptionist who recognizes a visitor (via camera image) and converses with them, or a smart meeting assistant that listens in meetings (audio) and shows relevant info on screen (text) when needed. These agents benefit from the model’s multimodal perception and real-time dialogue abilities.

Enterprise Automation & Orchestration: Qwen3-Omni can serve as the brain in automation pipelines. It can process incoming documents (scanned forms, images), emails, or support tickets that include screenshots/audio, and then trigger appropriate workflows. For instance, an IT helpdesk automation might use Qwen3-Omni to read an error screenshot and the user’s description, then call a script to fix the issue (via tool calling). The model’s integration with tools means it can coordinate tasks – essentially acting as a high-level orchestrator that understands context and decides on actions (retrieving data, updating records, etc.) in an RPA (Robotic Process Automation) system.

Intelligent Search and Multimodal Retrieval: With Qwen3-Omni, search systems can go beyond text keywords. You could query a knowledge base with an image or audio snippet and natural language, and the model can interpret the query and retrieve relevant information. For example, “Find all incidents similar to this screenshot” – Qwen3-Omni can describe the screenshot (maybe an error log) and then find text reports of similar errors. Or searching video archives by asking in plain language (the model can watch each video quickly to find a match). Its ability to produce embeddings across modalities or to generate captions on the fly makes it a powerful component for multimodal retrieval solutions. It essentially brings semantic understanding to images and audio, enabling unified search indexes.

Document and Video Analytics: In industries like finance or law, Qwen3-Omni can analyze complex documents that include text, tables, and images (charts) all in one. It could summarize a PDF report that has embedded graphics, or extract key figures from slides that have diagrams and text. Likewise for video analytics: think of security or compliance, where the model can watch CCTV footage and flag events (“a person entered a restricted area at 3pm”), or analyze user research sessions by transcribing and summarizing what was said along with actions on screen. These analytics become much more insightful when the AI considers both visual evidence and spoken/written content together. Qwen3-Omni’s training on cross-modal data helps it handle these tasks within a single model.

Scientific Research and Modeling: Researchers dealing with multimodal data – such as bioinformatics (images from microscopes + sensor readings), or environmental science (audio of animal calls + video footage) – can leverage Qwen3-Omni to interpret data in a holistic way. For example, in healthcare, it could read patient scans (images) and doctor’s notes (text) together to provide comprehensive reports. In computational science, one could use it to parse charts/graphs and text from papers to auto-generate summaries or suggest insights. The model’s large context and reasoning ability also mean it can help with literature review by reading multiple sources and cross-referencing them. Its multilingual ability is useful for global research collaboration (consuming papers in multiple languages). In educational settings, it could transcribe lectures and then answer students’ questions about the material, acting as a knowledgeable tutor that covers audio (lecture) and text (slides, textbooks).

Backend Media Processing Pipelines: Qwen3-Omni can be deployed in the backend to process large volumes of media content. For example, a social media platform could use it to automatically caption videos (for accessibility), detect inappropriate content in images/videos (via description + filtering), or transcribe podcasts for indexing. Because it’s one unified model, maintenance is easier than juggling separate vision and speech models. Also, thanks to open-source availability, companies can host it on-premises for privacy – e.g. processing internal security camera feeds or call center recordings without sending data to external APIs.

These scenarios highlight how Qwen3-Omni’s capabilities translate into real-world applications. From live transcription & translation services to multimodal customer support agents, the model is a foundation for building systems that understand and act on all sorts of data. Notably, it can enhance media accessibility – generating captions for videos for the deaf, or audio descriptions for images for the blind. It can also be used in education – e.g. transcribing and summarizing lectures or translating multilingual content on the fly for students.

In summary, any use case that involves multiple data types and complex reasoning stands to benefit from Qwen3-Omni. The fact that it is one model doing all of this means less system complexity and more coherent understanding across modalities. Enterprises looking to deploy advanced AI across their workflows will find Qwen3-Omni particularly valuable, as it can reduce the need for separate specialized models and enable more seamless AI-driven experiences.

Python Examples (Using Qwen3-Omni in Code)

To illustrate how developers can use Qwen3-Omni, let’s walk through a Python example leveraging the Hugging Face Transformers integration. First, ensure you have the Qwen3-Omni model and dependencies installed (as of Nov 2025, you may need to install transformers from source for the Qwen3-Omni support, and also install the qwen-omni-utils toolkit for processing multimedia inputs).

1. Loading the Model and Processor – Qwen3-Omni is available as a 30B parameter model. We load the instruct variant (which includes both Thinker and Talker for full capabilities):

from transformers import Qwen3OmniMoeForConditionalGeneration, Qwen3OmniMoeProcessor

from qwen_omni_utils import process_mm_info

MODEL_NAME = "Qwen/Qwen3-Omni-30B-A3B-Instruct"

model = Qwen3OmniMoeForConditionalGeneration.from_pretrained(

MODEL_NAME, device_map="auto", dtype="auto", attn_implementation="flash_attention_2"

)

processor = Qwen3OmniMoeProcessor.from_pretrained(MODEL_NAME)

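This initializes the model, automatically spreading it across available GPUs (device_map="auto") and loading the weights in the precision stored in the checkpoint (dtype="auto", typically BF16). The attn_implementation="flash_attention_2" setting assumes the flash-attn package is installed; drop that argument to fall back to the default attention implementation. The processor prepares inputs (tokenization for text and pre-processing for images/audio), and qwen_omni_utils.process_mm_info is a helper that loads and formats multimedia files or URLs for the model.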

2. Preparing a Multimodal Input – Qwen3-Omni expects a conversation-like input structure. We can format it as a list of messages, where each message has a role and a content. The content can include different types. For example, let’s say we want to ask the model about an image and an audio clip together:

conversation = [

{

"role": "user",

"content": [

{"type": "image", "image": "https://example.com/cars.jpg"},

{"type": "audio", "audio": "https://example.com/cough.wav"},

{"type": "text", "text": "What can you see and hear? Answer in one short sentence."}

]

}

]

Here we provide an image and an audio clip (both by URL) along with a text query. The process_mm_info helper fetches and decodes them; we then use the processor to convert everything into model inputs:

# Use helper to get raw tensors for audio/image

audios, images, videos = process_mm_info(conversation, use_audio_in_video=True)

# Apply chat template (adds special tokens, roles, etc.) and get tokenized text

text_input = processor.apply_chat_template(conversation, add_generation_prompt=True, tokenize=False)

inputs = processor(

text=text_input,

audio=audios, images=images, videos=videos,

return_tensors="pt", padding=True, use_audio_in_video=True

)

inputs = inputs.to(model.device).to(model.dtype)

The apply_chat_template call produces the combined text sequence with role indicators for the model (with tokenize=False it returns a string rather than token IDs), and process_mm_info loads the actual image pixels and audio waveform (note: if using local files, you can put file paths instead of URLs). Now inputs contains PyTorch tensors for the text tokens, plus the pre-processed image and audio features, ready for the model.
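The message structure above is easy to get wrong by hand, so it can help to build it with a small function. The following is a minimal sketch, not part of the Qwen tooling: `build_user_message` is a hypothetical helper name, and the `{"type": "video", "video": ...}` shape is assumed to mirror the image and audio entries shown earlier.

```python
from typing import Optional


def build_user_message(
    text: str,
    image: Optional[str] = None,
    audio: Optional[str] = None,
    video: Optional[str] = None,
) -> dict:
    """Build one user turn in the list-of-parts format used above.

    Each media argument is a URL or local file path. The text instruction
    is mandatory and always goes last, after the media parts.
    """
    if not text.strip():
        raise ValueError("a text instruction is required")
    content = []
    for kind, source in (("image", image), ("audio", audio), ("video", video)):
        if source is not None:
            # Media parts use the type name as the key, e.g. {"type": "image", "image": ...}
            content.append({"type": kind, kind: source})
    content.append({"type": "text", "text": text})
    return {"role": "user", "content": content}


conversation = [
    build_user_message(
        "What can you see and hear? Answer in one short sentence.",
        image="https://example.com/cars.jpg",
        audio="https://example.com/cough.wav",
    )
]
```

The resulting `conversation` list has the same shape as the hand-written one and can be passed to `process_mm_info` and `apply_chat_template` in the same way.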

3. Generating a Response – We can now run the model to generate an answer. The .generate() method is used since Qwen3-Omni is a generative model. We will also capture the audio output if any. For example:

import soundfile as sf

# generate() returns the text result and the audio waveform as two values

text_ids, audio = model.generate(**inputs, speaker="Ethan", thinker_return_dict_in_generate=True, use_audio_in_video=True)

# Decode only the newly generated tokens (everything after the prompt)

response_text = processor.batch_decode(

    text_ids.sequences[:, inputs["input_ids"].shape[1]:], skip_special_tokens=True

)[0]

print("Assistant text response:", response_text)

# If we want the spoken audio output:

if audio is not None:

    sf.write("response.wav", audio.reshape(-1).detach().cpu().numpy(), samplerate=24000)

    print("Audio response saved to response.wav")

In this snippet, we pass speaker="Ethan" to choose a voice (Ethan is one of the default male voices). We set thinker_return_dict_in_generate=True so that the text result comes back as a structured object with a .sequences field; in the Hugging Face integration the audio waveform is returned separately, as the second value (it is None when no audio was produced). After generation, we decode the new tokens to get the assistant’s text. We also save the audio array to a WAV file using SoundFile. When running this example, you would hear the model’s spoken answer if you play response.wav. For our cars & cough example, the response_text might be something like: “I see several cars parked on the street and I hear someone coughing.”

4. Extracting Structured Outputs – Qwen3-Omni can also produce answers in structured formats if prompted. For instance, if we want a JSON output, we can modify the user prompt accordingly. Suppose we have an image of a document (invoice) and we want the model to extract certain fields in JSON:

conversation = [

{"role": "user", "content": [

{"type": "image", "image": "invoice.png"},

{"type": "text", "text": "Extract the `invoice_date` and `total_amount` from this image. Provide the answer in JSON."}

]}

]

# ... (process conversation as above) ...

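# Text-only request: skip audio generation and keep the structured text result

text_ids, _ = model.generate(**inputs, thinker_return_dict_in_generate=True, return_audio=False)

response_text = processor.decode(text_ids.sequences[0, inputs["input_ids"].shape[1]:], skip_special_tokens=True)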

print(response_text)

The model might output something like: {"invoice_date": "2025-11-01", "total_amount": "$1,234.56"} as plain text, which our code can then parse as JSON. The quality of such structured output depends on prompt clarity, but Qwen3-Omni has been instruction-tuned to follow formatting instructions well.
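Because the model returns plain text, the JSON may arrive with a sentence before or after it. The sketch below shows one defensive way to parse such a reply using only the standard library. `parse_json_reply` is a hypothetical helper name, and the "take everything between the first `{` and the last `}`" strategy is a simple heuristic, not something Qwen3-Omni prescribes.

```python
import json


def parse_json_reply(reply: str, required_keys=()) -> dict:
    """Extract and parse the JSON object embedded in a model reply.

    Takes the span from the first '{' to the last '}', which tolerates
    surrounding prose or Markdown fences, then checks for required keys.
    """
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(reply[start:end + 1])  # raises ValueError on invalid JSON
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError(f"model reply is missing keys: {missing}")
    return data


reply = 'Here is the result:\n{"invoice_date": "2025-11-01", "total_amount": "$1,234.56"}'
fields = parse_json_reply(reply, required_keys=("invoice_date", "total_amount"))
print(fields["invoice_date"], fields["total_amount"])
```

Failing loudly on a missing key is deliberate: a retry with a stricter prompt is usually safer than passing a partial record downstream.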

This has been a quick tour of using Qwen3-Omni in Python. The example above illustrated loading the model, sending multimodal inputs, and obtaining both textual and audio outputs. For batch processing or more complex usage (like disabling the Talker for text-only use to save memory), the Hugging Face model card and Qwen’s GitHub provide additional examples. But even with the simple flow shown, developers can integrate Qwen3-Omni into applications – from Jupyter notebooks for research to backend servers responding to user queries.

REST API Examples (HTTP Requests for Qwen3-Omni)

For production deployments, you might prefer to use Qwen3-Omni via a RESTful API rather than running the model locally. Alibaba Cloud provides a Model Studio API for Qwen-Omni, and third-party platforms like SiliconFlow also host it. The API is designed to be OpenAI-compatible, meaning it follows the same schema as OpenAI’s chat/completion API (with some extensions for multimodality). Here we’ll show an example API request and response format.

API Request: To call Qwen3-Omni via HTTP, you make a POST request to the chat completions endpoint. For example (using Alibaba’s DashScope international endpoint):

POST https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions

Authorization: Bearer YOUR_API_KEY

Content-Type: application/json

{

"model": "qwen3-omni-flash",

"messages": [

{"role": "user", "content": "Who are you?"}

],

"modalities": ["text", "audio"],

"audio": {"voice": "Cherry", "format": "wav"},

"stream": true,

"stream_options": {"include_usage": true}

}

Let’s break this down. We specify the model name (qwen3-omni-flash is the latest production-optimized version). We provide a standard chat messages array with a user prompt. Here, we only use text in the prompt, but we request both "text" and "audio" in the output modalities. This tells the API we want the assistant’s answer in both written form and spoken form. The "audio" field further specifies the voice ("Cherry" is one of the available voice options) and the audio format (WAV). We set "stream": true because the API currently only supports streaming mode for Qwen-Omni. This means the response is sent as a stream of Server-Sent Events style data: lines, where each chunk may contain bits of text or audio.
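The same request can be assembled in Python before sending it. This is a minimal sketch using only the standard library: `build_chat_request` is a hypothetical helper, the endpoint and field names are copied from the request above, and the object is only constructed here, not sent.

```python
import json
import urllib.request

ENDPOINT = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str, voice: str = "Cherry",
                       want_audio: bool = True) -> urllib.request.Request:
    """Build (but do not send) a streaming chat request for qwen3-omni-flash."""
    payload = {
        "model": "qwen3-omni-flash",
        "messages": [{"role": "user", "content": prompt}],
        "modalities": ["text", "audio"] if want_audio else ["text"],
        "stream": True,  # the article notes that streaming is required
        "stream_options": {"include_usage": True},
    }
    if want_audio:
        # Voice and container format only apply when audio output is requested
        payload["audio"] = {"voice": voice, "format": "wav"}
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("YOUR_API_KEY", "Who are you?")
```

To send it, pass `request` to `urllib.request.urlopen` and iterate over the response line by line; each non-empty line is one `data:` event of the stream.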

Streaming Response: The API will start returning data chunks. A simplified view of what comes back:

data: {"choices":[{"delta":{"content":"I"},"index":0}],"object":"chat.completion.chunk"}

data: {"choices":[{"delta":{"content":" am"},"index":0}],"object":"chat.completion.chunk"}

...

data: {"choices":[{"delta":{"content":" Qwen, a multilingual AI model.","audio":{"data":"/v8AA..."}},"index":0}],"object":"chat.completion.chunk"}

...

data: {"choices":[{"finish_reason":"stop"}],"object":"chat.completion.chunk","usage":{"prompt_tokens":...,"completion_tokens_details":{"audio_tokens":...,"text_tokens":...}}}

Each data: line carries one JSON object. The first few chunks contain the text response piece by piece (e.g. “I”, then “ am”, etc.). One of the later chunks includes an "audio": {"data": "...base64..."} field. This is a portion of the audio, encoded in Base64 (because it’s binary audio data being sent as text). The client needs to collect all "audio": {"data": ...} segments from the stream, in order. Once "finish_reason":"stop" arrives, the message is complete. The final usage stats also indicate how many tokens were used for text and audio.

To handle this in code (Alibaba’s documentation includes a Python example), you accumulate the delta.content strings to get the full text, and collect the delta.audio.data strings and Base64-decode them to get the audio bytes. The audio bytes can then be saved as a WAV file (16-bit mono; Alibaba’s sample code writes it at 24 kHz, matching the local example above, so verify the sample rate against the current documentation). In our example, the assistant’s text might be “I am a large language model developed by Alibaba Cloud. My name is Qwen.”, and the audio data corresponds to that sentence spoken in Cherry’s voice.
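The accumulation step described above can be sketched as a small function over the raw `data:` lines. This is an illustrative sketch, not the official client: `collect_stream` is a hypothetical name, and the `[DONE]` sentinel is assumed from the OpenAI streaming convention rather than confirmed by this article.

```python
import base64
import json


def collect_stream(lines):
    """Assemble the full text and audio bytes from streamed 'data:' lines."""
    text_parts, audio_parts = [], []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and anything that is not an event
        body = line[len("data:"):].strip()
        if body == "[DONE]":  # assumed end-of-stream sentinel (OpenAI convention)
            break
        chunk = json.loads(body)
        for choice in chunk.get("choices", []):
            delta = choice.get("delta") or {}  # the final chunk may carry no delta
            if delta.get("content"):
                text_parts.append(delta["content"])
            audio = delta.get("audio") or {}
            if audio.get("data"):
                audio_parts.append(audio["data"])
    # Decode each segment on its own: joining Base64 strings first can break
    # if an intermediate segment ends with '=' padding.
    audio_bytes = b"".join(base64.b64decode(part) for part in audio_parts)
    return "".join(text_parts), audio_bytes
```

In a real client, `lines` would be the decoded lines of the HTTP response body, read as they arrive so that text can be displayed before the stream finishes.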

If you only want a text response (no audio), omit the "audio" field and use "modalities":["text"]. To include an image or other input in the API call, the OpenAI-compatible method is to put it in the user message as a typed content part, with the image encoded as Base64. For instance, SiliconFlow’s API expects an image_url content part whose URL is a Base64 data URI. The specifics may differ between providers, but essentially you provide the image bytes and the prompt in the JSON, and the model takes the image into account when answering.

Example (Image via API): (Pseudo-code)

{

"model": "qwen3-omni-flash",

"messages": [

{"role": "user", "content": [

{"type": "image_url", "image_url": {"url": "data:image/png;base64,<BASE64_DATA>"}},

{"type": "text", "text": "What does this picture show?"}

]}

],

"modalities": ["text"]

}

This would send an image and ask a question, expecting just text back.
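Producing the `data:` URI for the `image_url` part is a short Base64 step. The sketch below uses only the standard library; `image_part_from_bytes` and `image_question` are hypothetical helper names, and the MIME type is guessed from the file name, which is an assumption that will not hold for files with missing or misleading extensions.

```python
import base64
import mimetypes


def image_part_from_bytes(data: bytes, filename: str) -> dict:
    """Wrap raw image bytes as an OpenAI-style image_url content part."""
    mime, _ = mimetypes.guess_type(filename)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"cannot infer an image MIME type from {filename!r}")
    encoded = base64.b64encode(data).decode("ascii")
    return {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}}


def image_question(data: bytes, filename: str, question: str) -> dict:
    """Build a user message with one image followed by a text question."""
    return {
        "role": "user",
        "content": [
            image_part_from_bytes(data, filename),
            {"type": "text", "text": question},
        ],
    }


# Placeholder bytes stand in for a real file read with open(path, "rb").read()
message = image_question(b"\x89PNG placeholder", "chart.png", "What does this picture show?")
```

The returned `message` can be placed directly in the `messages` array of the request body shown above.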

From a backend integration standpoint, you can use the official SDK or simply requests to POST to this API. Because Qwen3-Omni’s API is “OpenAI-compatible”, many existing tools that work with OpenAI’s chat API can be pointed to the Alibaba endpoint by just changing the base URL and API key.

One thing to keep in mind: the Qwen-Omni API currently requires streaming mode, so you must handle streaming responses. The first tokens of the reply often arrive within a couple hundred milliseconds (the system is designed for very low latency). This is great for user experience, but it means your client code should be prepared to receive partial messages.

Response Handling: As illustrated, you’ll assemble the final text and audio from the stream. Once done, you can deliver the text to the user and play the audio or provide a download link for it. If using a platform (like SiliconFlow), it might abstract some of this. But at the raw HTTP level, this is the process.
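Once the audio bytes are assembled, they can be wrapped in a WAV container with Python's built-in `wave` module. This sketch rests on two assumptions that should be checked against the provider's documentation: that the decoded stream is raw 16-bit mono PCM without its own header, and that the sample rate is 24 kHz (the value used in the local example earlier). `pcm_to_wav_bytes` is a hypothetical helper name.

```python
import io
import wave


def pcm_to_wav_bytes(pcm: bytes, sample_rate: int = 24000,
                     channels: int = 1, sample_width: int = 2) -> bytes:
    """Wrap raw PCM samples in a WAV container and return the file contents.

    sample_width is in bytes (2 means 16-bit). The defaults are assumptions,
    not values confirmed by the API documentation.
    """
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(sample_width)
        wav.setframerate(sample_rate)
        wav.writeframes(pcm)
    return buffer.getvalue()


# Two 16-bit samples of silence stand in for real decoded audio
wav_bytes = pcm_to_wav_bytes(b"\x00\x00\x00\x00")
```

Writing to an in-memory buffer keeps the helper usable both for saving to disk and for returning the audio directly in an HTTP response. If the provider already sends a complete WAV file, this step is unnecessary.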

In summary, Qwen3-Omni is accessible via a robust API that supports multimodal I/O and function calling. The request above showed how to get both text and spoken output. The same API can be used to leverage the Thinking mode (by adding "enable_thinking": true in the payload to invoke chain-of-thought reasoning if needed). It can also call the dedicated captioning model if you specify that model name. For most enterprise uses, hooking into this API will be the practical way to deploy Qwen3-Omni at scale, as it offloads the heavy computation to managed servers. We saw how to include modalities and interpret the streamed results. With that knowledge, developers can integrate Qwen3-Omni into web services, mobile apps, or any system that can make HTTPS calls.

Prompt Engineering Best Practices (Multimodal Inputs)

Working with a powerful multimodal model like Qwen3-Omni requires careful prompt design to get the best results, especially when combining different types of inputs. Here are some best practices for prompt engineering with Qwen3-Omni:

Always provide a clear textual instruction along with any image/audio. Even though the model can “see” an image or “hear” an audio file, it still benefits greatly from explicit text telling it what to do with those inputs. For example, instead of just showing an image and saying “???”, ask “Describe this image in detail.” Or if you give an audio clip, include text like “Transcribe the following audio” or “What emotion is expressed in this audio?” in the user message. This clarifies the task and significantly improves accuracy. Essentially, treat multimodal inputs as additional context, but your actual question/command should be in text form every time.

Use system prompts to set context, persona, or format upfront. Qwen3-Omni supports system-level instructions that are not visible to the user, which you can use to guide behavior. For instance, you might have a system prompt: “You are Qwen-Omni, a helpful AI assistant that can see images and hear audio.” You can also include rules about style here (especially to manage speech output). The Qwen team demonstrated a long system prompt to ensure the Talker produces conversational speech (avoiding things like reading out punctuation or making lists). Key tips for voice output: instruct the model to avoid overly formal or structured text (since it will sound unnatural when spoken). Encourage a tone that matches how you want the speech to sound (e.g. “speak in a friendly and casual manner”). Because the Thinker and Talker can be controlled separately, you might include in the system prompt both a persona for the content and guidelines for the voice. For example: “When providing an audio answer, speak in a calm, clear voice.”

Maintain consistency in multi-turn dialogues and parameter usage. If you are having a conversation with multiple rounds, it’s important to keep certain parameters consistent. For example, if you set use_audio_in_video=True for a video input in one turn, do so for subsequent turns as well. In general, persist the system prompt throughout the conversation so the model doesn’t drift. Also, when the user or system wants to switch language or format, explicitly reset or change that in a new system message or by re-specifying in the user prompt (the model tends to stick to the last known style).

Leverage the “Thinking” mode for complex tasks via prompts. If you know a user query is complex (like a tricky reasoning puzzle or a multi-hop question), you can instruct the model with something like: “Think step by step.” Qwen3-Omni’s thinking model is trained to do this internally (or you can enable it via the API flag as mentioned). But even with the instruct model, a prompt like “Let’s think it through:” can trigger chain-of-thought style reasoning, which often leads to better answers. Just be cautious – if the Talker is enabled, you may not want it to read out the chain-of-thought. So one approach: use the thinking model variant for purely text output if you want the detailed reasoning, and use the instruct model for final answers. Or prompt the instruct model to do reasoning in hidden form (it might not reveal it anyway, since it usually only outputs the final result unless asked to show reasoning).

Control output length and detail via instructions. Qwen3-Omni can produce very detailed outputs (e.g. the audio captioner model purposely gives lengthy, precise descriptions). If you want a brief answer, say “Answer in one sentence” or “Keep the response concise.” Conversely, if you want detail, explicitly ask for it (e.g. “Describe everything you notice in the video”). The model is fairly good at following these length and detail instructions due to fine-tuning. When dealing with long inputs (like a 30-min audio), you likely want a summary – so prompt: “Summarize this meeting in 5 bullet points.” The clarity here will affect whether you get a usable summary or an overly verbose transcript.

Format outputs for downstream use. If the next step in your pipeline is machine processing of the model’s output (for example, another program parsing JSON, or text-to-speech reading the answer), format accordingly. Qwen3-Omni can output in JSON, XML, CSV, bullet lists, etc. if you ask. Always specify: “Output only JSON with the following keys…” to avoid extraneous text. The model’s flexibility is a double-edged sword – it might add explanatory text if not instructed to stick to format. So be direct: “No explanations, just the JSON.” Similarly, for speech output, if you want just the spoken content with no asides, instruct the model not to include e.g. sound effect descriptions or bracketed text, since the Talker will literally speak whatever text is generated.

Utilize persona and style tuning for user satisfaction. Qwen3-Omni allows persona switching using system prompts. For a professional setting, you might set a formal tone; for an educational tutor, a patient encouraging tone; for a kids app, a playful tone. The model will adhere to these stylistic guidelines quite well (thanks to RLHF training). One can even adjust how the model refers to itself and the user (the example system prompt ensures the assistant uses “I” for itself and “you” for user, handling pronouns carefully – such consistency matters in voice interfaces).

Know the limitations of modalities and prompt around them. For instance, if an image is very complex or unclear, the model might hallucinate details. It’s good to ask specific questions about images rather than “Tell me everything,” to focus it. For audio, if there are multiple speakers but the model isn’t great at diarization (speaker separation), you might not get perfect results – a prompt like “there are two speakers, label their statements” could help, but it might not be fully reliable until multi-speaker support improves. Being aware of these limits (discussed more in the next section) will help you craft prompts that avoid asking the impossible.

In summary, effective multimodal prompting with Qwen3-Omni involves clarity, explicit instructions, and sometimes additional system context. Provide text guidance for every input, use system prompts to lock in behavior, and format outputs to your needs. The result can be remarkably accurate and useful responses that leverage the full breadth of the model’s capabilities. As always with prompt engineering, a bit of experimentation is needed to find what phrasing yields the best result for your particular task.
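Several of these practices (a persistent system prompt, a mandatory text instruction, explicit length and format constraints) can be enforced in code rather than left to convention. The sketch below is one way to do that: `build_prompt` is a hypothetical helper, the constraint sentences are the example phrasings quoted above, and the list-of-parts system message format is assumed to match the user message format from the Python section.

```python
def build_prompt(task, media=(), system_prompt=None, json_keys=None, concise=False):
    """Assemble chat messages that follow the prompting practices above.

    task:          the text instruction (always required)
    media:         content parts such as {"type": "audio", "audio": "clip.wav"}
    system_prompt: persona or style rules, sent as a system message
    json_keys:     if given, request JSON-only output with these keys
    concise:       if True, ask for a short answer
    """
    if not task.strip():
        raise ValueError("every request needs an explicit text instruction")
    instruction = task.strip()
    if concise:
        instruction += " Keep the response concise."
    if json_keys:
        instruction += (
            " Output only JSON with the following keys: "
            + ", ".join(json_keys)
            + ". No explanations, just the JSON."
        )
    messages = []
    if system_prompt:
        messages.append(
            {"role": "system", "content": [{"type": "text", "text": system_prompt}]}
        )
    content = [dict(part) for part in media]  # copy so callers' dicts are untouched
    content.append({"type": "text", "text": instruction})
    messages.append({"role": "user", "content": content})
    return messages


messages = build_prompt(
    "Summarize this meeting in 5 bullet points.",
    media=[{"type": "audio", "audio": "meeting.wav"}],
    system_prompt="You are Qwen-Omni, a helpful AI assistant that can see images and hear audio.",
)
```

Reusing the same `system_prompt` value on every turn is a simple way to keep the persona from drifting in multi-turn conversations.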

Performance Considerations (Latency, Context, Resource Usage)

Deploying a 30B multimodal model like Qwen3-Omni requires understanding its performance characteristics and resource needs. Here are some important considerations:

Latency and Throughput: Qwen3-Omni is optimized for real-time interaction. Thanks to the multi-codebook streaming approach, it achieves extremely low latency for first token and first audio frame. Internal benchmarks show ~211 ms response latency in audio-only tasks, and ~0.5 second latency in audio+video scenarios (likely for the first content to appear). This is end-to-end latency including audio generation, which is impressive for a model of this size. In practical terms, users will start hearing the model’s speech almost immediately. However, overall response time does scale with input length and output length – processing a 30-minute audio obviously takes longer than a 30-second clip. The model uses windowed attention and other tricks to handle streaming, but be mindful that very large inputs (like tens of thousands of tokens) will incur more computation.

Context Window (Max Sequence Length): Qwen3-Omni supports a huge context window – up to 65,536 tokens in the latest “flash” version for thinking mode. In non-thinking mode it’s around 49k tokens of context. This is larger than many earlier LLMs (the original GPT-4, for instance, topped out at 32k). A 65k context means roughly 50 pages of text or a 4-hour audio transcript could, in theory, be processed in one go (though that would be extremely heavy). The input (prompt) part is typically limited to around 16k tokens, with additional space allocated for the model’s chain-of-thought and output. When planning usage, if you have very long documents or transcriptions, Qwen3-Omni can handle them, but watch out for memory and time – see below.

Memory and Compute Requirements: This model is resource-intensive. Running Qwen3-Omni-30B with both Thinker and Talker at full capacity requires a multi-GPU setup. The developers provide a table of minimum GPU memory needed for various input durations. For example, processing a 15-second video with the 30B instruct model in BF16 precision took around 79 GB of GPU memory; a 60-second video input can demand over 107 GB. These figures assume usage of FlashAttention and memory optimizations, so in practice the 15-second case already needs about four 24 GB GPUs, and the 60-second case needs five or more (or two 80 GB GPUs). If you disable the Talker (text-only mode), memory usage drops by roughly 10 GB, as noted by the Qwen team. There are ways to reduce the footprint: you can use 4-bit or 8-bit quantization at some quality cost, or deploy on a GPU cluster with model parallelism. Alternatively, using the API service offloads this, but then you’re paying per token. For researchers with limited hardware, loading Qwen3-Omni on CPU is possible but extremely slow (not recommended for real-time use). A happy medium is to use vLLM or other optimized inference engines, which can better handle the large context.

Resolution and Modal Limits: For images, the model’s vision encoder likely works on a fixed input size (like 224×224 or 384×384 pixels). If you feed a very high-res image, it will be resized, and very small text in the image may no longer be legible. For video, the model samples frames at a dynamic rate to align with audio at 12.5 Hz. This means long videos are sub-sampled, and if something happens between sampled frames (blink-and-miss), the model might not catch it. Extremely long videos (beyond a few minutes) are also not practical to feed in whole because of token limits, so split them into chunks; the model can handle ~30 min of audio, but 30 min of video frames is far larger, even with dynamic frame sampling. Audio is similarly divided into 80 ms frames (12.5 per second), so a 32k-token budget (32,768 × 0.08 s ≈ 2,621 s) corresponds to roughly 40 minutes of audio in a single sequence. If you have longer audio, process it in segments. All this is to say: Qwen3-Omni can handle a lot, but not unlimited input. Enterprises should plan to chunk very large media and possibly summarize incrementally.

Concurrency and Scaling: The MoE design and the separation of Thinker/Talker allow some scaling benefits. MoE means certain layers have multiple expert sub-networks, and at inference only a few of them are active for each token (the A3B in the model name indicates about 3B active parameters out of 30B). This lowers the compute per token, which can improve throughput, and the experts can be spread across multiple devices. The architecture is aimed at high concurrency, meaning serving multiple user sessions in parallel on a server, potentially more efficiently than a dense model of the same total size (in theory). In practice, using the official API, concurrency is handled behind the scenes. If self-hosting, you’d want to use libraries like vLLM, which specialize in serving many requests by reusing KV caches, etc. Given the large context, also be mindful of per-token speed: generating each token can be slower when the context is huge (due to self-attention cost). But if you have streaming on, the user will get tokens as they’re ready, which mitigates perceived delay.

Flash vs Non-Flash Models: In the documentation, “qwen3-omni-flash” refers to a specific performant variant (likely using FlashAttention and other optimizations). There was also mention of an older “qwen-omni-turbo” with smaller capabilities. For best performance and features, one should use the latest Flash model. It’s essentially the same architecture but tuned for speed. The context sizes given (65k) apply to flash. The older turbo was 32k context and fewer languages. So ensure you’re using updated versions to get the performance improvements and extended multilingual support.

Batching: Qwen3-Omni can batch process multiple multimodal inputs together (the HF code demonstrates batching up to 4 mixed inputs). However, when audio output is needed, currently it doesn’t support batching audio generation (they disable Talker in batch mode). So, if you need to scale to many requests, you might separate text-only queries (which you can batch) from audio-output queries (which might be handled individually or with streaming). Batching can help throughput significantly for pure text uses (the model becomes similar to a 30B LLM which is batch-friendly).

First-packet vs full response latency: The 234 ms number often cited is the theoretical latency of the first audio packet in a cold start, in the same range as the ~211 ms audio-only figure above. That’s great for user perception – you hear something in under 0.3s. The model will continue generating the rest. If the answer is long, the remaining time will depend on length (speaking 50 tokens might take a few seconds). The system is tuned so that audio is output frame by frame without waiting for whole sentences. This is important for voice assistant use. If using text only, first token latency is similarly low, and tokens stream out. Comparatively, older models or naive approaches might have added 1–2 seconds overhead for buffering; Qwen3-Omni essentially streams immediately. High concurrency scenarios should be tested for throughput – MoE and efficient attention help, but there’s always a limit to how many parallel sessions one GPU can handle. Profiling with your hardware and typical input sizes is recommended.

In summary, Qwen3-Omni offers cutting-edge performance for a model of its size – particularly in its real-time streaming ability and enormous context support. But it also demands cutting-edge infrastructure (multiple GPUs or cloud instances with plenty of memory) to unleash its full potential. When planning deployment, consider using optimized inference engines and possibly the official cloud service if you cannot meet the hardware requirements on-premise. The latency is low enough for interactive applications (it truly enables live conversations and instant multimedia analysis), but watch out for the heavy memory usage on long inputs. If needed, you can trim inputs (e.g., image resizing, summarizing long text before feeding) as a pre-processing step to fit within feasible limits. The good news is that Qwen3-Omni was built with production in mind – hence the “Flash” version – so it’s arguably one of the most deployable large multimodal models to date given its balance of capability and optimization.
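The audio framing and GPU memory figures quoted in this section lend themselves to quick back-of-the-envelope planning. The sketch below encodes that arithmetic under simplifying assumptions: one token per 80 ms audio frame with no text or video tokens counted, and a GPU count that is a bare lower bound ignoring per-device overhead. All function names are hypothetical.

```python
import math

AUDIO_FRAMES_PER_SECOND = 12.5  # one frame every 80 ms, as described above


def audio_tokens(seconds: float) -> int:
    """Approximate token count for an audio clip (assumes one token per frame)."""
    return math.ceil(seconds * AUDIO_FRAMES_PER_SECOND)


def max_audio_seconds(token_budget: int) -> float:
    """Longest audio clip, in seconds, that fits in the given token budget."""
    return token_budget / AUDIO_FRAMES_PER_SECOND


def chunk_spans(total_seconds: float, max_seconds: float) -> list:
    """Split a long recording into consecutive (start, end) spans in seconds."""
    if max_seconds <= 0:
        raise ValueError("max_seconds must be positive")
    total = float(total_seconds)
    spans, start = [], 0.0
    while start < total:
        end = min(start + max_seconds, total)
        spans.append((start, end))
        start = end
    return spans


def gpus_needed(required_gb: float, per_gpu_gb: float) -> int:
    """Lower bound on GPU count; ignores activation and framework overhead."""
    return math.ceil(required_gb / per_gpu_gb)


print(audio_tokens(30 * 60))              # tokens for a 30-minute recording
print(max_audio_seconds(32768) / 60)      # minutes of audio in a 32k budget
print(chunk_spans(3600, 1500))            # a 1-hour file in 25-minute pieces
print(gpus_needed(79, 24), gpus_needed(107, 24))  # 24 GB cards for 15 s / 60 s video
```

Treat the results as planning estimates only; actual limits depend on prompt text, video frames, and the serving stack, so profiling on real hardware remains necessary.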

Limitations and Considerations

While Qwen3-Omni is a state-of-the-art multimodal model, it’s not without limitations. Developers should be aware of these and design systems to mitigate them:

Huge Model Size & Compute Cost: As discussed, 30B total parameters (MoE, so only a fraction is active per token, but all of them must sit in memory) plus a large context and multimodal encoders make this model computationally expensive. Running it in real time requires top-end GPUs and lots of VRAM. This can be a limiting factor for smaller organizations or on-device applications. Quantization or using a smaller variant (if released in the future) might be necessary for low-resource environments. There’s also power consumption and cost to consider – serving many queries with a 30B model can rack up cloud GPU bills (though SiliconFlow’s listed pricing is about $0.1 per 1M input tokens and $0.4 per 1M output tokens, which can be manageable depending on usage).

Modal Trade-offs in Extreme Cases: The Qwen team achieved no performance degradation overall across modalities, but that is within the range of tasks and data they tested. It’s possible that on some niche tasks, a dedicated model might still outperform Qwen3-Omni. For example, highly specialized vision tasks (like medical imaging diagnostics) or audio tasks (like polyphonic music transcription) might require fine-tuning the model or using a specialist. Qwen3-Omni was not specifically trained on medical or legal data, for instance, so domain-specific knowledge might be limited compared to specialized models.

No Visual Output (No Image Generation): Qwen3-Omni can describe images but cannot generate images. If you ask it to create an image, it will likely say it doesn’t have that ability or give a textual description instead. This model should be thought of as analysis/understanding oriented for vision, not generative like DALL-E or Stable Diffusion. Similarly, it can’t generate video (only analyze it). Speech audio is the one non-text modality it generates; it does not produce music, sound effects, or other new media content.

Potential Errors in OCR and Fine Details: The vision encoder is strong but reading fine text in images (OCR) can still be challenging, especially if text is small or stylized. While one of the example use cases is OCR on complex images, results may vary. It might misread characters or skip some parts. If critical, one might pair Qwen3-Omni with a dedicated OCR engine and then feed the extracted text back for higher-level reasoning.

Limited Multi-Speaker Handling: The current audio understanding is excellent for single-speaker input (e.g. dictation, monologues). However, speaker diarization (distinguishing multiple speakers in one audio) is not a focus of the model’s training. The Qwen team specifically mentions working on multi-speaker ASR in the future. So, if given a conversation recording with overlapping speakers, Qwen3-Omni might produce a combined transcript without labeling who is who. Workarounds include pre-separating speakers with an external tool, or prompting the model to distinguish speakers (it might attempt to label them as Speaker A/B, but accuracy is not guaranteed without fine-tuning).

No Real-Time Vision (No video feed analysis beyond provided clip): One has to feed a video file or frames. Qwen3-Omni doesn’t have a notion of continuous vision input beyond a single query. If you need a continuous video stream analysis, you’d have to break it into segments and call the model repeatedly, which could be slow or disjointed. Also, “video OCR” is mentioned as a future improvement, implying that reading text that appears in video frames over time might be something they want to handle better.

Hallucination and Accuracy Limitations: As with any LLM, Qwen3-Omni can still hallucinate information, especially in open-ended Q&A. The team tried to reduce hallucinations (e.g., the audio captioner model is tuned for low-hallucination descriptions of sounds), but they have not been eliminated. If the model is unsure, it might make up a plausible answer. This is especially tricky in vision, where it might assert something about an image that isn’t there if the prompt biases it, and in audio, where it might “hear” a word in unclear speech. For mission-critical applications, you therefore need human verification or confidence checks. It also helps to tell the model explicitly in the prompt that it is allowed to answer “I’m not sure” (it tends to be more factual than some models, but caution is still due).
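One lightweight way to combine these two ideas is to give the model explicit permission to abstain and then route abstentions to a person. The sketch below is illustrative only: the instruction wording, the phrase list, and the name `needs_human_review` are assumptions, and a check like this catches admitted uncertainty, not confident hallucinations.

```python
import re

# Instruction to add to the system or user prompt (wording is an assumption).
ABSTAIN_INSTRUCTION = (
    "If the image, audio, or text does not clearly support an answer, "
    "reply with \"I'm not sure\" instead of guessing."
)

# Phrases treated as an explicit admission of uncertainty (illustrative list).
_UNSURE = re.compile(r"\b(i'?m not sure|i am not sure|cannot determine|unclear)\b", re.IGNORECASE)


def needs_human_review(answer: str) -> bool:
    """Flag answers that are empty or that admit uncertainty."""
    normalized = answer.replace("\u2019", "'").strip()  # treat curly apostrophes like straight ones
    return not normalized or bool(_UNSURE.search(normalized))
```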

Biases and Ethical Considerations: Multimodal models carry the risk of biases from training data. Qwen3-Omni was trained on a large multilingual corpus and presumably lots of web images/videos. It may have embedded stereotypes or biases – e.g., misidentifying people in images if training data was skewed, or being less accurate for languages that had less data. The Qwen team likely applied some alignment and filtering, but those details aren’t fully known. Enterprises should evaluate the model on their specific domain for fairness and accuracy. Because it’s open source, one can fine-tune or adjust if needed (within the license terms, which allow commercial modification under Apache 2.0).

System and Prompt Leaking: In chat mode, make sure system prompts and any internal usage instructions are framed so that the model doesn’t reveal them. Qwen3-Omni is trained with system, user, and assistant roles, similarly to ChatGPT, so it usually won’t reveal system content, but prompt hijacking is always a possibility if an adversarial user tries to get it to break character. The robust solution is to filter user content and never fully trust the model to resist cleverly crafted input (it likely has built-in guardrails from training, since Alibaba would have aligned it as it did the Tongyi Qianwen models, but these should not be the only defense).

Emergent Issues with Long Contexts: Although the model supports a 65k context, not all parts of that context are attended to with equal fidelity. This follows from the nature of positional encoding: even with rotary embeddings, very long sequences can see some degradation in attention to distant history. The technical report hints at challenges with positional extrapolation beyond the lengths seen in training. So while the model can go to 65k, the quality of its answers may drop as the context approaches that limit. Memory and speed constraints also mean you might not push it to the maximum in practice.

Evolving Software Support: As of the end of 2025, the Hugging Face integration had only just been merged, so you need a very recent version of the transformers library. Some tools might not fully support the multimodal input format out of the box yet (e.g., certain libraries might assume text-only input). This will improve over time, but early adopters might face integration quirks. Using the provided Qwen3OmniMoeProcessor and utility functions is key.

To sum up the limitations: Qwen3-Omni is at the frontier of multimodal AI, so it inherits both the strengths and some of the imperfections of that frontier. It performs remarkably well across a broad set of tasks, but careful testing is needed for any specific application. Keeping a human in the loop is recommended for critical tasks.

Many of these limitations (such as multi-speaker ASR and broader language coverage) have already been identified by the team and are likely to be addressed in future versions. Because the model is open, the community can also fine-tune or improve it (for example, someone could fine-tune it on medical images or specific languages).

From an engineering standpoint, allocate ample resources and implement fallbacks: for example, if the model fails to extract something, log the failure for review or retry with a different prompt. By being mindful of these considerations, developers can mitigate risks and leverage Qwen3-Omni’s capabilities to their fullest.
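The fallback idea can be sketched as a small retry loop. Everything here is hypothetical scaffolding: `ask` stands in for your model call, `is_valid` for whatever check defines a usable answer in your application, and the logger name is arbitrary.

```python
import logging
from typing import Callable, Iterable, Optional

logger = logging.getLogger("qwen3_omni_fallback")


def extract_with_fallback(
    ask: Callable[[str], str],
    prompts: Iterable[str],
    is_valid: Callable[[str], bool],
) -> Optional[str]:
    """Try each prompt in order; return the first valid answer, else None."""
    for attempt, prompt in enumerate(prompts, start=1):
        try:
            answer = ask(prompt)
        except Exception:
            # A failed call is logged with its traceback, then the next prompt is tried.
            logger.exception("attempt %d raised an error", attempt)
            continue
        if is_valid(answer):
            return answer
        logger.warning("attempt %d gave an unusable answer: %r", attempt, answer)
    logger.error("all prompts failed; item needs human review")
    return None
```

Returning `None` instead of a best guess makes it easy for the caller to send the item to a review queue.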

Developer FAQs

Finally, let’s address some frequently asked questions that developers and engineers might have about Qwen3-Omni:

What model variants are available and what’s the difference between Instruct, Thinking, and Captioner models?

What are the hardware requirements to run Qwen3-Omni locally? Can I run it on a single GPU or CPU?

model.disable_talker() to save ~10 GB VRAM. No smaller variant has been published yet (for example, there is no 7B or 14B Qwen3-Omni as of now), so 30B is the only size available.

How do I make Qwen3-Omni call external tools or functions in practice?
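As a concrete picture of the loop this question is about, here is a minimal sketch of the detect, parse, execute, and feed-back steps. It assumes the model emits a JSON object with "function" and "args" keys, as in the example output quoted in the answer. The `TOOLS` registry, the stubbed `getWeather`, both helper names, and the shape of the result message are hypothetical, not part of any confirmed Qwen3-Omni API.

```python
import json
from typing import Any, Callable, Dict, Optional

# Registry of callable tools; getWeather is a stub standing in for a real API call.
TOOLS: Dict[str, Callable[..., Any]] = {
    "getWeather": lambda city: {"city": city, "forecast": "sunny"},
}


def parse_function_call(model_output: str) -> Optional[Dict[str, Any]]:
    """Return the parsed call if the output is a JSON object with a "function" key."""
    text = model_output.strip()
    if not text.startswith("{"):
        return None
    try:
        payload = json.loads(text)
    except json.JSONDecodeError:
        return None
    if isinstance(payload, dict) and isinstance(payload.get("function"), str):
        return payload
    return None


def run_tool_step(model_output: str) -> Optional[Dict[str, str]]:
    """Execute a requested tool and build the message that carries its result back."""
    call = parse_function_call(model_output)
    if call is None:
        return None  # ordinary answer, nothing to execute
    tool = TOOLS.get(call["function"])
    args = call.get("args", {})
    if tool is None or not isinstance(args, dict):
        result: Any = {"error": f"cannot run function {call['function']!r}"}
    else:
        result = tool(**args)
    # The role used for tool results is an assumption; use what your chat template expects.
    return {"role": "assistant", "content": json.dumps({"function_result": result})}
```

When `run_tool_step` returns a message, append it to the conversation and call the model again so it can phrase the final answer; when it returns `None`, treat the model output as the answer.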

getWeather(city) that returns the weather for a city.” Provide input/output format), (2) ask a question that would require that function. If all goes well, the model will output something like {"function": "getWeather", "args": {"city": "Paris"}} instead of a normal answer. Your code should detect this (e.g. by checking that the output starts with { and contains a "function" key), parse it, execute the actual function (say, call a weather API), then feed the result back into the model (e.g. as the assistant’s function result message). This is similar to how you’d orchestrate GPT-4’s function calling. The Qwen-Omni blog confirmed that it supports the function call format. We expect more examples and built-in support to come. If you are using the Alibaba API, there might be a parameter to integrate function calling directly (OpenAI’s API has a functions field; if Alibaba’s is compatible, it could have the same). If not, you can always do the above manually via prompts. Keep an eye on the official docs as they expand tool usage support.

Is Qwen3-Omni really open source for commercial use?

How can I fine-tune or customize Qwen3-Omni for my specific domain or tasks?

How do I handle very long inputs (like a 200-page document or 2-hour video)?

What voices are available for the Talker (speech output), and can it speak in different accents or genders?

speaker or voice parameter. In HuggingFace generate(), we saw speaker="Ethan" used; the API example used "voice": "Cherry". These presumably correspond to specific voice profiles (likely internal codebooks tuned to sound like different speakers). The voices cover different genders and perhaps accents or styles. For example, Cherry might be a female voice and Ethan a male one, and there could be voices for Chinese and other languages. The documentation hints that 10 output languages are supported, so the voices should at least cover those languages with proper pronunciation. If you set a voice that doesn’t match the language (say, an English voice for Chinese text), the accent might be off or the output could even mix languages; the behavior is unclear. Ideally, choose a voice that matches the language, e.g. a Chinese voice for Chinese output. The full list of voice names isn’t given in the docs we saw, but it presumably includes a variety (common English names, etc.). If you use the open-source model locally, it might default to one voice when none is specified. The API expects you to specify one if you request audio. So yes, multiple voices are supported; pick the one that suits your use case. Over time, the team might add more regional accents or expressive styles.

How does Qwen3-Omni handle user privacy and sensitive data?

These FAQs address common points of curiosity and concern. Qwen3-Omni is a complex tool, but understanding its variants, how to integrate it, and its limitations will empower developers to build great solutions with it.

As the model and its ecosystem evolve (we might see updates like Qwen4, etc.), some answers may change, but the principles of multimodal LLM usage will remain similar.

Always refer to the latest documentation and community forums for up-to-date tips, and happy building with Qwen3-Omni – this model truly opens up new frontiers for AI applications.